AI Time Horizons Are Growing Exponentially

Tasks, Time, and Tomorrow.

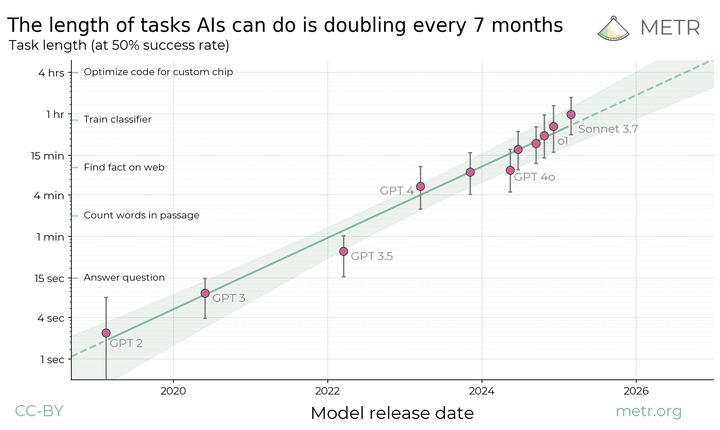

A recent paper by researchers at METR found that the length of tasks AI agents can complete, known as their time horizon, has grown exponentially over the last 6 years, doubling approximately every 7 months.

What does this actually mean?

The research methodology is quite simple. In short, the researchers got skilled humans and AI systems to attempt to perform tasks under similar conditions. They then measured how long it took humans to perform the tasks, and how the success rate of the AIs varied depending on how long it took the humans to perform the tasks.
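To make the idea concrete, here is a minimal sketch of how a time horizon could be read off from such measurements. The data points are invented purely for illustration, and the method (straight-line interpolation in log time) is a simplification chosen for brevity; the paper's own fitting procedure may differ. The function name `time_horizon_minutes` is hypothetical.

```python
import math

# Hypothetical data: (time a skilled human needs, in minutes; AI success rate
# on tasks of that length). These numbers are invented for illustration only.
SUCCESS_BY_HUMAN_MINUTES = [
    (1, 0.95),
    (4, 0.85),
    (15, 0.70),
    (60, 0.50),
    (240, 0.20),
    (960, 0.05),
]


def time_horizon_minutes(points, threshold=0.5):
    """Estimate the task length at which success falls to `threshold`.

    Interpolates linearly in log2(time) between the two neighbouring
    measurements that bracket the threshold. Returns None if the
    threshold is never crossed.
    """
    points = sorted(points)
    for (t0, p0), (t1, p1) in zip(points, points[1:]):
        if p0 >= threshold >= p1 and p0 != p1:
            frac = (p0 - threshold) / (p0 - p1)
            log_t = math.log2(t0) + frac * (math.log2(t1) - math.log2(t0))
            return 2 ** log_t
    return None


if __name__ == "__main__":
    horizon = time_horizon_minutes(SUCCESS_BY_HUMAN_MINUTES)
    print(f"50% time horizon: about {horizon:.0f} minutes")
```

Raising `threshold` (for example to 0.8) gives a shorter horizon, since a higher required success rate is only met on shorter tasks.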

Which tasks did they measure performance on?

The tasks they used for testing were largely from the RE-Bench and HCAST benchmarks.

We discussed RE-Bench a couple of weeks ago in our article “From Intelligence Explosion to Extinction”, and how it was evident from results on that benchmark that AI time horizons were increasing.

The important thing about RE-Bench is that it measures and compares the performance of humans and frontier AIs on AI research and engineering tasks. This is a particularly relevant domain, as when AIs are able to perform on the level of skilled humans in AI R&D, it is plausible that an intelligence explosion could be initiated, where AI development is driven by AIs improving AIs, at an accelerating rate much faster than human-driven AI development. We could quickly end up with AI systems vastly more intelligent than humans, that we have no way to ensure are safe or controllable, and the entire process would occur so rapidly that it would likely be impossible for us to have any reasonable degree of oversight or control over it.

RE-Bench doesn’t measure all the tasks that go into AI R&D; it focuses on engineering. Saturation on this benchmark would therefore not automatically mean that an intelligence explosion is possible, but it could serve as a leading indicator.

HCAST is a more diverse benchmark, and also includes tasks relating to cybersecurity and general reasoning.

Some commentators have suggested that these results might not generalize to other types of tasks, including real-world ones. The paper authors do acknowledge this, but they also ran experiments on a different benchmark, SWE-bench Verified, which measures the ability of AIs to perform software engineering tasks, and found a similar trend there.

Notably, the doubling time they observed on those tasks was much shorter, suggesting a much faster exponential trajectory than the 7-month headline result.

Why does it matter that AI task horizons are growing exponentially?

How long it takes a skilled human to perform a task seems like a useful way of gauging the difficulty of a problem. If we define intelligence as the ability to solve problems (a common definition), then measuring task horizons could be a useful proxy for the measurement of intelligence.

But the really important observation here is that task horizons are growing exponentially. Every 7 months, the length of task that AIs can complete at a given success rate doubles. Today, Claude 3.7 Sonnet can complete tasks that take humans an hour, with a 50% success rate. In 7 months, if this trend holds, AIs will be able to complete tasks that take 2 hours, and in 7 more months they’ll have a task horizon of 4 hours.
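The arithmetic behind this can be sketched in a few lines. This is only an illustration of constant-doubling growth using the figures quoted above (a 1-hour horizon today, doubling every 7 months); the function name and defaults are our own, and nothing here says the trend will actually hold.

```python
def projected_horizon_hours(months_ahead, start_hours=1.0, doubling_months=7.0):
    """Horizon after `months_ahead` months, assuming a constant doubling time."""
    return start_hours * 2 ** (months_ahead / doubling_months)


if __name__ == "__main__":
    # Each step of 7 months doubles the horizon: 1, 2, 4, 16, 256 hours.
    for months in (0, 7, 14, 28, 56):
        hours = projected_horizon_hours(months)
        print(f"{months:>2} months from now: {hours:g} hours")
```

Under these assumptions, four doubling periods (28 months) give a 16-hour horizon and eight (56 months) give 256 hours, which is where intuition tends to lag behind the numbers.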

Exponentials are notoriously difficult for humans to form good intuitions about without actually doing the modeling. A number that seems small and insignificant one day can, over a short period of time, grow to be huge and world-changing.

This paper serves as a clear piece of evidence that AI capabilities are indeed growing exponentially, and this fact poses a grave threat to our species.

That’s because we have no way to ensure that AI systems more powerful than ourselves are safe, and we are on a trajectory where AIs do in fact rapidly surpass us.

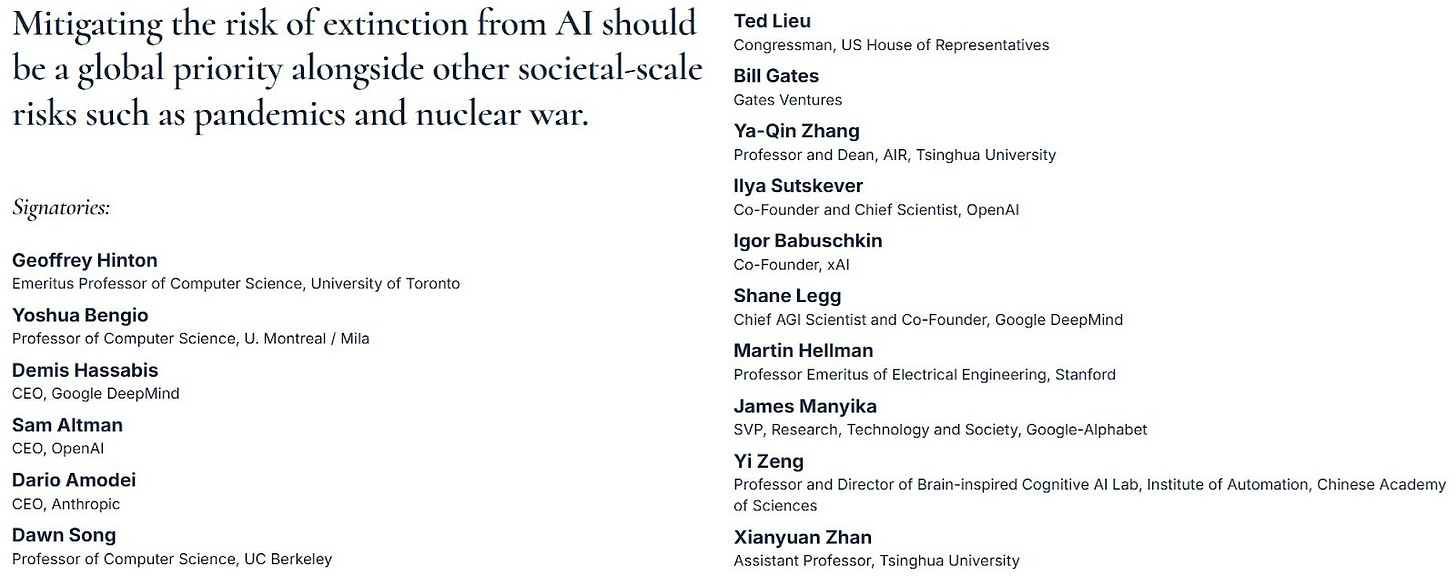

Nobel Prize winners, hundreds of top AI scientists, and even the CEOs of the biggest AI companies have warned that AI poses an extinction threat to humanity.

Exponentials are particularly difficult to react to. As Connor Leahy notes, “There are only two times you can react to an exponential: Too early, or too late.”

Is it really an exponential?

The authors of the paper give a straightforward reason for fitting an exponential curve to the data: “the fit is very good”.

And indeed, we can see that when plotted on a logarithmic scale, a straight line trend is clearly visible. A straight line on a logarithmic scale is equivalent to an exponential on a linear scale.
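To spell out why, here is a short derivation. The symbols are our own notation rather than the paper's: $h(t)$ is the time horizon at time $t$, $h_0$ is the horizon at $t = 0$, and $T$ is the doubling time.

$$
h(t) = h_0 \cdot 2^{t/T}
\quad\Longrightarrow\quad
\log_2 h(t) = \log_2 h_0 + \frac{t}{T}
$$

The right-hand side is linear in $t$ with slope $1/T$, so with $T = 7$ months the line rises by one doubling every 7 months. A trend that grows faster than exponentially would instead curve upward on the same logarithmic plot.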

However, some researchers have questioned whether it really is an exponential. Daniel Kokotajlo suggests it could be a superexponential, adding that this is mostly for theoretical reasons.

A superexponential trend would of course mean that we are in an even worse situation with respect to our ability to anticipate and react to AI capability gains than with an exponential trend — which is already very difficult.

Others have pointed to the increasing gradient towards the end of the graph as a sign of an accelerated growth regime.

Whatever the case may be, it is clear that a decent approximation of current data is that AI task horizons are growing exponentially — and this is a very dangerous situation for us to be in.

Future projections

If we take this trend at face value, it implies vast change in the near future, with the authors of the paper writing:

“Naively extrapolating the trend in horizon length implies that AI will reach a time horizon of >1 month (167 work hours) between late 2028 and early 2031.”
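As a rough sanity check on that range, the number of months needed to reach a given horizon under a constant doubling time can be computed directly. This sketch assumes the figures used earlier in this article (a 1-hour horizon as the starting point and a 7-month doubling time); the paper's own range reflects its uncertainty estimates, which this simple calculation does not reproduce.

```python
import math


def months_to_reach(target_hours, start_hours=1.0, doubling_months=7.0):
    """Months until the horizon reaches `target_hours` at a constant doubling time."""
    doublings = math.log2(target_hours / start_hours)
    return doublings * doubling_months


if __name__ == "__main__":
    months = months_to_reach(167)
    print(f"Doublings needed: {math.log2(167):.2f}")
    print(f"Time needed: {months:.1f} months (about {months / 12:.1f} years)")
```

Going from 1 hour to 167 hours takes a little over 7 doublings, or somewhat more than 4 years at 7 months per doubling, which falls inside the window the authors give.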

Of course, considering the possibility of month-long tasks may strain intuitions about what constitutes a task, but if it is defined as simply something that takes a human a month to do, it might make sense.

What if the measurements are wrong? Well, it doesn’t make a huge difference to the overall conclusions:

“Even if the absolute measurements are off by a factor of 10, the trend predicts that in under a decade we will see AI agents that can independently complete a large fraction of software tasks that currently take humans days or weeks.”
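The reason a large measurement error changes so little is that, on an exponential trend, a constant factor translates into a fixed delay. A minimal sketch, assuming the 7-month doubling time from the headline result (the function name is our own):

```python
import math


def delay_months_for_error(error_factor, doubling_months=7.0):
    """Delay implied if horizons are overestimated by `error_factor`."""
    return math.log2(error_factor) * doubling_months


if __name__ == "__main__":
    delay = delay_months_for_error(10)
    print(f"A 10x overestimate shifts the timeline by about {delay:.0f} months")
```

A factor of 10 corresponds to a little over 3 doublings, so under this assumption the same milestones arrive roughly two years later rather than not at all.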

And if it ends up being superexponential, this could happen much sooner.

If you’re interested in where AI could take us this year and beyond, check out our article on AI predictions for 2025.

Are you in London? You should come join us on Friday, March 28th, for an evening of drinks, food, and conversation! This gathering welcomes everyone — from AI specialists to tech journalists to policy wonks.

Plus ones are very welcome, but space is limited, so RSVP now! You can request to attend here: https://lu.ma/lumg3dgx

See you next week!

Tolga Bilge, Eleanor Gunapala, Andrea Miotti

I’ve seen a lot of coverage of this piece of research, but what I fail to understand is how, on the one hand, we’re supposed to believe task horizons are growing exponentially while, on the other, AI can’t beat Pokémon?

A 50% success rate is extremely modest, to put it mildly. In software engineering, cybersecurity, or research, a 50% chance of success on a task with no real-time oversight is practically unusable. At best, it points to "potential assistance," not autonomy. Also, the paper engages in naive extrapolation, assuming that exponential improvement continues indefinitely. Historical technology trends mostly follow logistic (S-curve) progressions. Constraints like computational resources, training data quality, and architectural bottlenecks are not addressed. Furthermore, "tasks that take a month" are ill-defined. Human tasks of this length often involve planning, communication, and multi-step dependencies that are not easily compressible into LLM inference. In short, nothing grows exponentially indefinitely: https://theafh.substack.com/p/the-last-day-on-the-lake?r=42gt5