AI Tracker #5: Even Mr Beast Isn't Safe

Even Mr Beast Isn’t Safe

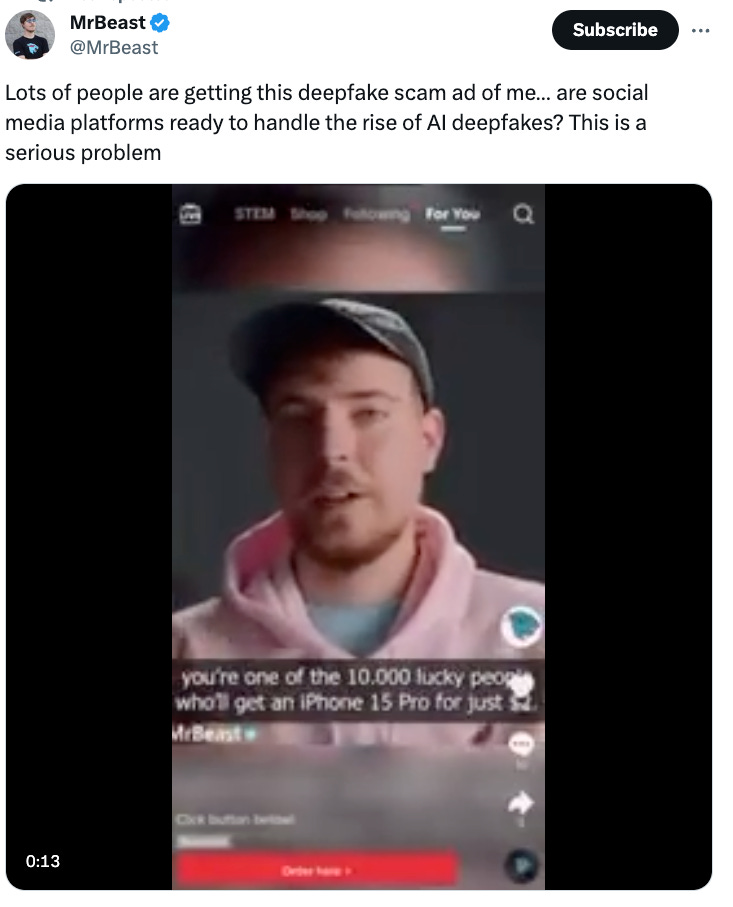

YouTube’s 191 million subscriber prodigal son, Mr Beast, has had a deepfake ad made of him offering “the world’s largest iPhone 15 giveaway.” Mr Beast had the following to say on Twitter: ‘Lots of people are getting this deepfake scam ad of me… are social media platforms ready to handle the rise of AI deepfakes? This is a serious problem’.

Is AI Safety Tractable?

Liron Shapira lists ways to get to what he calls ‘frame controlling':

‘1. PREACH THE BENEFITS OF SAFE AI Efficiency! Education! Economic growth! No more labor shortage! Cure cancer & aging! [Psst… if AI safety is intractable on a 5-10 year timeline, it doesn't matter how good a safe AI is…]

‘2. PREACH ABOUT “EMPIRICISM”

‘3. PREACH ABOUT GETTING “CLOSE TO THE DETAILS” What if OpenAI is violating safety principles they don't understand, and virtually guaranteed to fail at safety? [Psst... It doesn't help to get closer to the details of an obviously unsafe design…]

‘4. PREACH ABOUT RAPID ITERATION It's okay if you have ad-hoc security, because new tech development always needs to iterate!’

To which Holly Elmore tweeted the following:

We Don’t Yet Understand

Liron Shapira also posted a video to Twitter of a clip of Sam Altman says in an interview. the OpenAI CEO says of his models that: “When/why a new capability emerges… we don't yet understand."

Having seen the video, Liron replied at the end of the tweet: ‘In other words, we hold our breath and pray our next model comes out just right: more capable than the last one, but not an ASI that goes rogue. How is it legal to operate like this?’

Would You Risk It

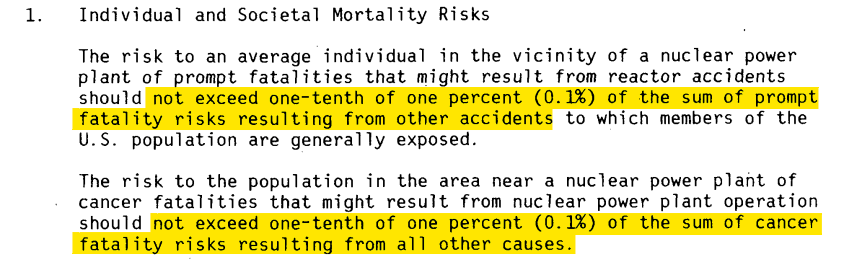

AI Tracker constant, Simeon, tweets about risk compliance with regards to nuclear.

‘Here's what it looks like to define a risk criteria that engineers must manage to comply with when they develop their system.

‘This is a doc from the Nuclear Regulatory Commission published in 1983 that contains: ‘1) the position and preferred thresholds of different actors (e.g. industry, the general public etc.) ‘2) the rationale for this number rather than any other number It is a pretty solid baseline that I hope we'll be able to beat for AI, the most transformative technology we ever created.’

Recursive Self-Improvement

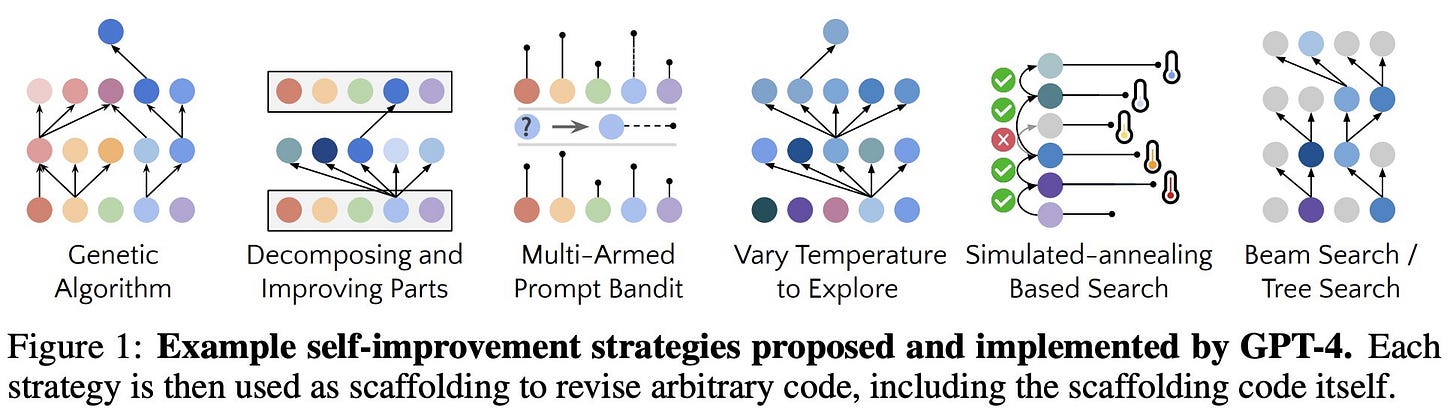

AInotkilleveryoneism is back. And this time, their tweet is about recursive self-improvement. They had the following to say about this new tweet thread about a new paper on the topic:

So it begins: “This paper reveals a powerful new capability of LLMs: recursive self-improvement”

-

“There’s 0 evidence foom (fast takeoff) is possible”

“Actually, ‘fast takeoffs’ happen often! Minecraft, Chess, Shogi... Like, an AI became ‘superintelligent’ at Go in just 3 days”

“Explain?”

“It learned how to play on its own - just by playing against itself, for millions of games. In just 3 days, it accumulated thousands of years of human knowledge at Go - without being taught by humans!

“It far surpassed humans. It discovered new knowledge, invented creative new strategies, etc. Many confidently declared this was impossible. They were wrong.”

“Well, becoming superhuman at Go is different from superhuman at everything”

“Yes, but there are many examples. It only took 4 hours for AlphaZero to surpass the best chess AI (far better than humans), 2 hours for Shogi, weeks for Minecraft (!), etc.”

“But those are games, not real life”

“AIs are rapidly becoming superhuman at skill after skill in real life too. Sometimes they learn these skills in just hours to days. They’re leaving humans in the dust.

“We really could see an intelligence explosion where AI takes over the world in days to weeks. Foom isn’t guaranteed but it’s possible.

“We need to pause the giant training runs because each one is Russian Roulette.”

* * *

If we miss something good, let us know: send us tips here. Thanks for reading AGI Safety Weekly! Subscribe for free to receive new AI Tracker posts every Friday.