Artificial Guarantees 2: Judgment Day

The future is not set, nor are commitments made by AI companies.

Last month we announced (Substack) a new project, Artificial Guarantees, a collection of inconsistent statements, baseline-shifting tactics, and promises broken by major AI companies and their leaders showing that what they say doesn't always match what they do.

We’ve been continuing to collect examples of this type of behavior, and below have a new selection of interesting cases to share with you. You can get our full list at controlai.com/artificial-guarantees

Do you have an example that we missed? Join our Discord to add to the collection.

What They Say:

OpenAI is cautious with the creation and deployment of their models

What They Do:

OpenAI is “pulling up” releases in response to competition

February 24 2023 - OpenAI

In a 2023 blogpost, Sam Altman wrote that OpenAI is becoming increasingly cautious with the creation and deployment of their models, and that if deployments had the effect of accelerating an unsafe AI race, OpenAI might significantly change their plans around continuous deployment, implying they might slow or halt deployments of their AI systems.

Continuous deployment is OpenAI’s idea of continuously deploying more powerful models, ostensibly in order to prepare society for the effects of AI. Some might compare this strategy to boiling a frog, or note that OpenAI are anyway incentivized to do this to help secure investments.

January 27 2025 - Sam Altman (Twitter)

In response to the release of DeepSeek’s R1, a Chinese AI model comparable to OpenAI’s recently deployed o1, Sam Altman welcomed the competition, saying OpenAI would “pull up some releases”:

January 31 2025 - OpenAI

OpenAI then deployed o3-mini a few days later: “the newest, most cost-efficient model in our reasoning series, available in both ChatGPT and the API today. Previewed in December 2024, this powerful and fast model advances the boundaries of what small models can achieve”.

February 2 2025 - OpenAI

And days later deployed Deep Research, currently available only on OpenAI’s $200/month Pro subscription tier: “a new agentic capability that conducts multi-step research on the internet for complex tasks. It accomplishes in tens of minutes what would take a human many hours.”

Deep Research was deployed without releasing a system card, neither for Deep Research nor for the full version of o3 upon which it is based. OpenAI say that o3 and Deep Research have been safety tested, and that they will share more details when access is widened to the ChatGPT Plus subscription tier.

What They Say:

Dario Amodei warns of the dangers of US-China AI racing

What they Do:

Dario Amodei proposes a dangerous race, advocating the use of recursive self-improvement

November 2017 - Dario Amodei (AI and Global Security Summit)

Dario Amodei warned of the dangers of US-China AI racing, saying “that can create the perfect storm for safety catastrophes to happen”.

Full video:

October 2024 - Dario Amodei

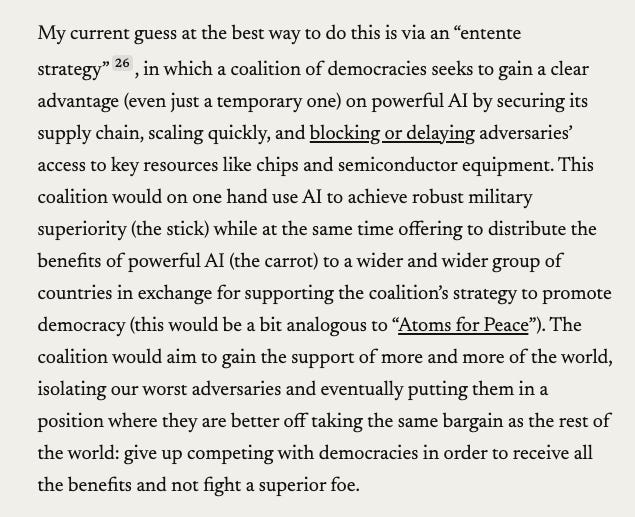

Anthropic CEO Dario Amodei argues in his “Machines of Loving Grace” essay that an “entente” of democracies should race ahead on AI development to gain a strategic advantage:

January 2025 - Dario Amodei

In Amodei’s essay “On DeepSeek and Export Controls”, he openly calls for the use of recursive self-improvement, an incredibly dangerous process to initiate that could result in human extinction, in order for the US and allies to “take a commanding and long-lasting lead on the global stage”.

What They Say:

Sam Altman argues that building AGI fast is safer, because it avoids a compute overhang

What they Do:

OpenAI announces Stargate, a $500 billion AI infrastructure project, and Sam Altman says “we just need moar compute”

February 24 2023 - OpenAI

In a 2023 blogpost, Sam Altman argued that building AGI fast is safer, because there isn’t too much compute around (a compute overhang).

This refers to the idea that if there is a large compute overhang when AGI is first built, excess compute resources will be able to be used to rapidly improve it, leading to very dangerous outcomes.

January 21 2025 - OpenAI

OpenAI announces Stargate, “a new company which intends to invest $500 billion over the next four years building new AI infrastructure for OpenAI in the United States.“

January 21 2025 - Sam Altman (Twitter)

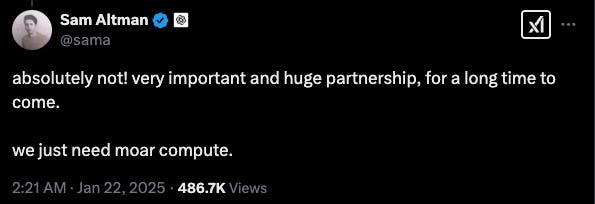

Sam Altman says “we just need moar compute.”

What They Say:

Dario Amodei says that governments around the world should create testing and auditing regimes

What They Do:

Anthropic lobbies against testing and auditing proposed by California regulators

1 November 2023 - Anthropic

Anthropic's CEO Dario Amodei, speaks at the UK AI Safety Summit. At the event he states that "accountability is necessary" and "as risk increases, we expect that stronger forms of accountability will be necessary." He also calls on governments around the world to create "well-crafted testing and auditing regimes with accountability and oversight".

23 July 2024 - Axios

Anthropic refuses to support proposed legislation (SB 1047) in California which would enforce AI safety standards and without significant amendments

What They Say:

Anthropic will not work to advance state-of-the-art capabilities

What They Do:

Releases Claude 3.5 Sonnet and states that it “raises the industry bar for intelligence, outperforming competitor models… on a wide range of evaluations”.

8 March 2023 - Anthropic

In a blog post on AI safety, Anthropic claim they "do not wish to advance the rate of AI capabilities progress"

4 March 2024 - Anthropic

Anthropic announces Claude 3 model family which includes "three state-of-the-art models"

In a blog post Anthropic claims that "Claude 3.5 Sonnet sets new industry benchmarks for graduate-level reasoning (GPQA), undergraduate-level knowledge (MMLU), and coding proficiency (HumanEval). It shows marked improvement in grasping nuance, humor, and complex instructions, and is exceptional at writing high-quality content with a natural, relatable tone."

Thank you for reading our Substack. If you liked this post, we’d encourage you to restack it, or forward the email to others you think might be interested, or share it on social media.

Last week, we launched a new campaign, bringing together UK politicians in a call for binding regulations on powerful AI, acknowledging the AI risk of extinction to humanity.

Take action: if you live in the UK you can use our tool here to email your MP about signing our campaign statement — it takes less than a minute.

Artificial Guarantees is an ongoing project. Due to the sheer volume of these inconsistencies, and the speed at which things are moving, we haven’t been able to build an exhaustive list. So we want your help! If you have a tip for us, join our Discord, we’d love to hear from you!

See you next week!

Eleanor Gunapala , Tolga Bilge, Andrea Miotti

The U.S.'s current "entente strategy":

1. Race as fast as possible to the moment before AGI;

2. Correctly anticipate when the moment just before AGI occurs;

3. Stop on a dime at the precipice just before AGI occurs;

4. Try to solve alignment using whatever temporal lead the U.S. has in the race by uploading AI researchers minds into the machine and then running centuries of simulated scientific method in the few weeks/months the U.S. remains in the lead.

5. ????????

6a. If alignment is impossible or if we fail to correctly solve alignment in time, all biological life on Earth is eliminated by misaligned AGI in 98% of possible futures.

6b. If alignment is possible and we solve solve alignment in time, the U.S. will use aligned AGI as a superweapon to forcibly establish global hegemony in 1% of possible futures.

6c. If alignment is impossible and we realize it in time to stop the race, humanity decides to limit progress to strong narrow AI and a global ban on developing AGI is successfully enforced in 1% of possible futures.

If this grand strategy sounds retarded to you, it is because you have common sense.

The entente strategy maximizes the possibility of a misaligned AGI (by not allowing enough time for scientific method to find a certain solution to alignment) and and minimizes the possibility of preventing a lone wolf from aligning to their specific intent (by allowing privacte actors to not only participate in the race, but to lead it). The entente strategy is the worst of both worlds and is fatally flawed. As a result, we are racing off a cliff into mutually assured extinction.

The entente strategy presumes the race-risk dynamic is an inescapable fact of reality. There are other viable, less-existentially-risky strategies, such as a global moratorium on private frontier AI research; global cooperation and joint control over government frontier research; and universally assured destruction against all entities that attempt to cheat.

We need you in the U.K. to create friction and opposition to the current U.S. grand strategy, so that we here in the U.S. can try to get the entente strategy reversed before we fly off the cliff.

Probably worth mentioning that Anthropic did ultimately support SB 1047. Given their statements I don’t think it’s fair to hold them to a standard of “support every AI regulation bill regardless of its merits”