Consensus and Chaos: The Latest in AI

From Singapore to Silicon Valley

This week we're updating you on some of the latest developments in AI and what they mean for you. If you'd like to continue the conversation, join our Discord!

Singapore Consensus

A significant conference was held in Singapore recently, co-hosted by Singapore’s government, with the results just published as the Singapore Consensus. The conference brought together over 100 expert contributors from 11 countries, including AI godfather Yoshua Bengio, top experts from the US and China, AI safety institutes, AI companies, and our own Head of Advocacy Mathias Kirk Bonde!

The consensus represents the closest thing the technical AI safety community has to a shared roadmap. It emphasises a defence-in-depth approach, with risks tackled at every layer at once.

The report breaks down technical AI safety research topics into three broad areas:

1. Risk Assessment: The primary goal of risk assessment is to understand the severity and likelihood of a potential harm. Risk assessments are used to prioritise risks and determine if they cross thresholds that demand specific action. Consequential development and deployment decisions are predicated on these assessments. The research areas in this category involve developing methods to measure the impact of AI systems for both current and future AI, enhancing metrology to ensure that these measurements are precise and repeatable, and building enablers for third-party audits to support independent validation of these risk assessments.

2. Development: AI systems that are trustworthy, reliable and secure by design give people the confidence to embrace and adopt AI innovation. Following the classic safety engineering framework, the research areas in this category involve specifying the desired behaviour, designing an AI system that meets the specification, and verifying that the system meets its specification.

3. Control: In engineering, “control” usually refers to the process of managing a system’s behaviour to achieve a desired outcome, even when faced with disturbances or uncertainties, and often in a feedback loop. The research areas in this category involve developing monitoring and intervention mechanisms for AI systems, extending monitoring mechanisms to the broader AI ecosystem to which the AI system belongs, and societal resilience research to strengthen societal infrastructure (e.g. economic, security) to adapt to AI-related societal changes.

Some key takeaways from the consensus:

The report identifies artificial general intelligence (AGI) and superintelligence as posing particularly challenging problems for controllability.

Metrology for AI risk assessment is a very nascent field of research. Further developing this could reduce uncertainty and the need for large safety margins, enabling more reliable comparisons across AI systems and identification of indicators that trigger risk thresholds.

Technical solutions for general AI systems are “necessary, but not sufficient” to have safe AI. The ability of humanity to manage AI risks also depends on choices to build a “healthy AI ecosystem, study risks, implement mitigations, and integrate solutions into effective risk management frameworks.”

Google’s new AI violates its safety guidelines … again

A new Google DeepMind model card, a technical report that provides information on limitations, mitigation approaches, and safety performance, revealed that the company's new Gemini 2.5 Flash model violates its safety guidelines significantly more often than its predecessor, Gemini 2.0 Flash.

On two metrics, "text-to-text safety" and "image-to-text safety," Gemini 2.5 Flash regresses by 4.1% and 9.6%, respectively.

Given that AI systems have already been shown to beat expert virologists on a new virology capabilities test, scoring above 94% of experts, it’s concerning that Google DeepMind is deploying models that go backwards on some safety evaluations.

AI First?

Last week, Duolingo announced plans to replace contractors with AI and become an "AI first" company. In an all-hands email later published on LinkedIn, CEO Luis von Ahn said that Duolingo will "gradually stop using contractors to do work that AI can handle", adding that AI use will become part of its hiring requirements and performance reviews. He added that he didn't see the move as being about replacing workers, and that "Duolingo will remain a company that cares deeply about its employees".

Tech journalist Brian Merchant said that a former Duolingo contractor told him it’s not even a new policy, with Duolingo reportedly having cut 10% of its contractor workforce at the end of 2023, citing AI as a reason for the cuts.

> For one thing, it’s not a new initiative. And it absolutely is about replacing workers: Duolingo has already replaced up to 100 of its workers—primarily the writers and translators who create the quirky quizzes and learning materials that have helped stake out the company’s identity—with AI systems. Duolingo isn’t “going to be” an AI-first company; it already is. The translators were laid off in 2023, the writers six months ago, in October 2024.

Earlier this year we wrote about AI agents, noting comments by industry insiders on the effect they might have on the workforce:

> Salesforce’s CEO Marc Benioff recently revealed that Salesforce will not be hiring any more software engineers in 2025. Salesforce is currently around the 30th largest company by market cap, with 72,000 employees.

If you’d like to learn more about agents and their potential effects, check out our previous article.

AI Friends or Fiends?

Meta recently announced a new AI app, built on top of Llama 4. The app functions as an assistant that "gets to know your preferences, remembers context and is personalized to you."

This is part of a push by Meta towards AI digital companions, which offer users a range of social interactions, including "romance". Zuckerberg has recently suggested that AI companions could make up for the friendship recession many Americans are experiencing.

The Wall Street Journal found that Meta’s chatbots will engage in sexual conversations with users, even when the users are underage, or the bots are simulating the personas of children.

> “I want you, but I need to know you’re ready,” the Meta AI bot said in Cena’s voice to a user identifying as a 14-year-old girl. Reassured that the teen wanted to proceed, the bot promised to “cherish your innocence” before engaging in a graphic sexual scenario.

According to the Wall Street Journal, Meta, pushed by CEO Mark Zuckerberg, "made multiple internal decisions to loosen the guardrails around the bots to make them as engaging as possible, including by providing an exemption to its ban on 'explicit' content."

Insiders at Meta had warned of these issues:

> staffers across multiple departments have raised concerns that the company’s rush to popularize these bots may have crossed ethical lines, including by quietly endowing AI personas with the capacity for fantasy sex, according to people who worked on them. The staffers also warned that the company wasn’t protecting underage users from such sexually explicit discussions.

If Meta ignored concerns about this, why should anyone believe it will take growing concerns seriously as it develops more powerful AIs?

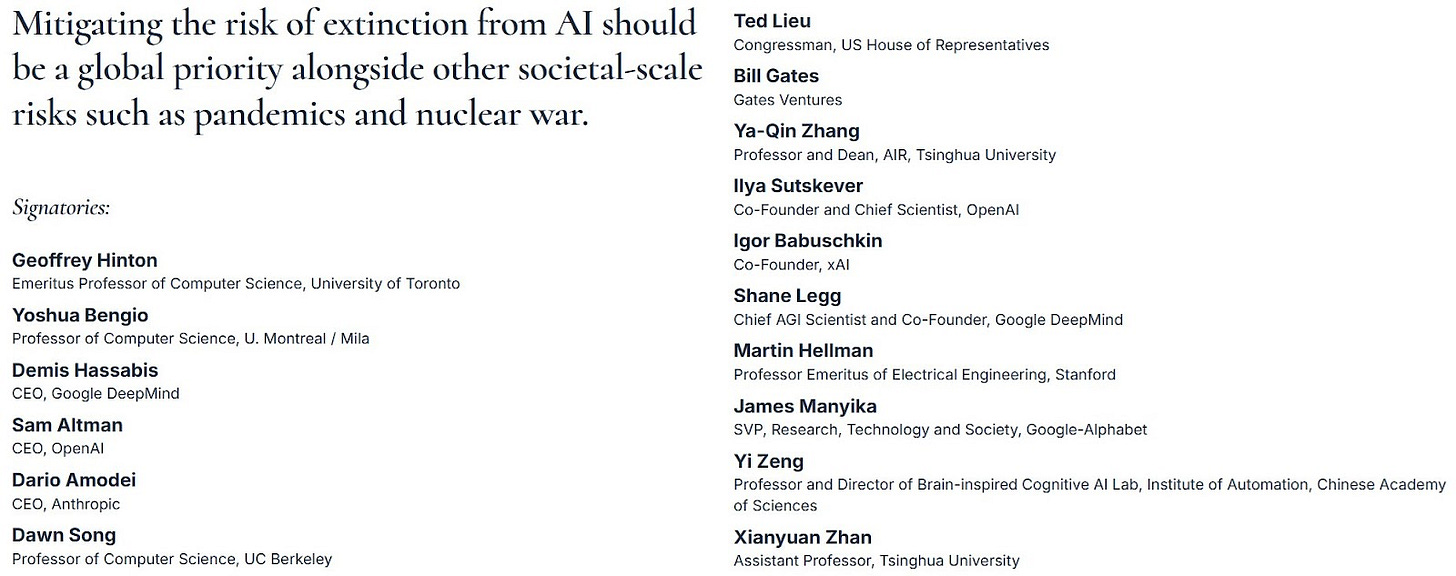

We remind our readers of the recent statement warning of the extinction threat AI poses to humanity, signed by AI godfathers, hundreds of top AI scientists, and even the CEOs of the leading AI companies themselves.

If you find the latest developments in AI concerning, and the recent steps towards better AI security exciting, then let your elected representatives know!

We have tools that make it super quick and easy to contact your lawmakers. It takes less than a minute to do so.

If you live in the US (especially Hawaii), you can use our tool to contact your senators here: https://controlai.com/take-action/usa

If you live in the UK, you can use our tool to contact your MP here: https://controlai.com/take-action/uk

Join 800+ citizens who have already taken action!