Current AI Policies Won't Be Enough To Stop Deepfakes

Deepfake abuses will proliferate until we can regulate the whole supply chain

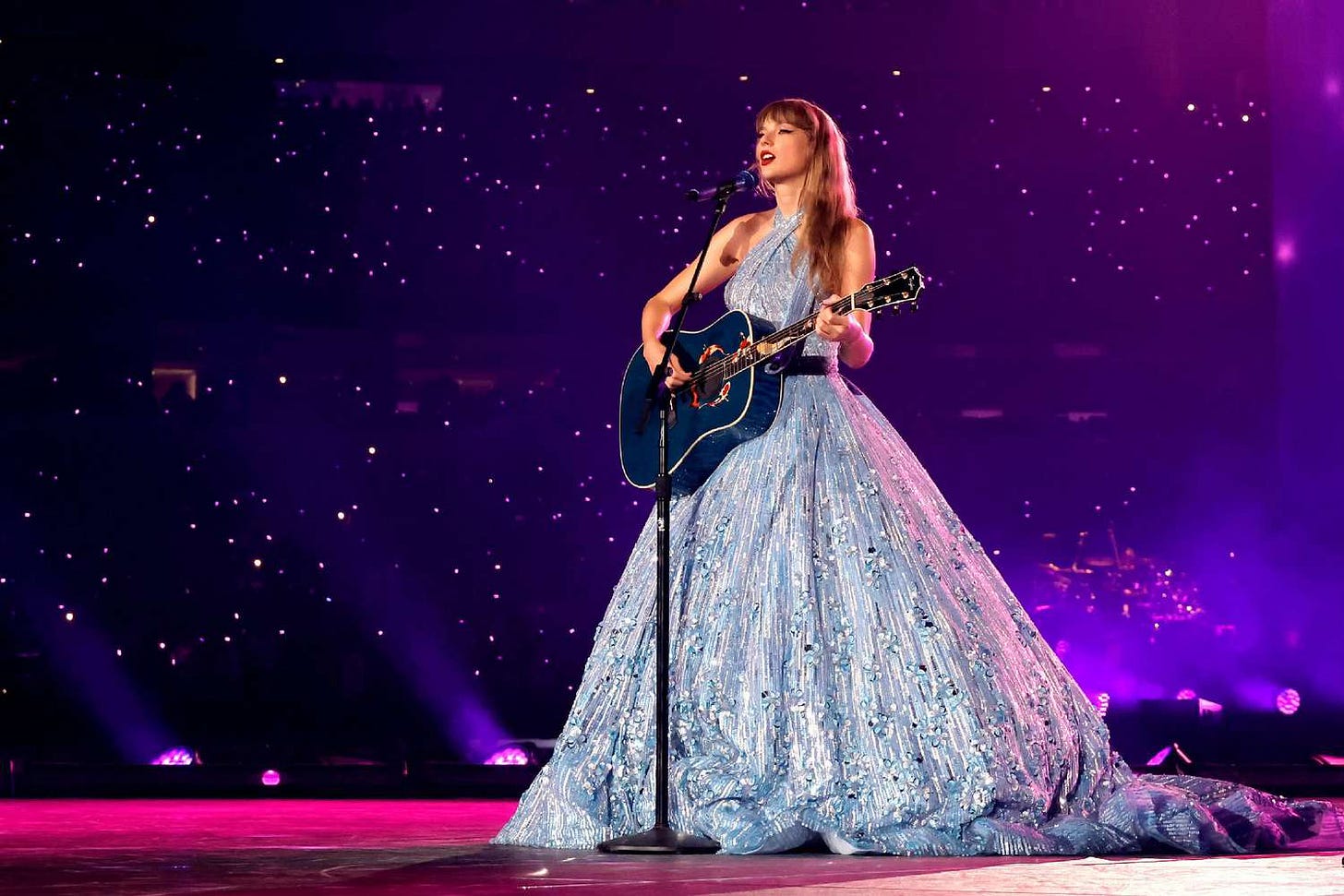

The circulation of explicit deepfake images of Taylor Swift on the internet yesterday has revealed how decentralised, accessible and uncontrolled deepfake technology is. It also revealed how ineffective content moderation by online platforms will be at stopping the proliferation of this kind of content. Websites quickly purged the images from their platforms, but even the narrow window of time during which the images circulated was likely long enough to enable their continued spread.

Unsurprisingly, reports now state that Swift is considering legal action in response to the spread of the images online. But what legal tools would Swift actually have at her disposal?

There are currently multiple legislative attempts afoot on both sides of the Atlantic to criminalise sexually explicit deepfakes. In the United States, Congressman Joseph Morelle has sponsored the Preventing Deepfakes of Intimate Images Act. This bill would make it a crime to ‘intentionally disclose’ pornographic deepfake images of someone. The bill inherits its definition of disclosure from the 2022 Violence Against Women Act, and this definition would encompass not just the sharing of sexually explicit deepfake images, but also the hosting of such images by online platforms.

On the other side of the Atlantic, in the United Kingdom, legislators have pursued similar means of attempting to ban deepfakes. The Online Safety Act 2023 made the sharing of sexually explicit deepfake images a criminal offence. Fearful that this legislation would be insufficient to mitigate the threat of deepfakes, MPs Jess Phillips and Alex Norris attempted to add amendments to the 2024 Criminal Justice Bill that would criminalise both the hosting and the creation of pornographic deepfake content. But Phillips and Norris have been encouraged to abandon these amendments on the grounds that further legislation would be superfluous.

Yet legislation that punishes only the publication and distribution of deepfake content will not be able to meaningfully dam the oncoming tidal wave of deepfakes. Legislation that doesn’t seek to prevent the very creation of deepfakes will be essentially gutless.

One reason for this is that the very creation of deepfake content is definitionally and inherently non-consensual. This is where the ‘revenge porn’ framework is inadequate to the task of prohibiting deepfakes. With ‘revenge porn’, the initial creation and possession of the intimate images is presumed to be private and consensual; it is the sharing of the content with third parties that is non-consensual and a violation of privacy. In the case of Taylor Swift, however, the sexually explicit deepfakes had already been created without her consent, and would have been non-consensual even if they had been kept for the private consumption of one individual user.

But a more fundamental issue is the widespread availability of deepfake technology and the sheer ease with which deepfakes can be created. Even the swiftest content moderation enforcement by online platforms will do nothing to diminish the accessibility of the software used to create these deepfakes. The software programs used to create deepfakes are a brief Google search away. They require no coding or programming ability on the part of the user. To make a deepfake video of a particular individual, you need only about 10 seconds’ worth of footage of the person in question.

If public policy is to meaningfully confront the threat of deepfakes, it will need to regulate the entire supply chain of the technology. Until legislation can prevent deepfake creation itself, Taylor Swift and many others will remain vulnerable to future deepfake abuses. The only reliable way to prevent deepfakes from causing more harm is to control deepfake technology itself.
