Welcome to the ControlAI newsletter! This week we have another round of updates for you, covering Zuckerberg’s new superintelligence agenda, a drive for cooperation on AI, tech lobbying, and more.

To continue the conversation, join our Discord. If you’re concerned about the threat posed by AI and want to do something about it, we invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.


Personal Superintelligence

In a new blog post titled “Personal Superintelligence”, Mark Zuckerberg says AI systems are starting to improve themselves, and superintelligence is now in sight.

Zuckerberg outlines what he describes as Meta’s vision to bring “personal” superintelligence to everyone. He claims that a choice can somehow be made so that superintelligence becomes a tool for “personal empowerment” rather than a force focused on “replacing large swaths of society.”

He doesn’t explain how he intends to ensure this, and commentators online have noted that it doesn’t seem like he’s spent much more than a few minutes thinking about this vision. Perhaps “personal superintelligence” is just a marketing gloss on a venture that isn’t any different from those being pursued by other AI companies. Referring to a section of the post where Zuckerberg implies that superintelligence might not amount to much more than smart glasses, Eliezer Yudkowsky comments:

Zuck would like you to be unable to think about superintelligence, and therefore has an incentive to redefine the word as meaning smart glasses.

The idea Yudkowsky is getting at is that because artificial superintelligence — AI vastly smarter than humans — is such a world-shattering and dangerous technology, Zuckerberg would prefer to blur the lines on what the word means, leaving people without the language to oppose its development.

In any case, whether it’s a marketing gimmick or a linguistic offensive, what’s clear is that Zuckerberg is making a huge bet that artificial superintelligence is within reach. A couple of weeks ago we reported that Meta is building a 5 gigawatt datacenter in Louisiana, with Zuckerberg comparing its size to that of Manhattan.

We’ve also seen reports that he’s offering compensation packages in the hundreds of millions of dollars to poach researchers from other AI companies to work on his new superintelligence project. This week it was revealed that he even made an offer of over a billion dollars (you read that correctly) for a single researcher. The offer was declined!

Zuckerberg’s beliefs about the closeness of superintelligence are in line with those of many experts who expect superintelligence could arrive within the next 5 years.

But those same experts (Nobel Prize winners like Hinton and Hassabis, the godfathers of AI, and the CEOs of top AI companies) have also repeatedly warned that AI poses an extinction threat to humanity.

Zuckerberg does make a passing allusion to the danger of AI:

That said, superintelligence will raise novel safety concerns. We'll need to be rigorous about mitigating these risks and careful about what we choose to open source.

Observers noted that this is a sharp about-turn from what he was saying this time last year, when he published his “Open Source AI is the Path Forward” manifesto.

But the important point here is that the big danger, the extinction threat from AI, comes from the development of artificial superintelligence. This is exactly what Zuckerberg and other AI CEOs are racing to develop.

Nobody has any idea how to ensure that smarter-than-human AIs are safe or controllable. As the Future of Life Institute’s independent expert panel recently found, none of these AI companies have anything like a coherent plan for how they'll prevent superintelligence from ending in disaster.

Global AI Cooperation

At the World Artificial Intelligence Conference, Chinese Premier Li Qiang proposed establishing an international organization to support global cooperation on AI.

Li noted that the risks of AI have caused widespread concern, and said there’s an urgent need to build consensus on how to strike a balance between development and security, adding that technology must remain a tool to be harnessed and controlled by humans.

He also spoke about the potential benefits of AI, and said China wanted to share its experience and products with other countries. He said he wanted China to be more open in sharing open source technology, even though open source (or open-weight) frontier AI models currently cannot be secured against misuse by bad actors.

In the last year or so, beginning with Leopold Aschenbrenner’s essay “Situational Awareness”, a dangerous narrative has emerged in AI discourse: that the US and China are in a winner-takes-all race to superintelligence, in which the winner would gain supremacy over the world and the future. This narrative has notably been promoted by AI CEOs such as Sam Altman and Dario Amodei.

The principal danger of such a race is that participants inevitably prioritize rapid development over ensuring that AI systems are safe and controllable (Max Tegmark has called it a “suicide race”). Such a race could also provoke participants into taking extreme measures to obstruct each other’s development efforts.

In A Narrow Path, the policy package we developed to ensure the survival and flourishing of humanity, we include the design of an international framework that we believe could avoid such dangerous dynamics.

It doesn’t make sense to abandon efforts to cooperate on the risks of AI before they’ve even been tried.

More AI News

Big tech lobbying against AI regulation

State Senator Andrew Gounardes, who together with Alex Bores wrote New York’s RAISE Act to regulate AI and saw it pass earlier this year, posted on Twitter that the tech and venture capital communities are “working overtime” to stop Governor Kathy Hochul from signing the legislation into law.

Gounardes was responding to Sam Altman’s recent remarks that AI biothreat capabilities are getting “quite significant” and are “a very big thing coming”.

As we covered a few weeks ago, the RAISE Act would require developers of the most powerful AI systems to write and implement Safety and Security Protocols and to publish redacted versions of them. While primarily focused on transparency and incident reporting, the bill also gives the New York Attorney General the power to fine companies for violations (up to $10 million for a first violation and up to $30 million for subsequent ones), including cases where an AI developer’s technology causes “critical harm”, defined as an AI system causing at least 100 deaths or $1 billion in damages.

The bill also bans deploying a frontier model if it would create an unreasonable risk of critical harm, and requires annual safety reviews.

Human-level AI is not inevitable

Garrison Lovely wrote a great article in the Guardian highlighting the extinction threat that AI poses to humanity, calling for a civilization-scale effort to prevent it, and explaining how humanity has tackled civilization-scale problems before.

For countless other species, the arrival of humans spelled doom. We weren’t tougher, faster or stronger – just smarter and better coordinated. In many cases, extinction was an accidental byproduct of some other goal we had. A true AGI would amount to creating a new species, which might quickly outsmart or outnumber us. It could see humanity as a minor obstacle, like an anthill in the way of a planned hydroelectric dam, or a resource to exploit, like the billions of animals confined in factory farms.

Altman, along with the heads of the other top AI labs, believes that AI-driven extinction is a real possibility (joining hundreds of leading AI researchers and prominent figures).

Given all this, it’s natural to ask: should we really try to build a technology that may kill us all if it goes wrong?

Alignment Funding

The UK’s AI Security Institute is committing £15 million to alignment research.

Nothing Personnel

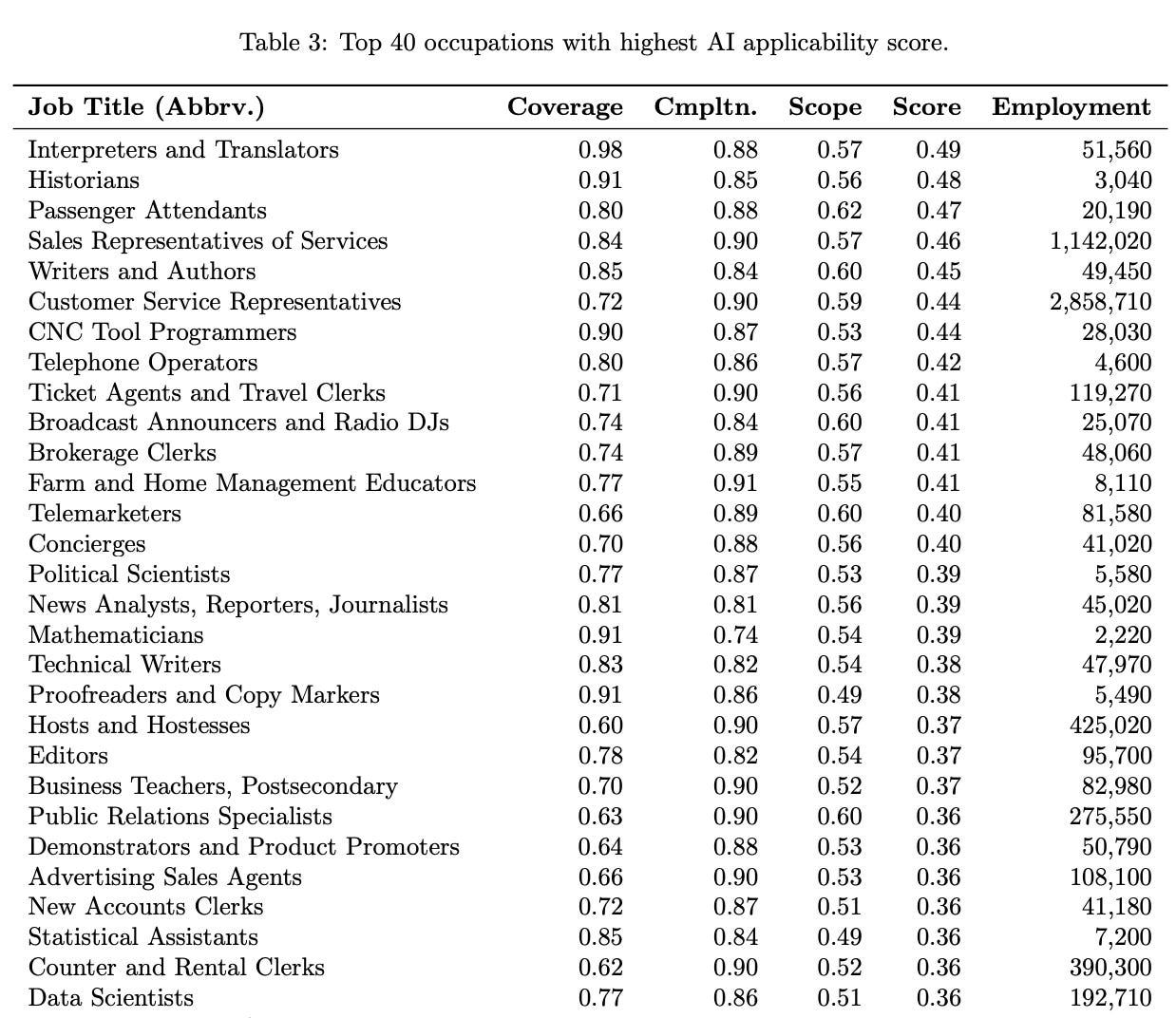

In a new paper, Microsoft revealed a list of jobs most vulnerable to replacement by AI.

The effect of AI on the jobs market is one of several issues that AI CEOs aren’t speaking clearly about. Anthropic CEO Dario Amodei recently said that AI could soon wipe out half of all entry-level white-collar jobs. However, OpenAI CEO Sam Altman recently suggested on Theo Von’s podcast that historians would still have jobs, though he couldn’t explain how. He’s also been saying that “some” areas of the jobs market will get wiped out, but this seems like an understatement.

The effect of AI on jobs could be tremendous, and there are already suggestions that AI may be having a significant impact: UK university graduates currently face the hardest job market since 2018, and job vacancies have plummeted by over a third since the launch of ChatGPT.

One CEO told Gizmodo:

As a CEO myself, I can tell you, I’m extremely excited about it. I’ve laid off employees myself because of AI. AI doesn’t go on strike. It doesn’t ask for a pay raise. These things that you don’t have to deal with as a CEO.

But jobs aren’t even the worst of it. AI’s ability to automate large sections of the workforce demonstrates how rapidly its capabilities are advancing toward the end goal of artificial superintelligence, which could literally wipe us out.

Microsoft also reached a $4 trillion valuation this week.

Datacenters

The Washington Post reports that the AI datacenter buildout is driving up electricity demand, and prices along with it. In some parts of the US, a typical household’s monthly electricity bill has risen by as much as $27 this summer.

Meanwhile, in Europe, several “AI gigafactories” are being planned, and OpenAI’s Stargate AI infrastructure project is expanding to Norway.

EU code of practice

Google has said it will sign the EU’s AI code of practice, while xAI said it would sign only certain parts of it. Meta has refused to sign.

The code of practice is a voluntary framework designed to help companies comply with the EU’s AI rules. It requires AI developers to “regularly update documentation about their AI tools and services” and bans them from training AI on pirated content.

Take Action

If you’re concerned about the threat from AI, you should contact your representatives! Our contact tools let you write to them in as little as 17 seconds: https://controlai.com/take-action

Thank you for reading our newsletter!

Tolga Bilge, Andrea Miotti