The Campaign

Keep Humanity in Control

Welcome to the ControlAI Newsletter! This week we’re bringing you an important update on our work and the work of others fighting to keep humanity in control.

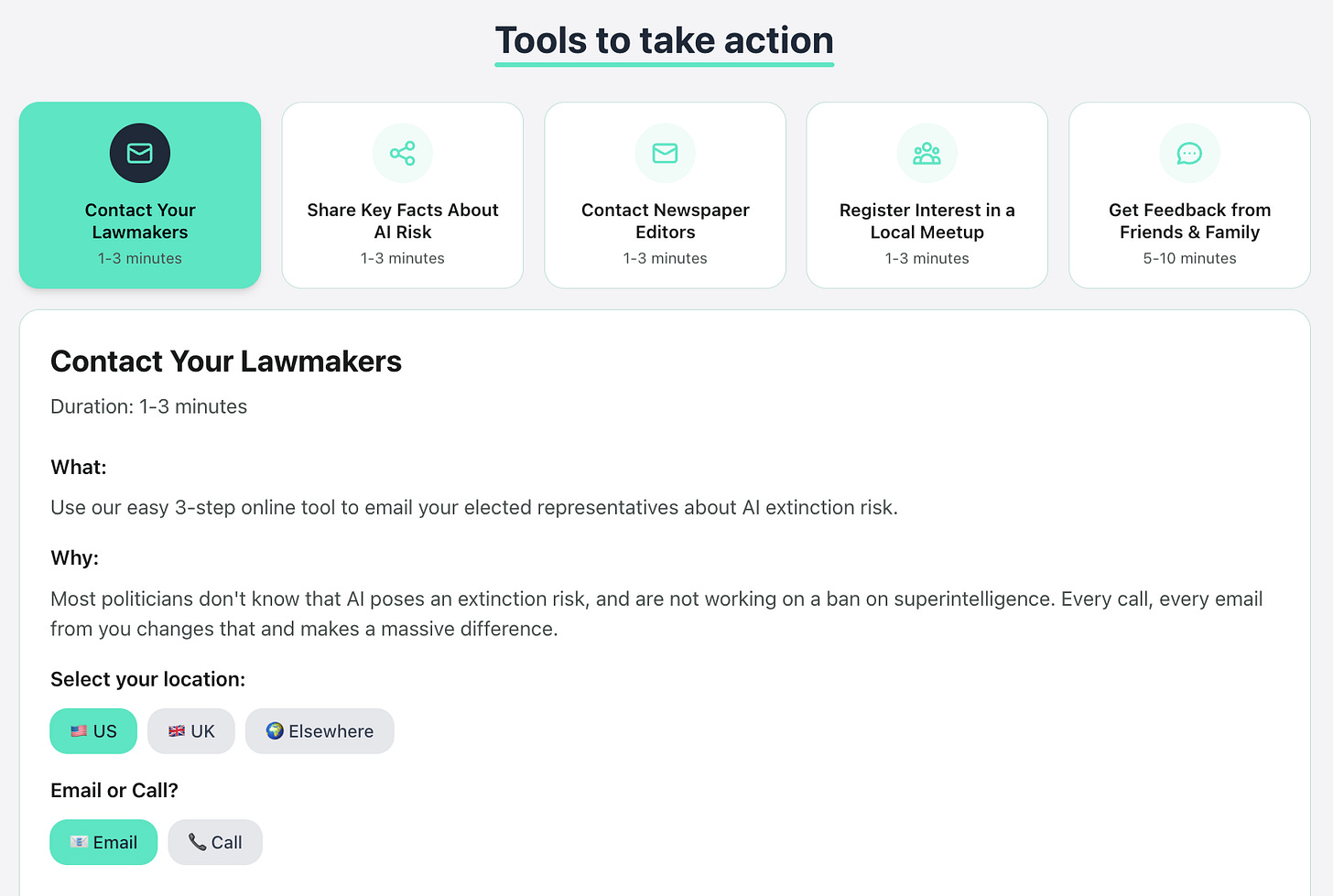

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

Our New Campaign

On Friday, we launched a new campaign (https://campaign.controlai.com), creating a central hub where concerned citizens, civil society organizations, and public figures can learn about the extinction risk posed by AI and join the call to prohibit the development of artificial superintelligence.

We have support from top experts spanning a range of fields, including Yuval Noah Harari, former OpenAI Dangerous Capability Evaluations Lead Steven Adler, and former UK Defence Secretary the Rt Hon. the Lord Browne of Ladyton.

The premise of the campaign is simple: we give the public the information they need to understand the problem, and the tools citizens need to make a difference.

Nobel laureates, hundreds of top experts, and even the CEOs of the top AI companies are warning that AI poses a risk of extinction to humanity.

In 2023, they jointly stated that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

The risk comes from the development of artificial superintelligence: AI vastly smarter than humans. When AI godfather and Nobel Prize winner Geoffrey Hinton speaks publicly, scarcely a talk goes by without him mentioning this. He says his main mission is to warn people about how dangerous AI could be.

It’s worth underscoring that when these experts say extinction, they’re not using a metaphor. They really do mean that everyone, everywhere on Earth, could die from the consequences of artificial superintelligence.

Despite these warnings, and despite even the CEOs of the top AI companies acknowledging the risk, AI companies such as OpenAI, Anthropic, Google DeepMind, xAI, and Meta are explicitly aiming to build superintelligence. And they’re in a dangerous race against each other to do it as fast as possible.

You might be wondering: how and why could superintelligence wipe us out? You should check out our recent article on this!

What’s particularly worrying is that many experts, including those actually working to build this technology, believe it could be developed within the next five years.

We can solve this problem. By prohibiting the development of superintelligence, we can prevent this risk.

However, the public, civil society, and political leadership have limited awareness of how serious this is, and even those who are aware have lacked clear ways to make a difference.

That’s what we’re changing with our new campaign. It provides a home base where citizens can learn about the problem, along with easy-to-use civic engagement tools to help you effect positive change!

Together we can solve this problem.

Check out our site and join the campaign to take back control of our future!

https://campaign.controlai.com

We’ve also produced a beautiful video for the campaign that you can watch!

If Anyone Builds It, Everyone Dies

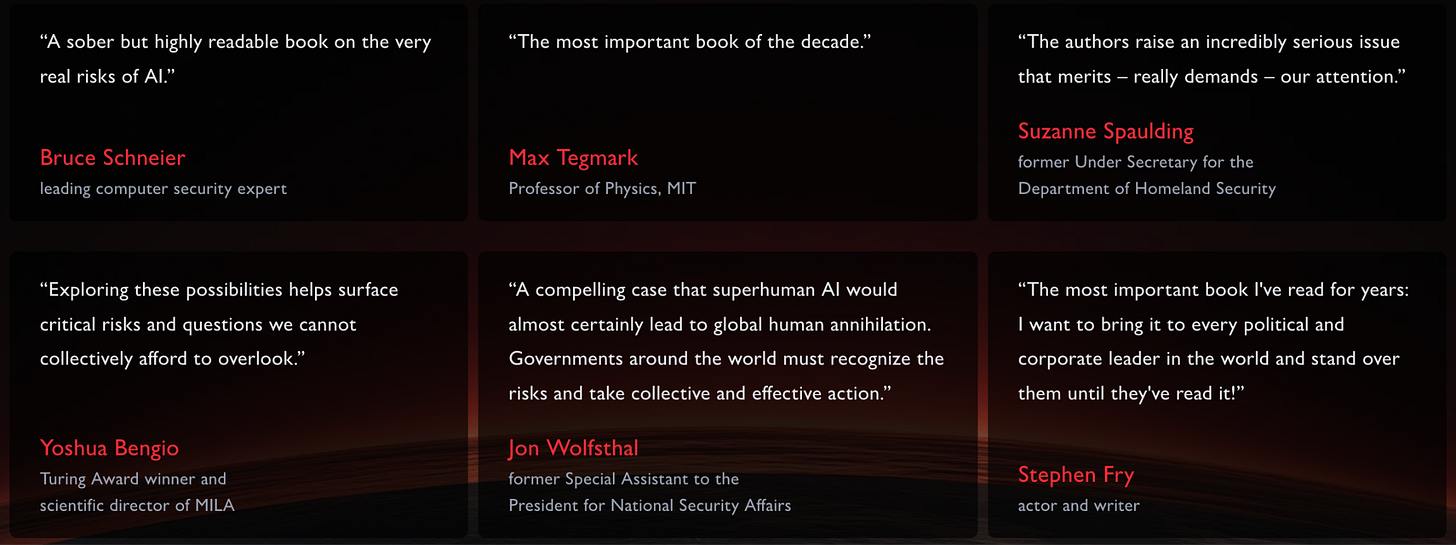

On Tuesday, the much-anticipated book by top AI experts Eliezer Yudkowsky and Nate Soares was released!

“If Anyone Builds It, Everyone Dies” clearly explains something insiders already know but that should become common knowledge: the development of superintelligence threatens us with extinction.

The book has received a series of positive reviews from leading experts in AI and beyond. These reviewers include Emmett Shear, who was briefly the interim CEO of OpenAI, former Fed Chairman Ben Bernanke, and former AAAI President Bart Selman.

Selman says that the book is "essential reading for policymakers, journalists, researchers, and the general public."

In his review in The Times, Tom Whipple noted:

“What gives me pause is that, very unusually, even those who advocate for and build AI accept there is a decent risk — in the double digit percentages — that it will be a catastrophe. Yet they go ahead.”

Jeffrey Ladish, Executive Director of Palisade Research (whose researchers found that OpenAI’s o3 AI will sabotage attempts to shut it down even when instructed not to), commented that he doesn’t think Yudkowsky and Soares hold an extreme view:

“If we build superintelligence with anything remotely like our current level of understanding, the idea that we retain control or steer the outcome is AT LEAST as wild as the idea that we'll lose control by default.”

Eliezer Yudkowsky has been warning of the danger of superintelligence for decades. It’s great to see the case for concern made accessible to the public. Yudkowsky and Soares’s prescription is clear: to avoid extinction, we must not build superintelligence.

You can get the book here!

https://ifanyonebuildsit.com

More AI News

The UK AI Bill

The UK government still hasn’t delivered on its promised AI Bill.

Saskia Koopman, writing in City A.M., points out that ministers promised robust, binding regulation on the most advanced systems. But a year into Labour's government, there's still no AI Bill in sight.

Every delay puts us at risk. AI companies are rushing to build artificial superintelligence — AI vastly smarter than humans — despite warnings about the risk from countless experts.

Polling by YouGov shows overwhelming public support for regulation, with huge majorities supporting the establishment of a national AI regulator and audits of powerful systems. We can't delay any longer. The UK needs an AI Bill.

Dario Amodei

At Axios’ AI summit, Dario Amodei, the CEO of Anthropic (one of the largest AI companies), was asked for his “p(doom)” estimate and said he thinks there’s a “25% chance that things go really, really badly”. A “p(doom)” is an estimate of the probability that AI results in human extinction or a similarly terrible outcome.

When those actively working to build superintelligence say that they believe the chances of it going wrong are so high, we should listen. Many experts are far more pessimistic than Amodei.

Take Action!

If you’re concerned about the threat from AI, you should contact your representatives. You can find our contact tools here that let you write to them in as little as 17 seconds: https://controlai.com/take-action.

You should also head over to our campaign site: https://campaign.controlai.com for more ways you can help!

We also have a Discord you can join if you want to help keep humanity in control. Sharing this article with friends helps too!
