The Approaching Horizon

AI time horizons, bioweapons development, slipping safety standards, and more

This week we're updating you on some of the latest developments in AI and what they mean for you. If you'd like to continue the conversation, join our Discord!

AI time horizons

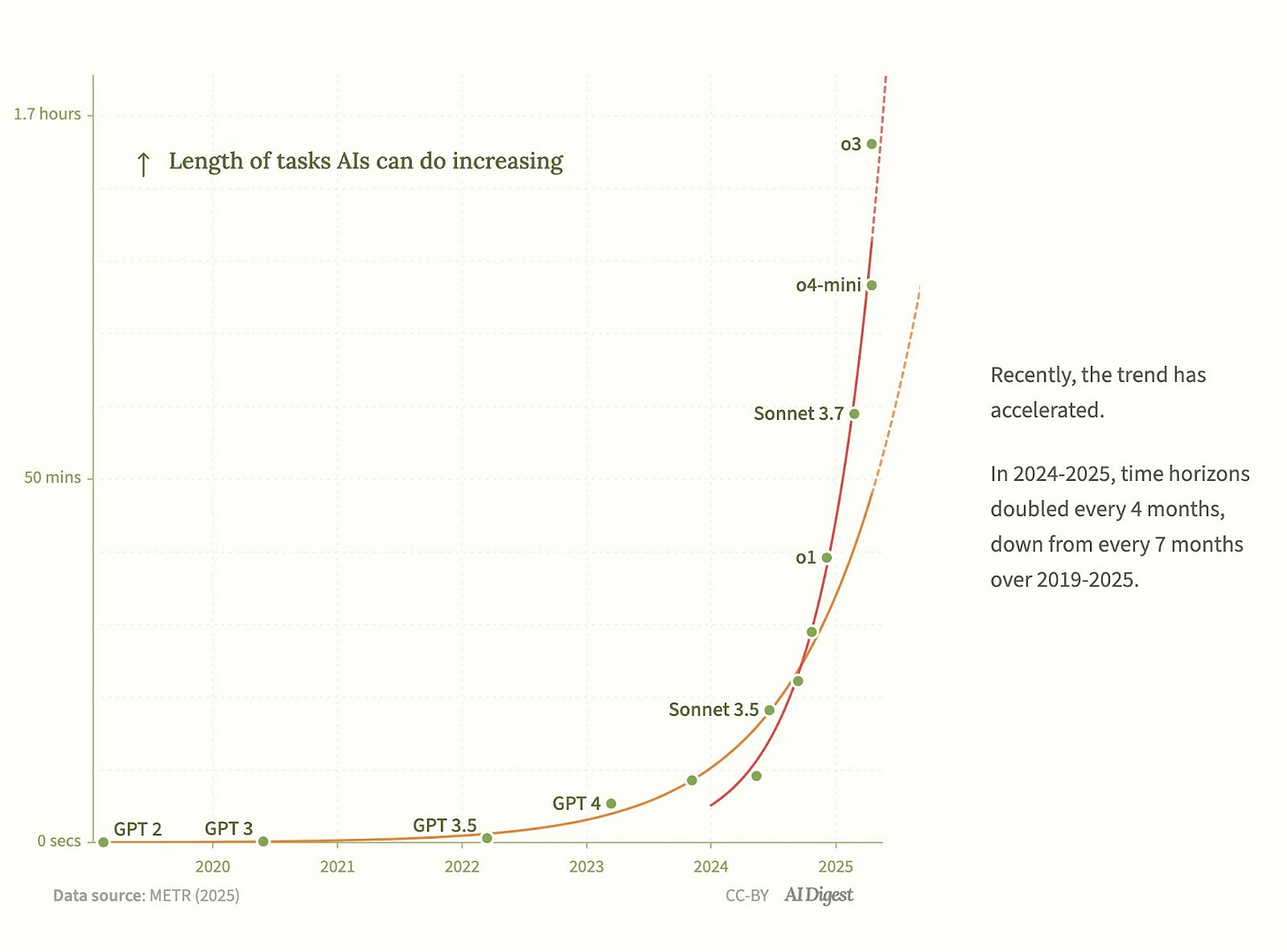

Last month, we covered a recent METR paper which found that the length of tasks that AI agents can complete has been consistently doubling every 7 months for the last 6 years.

Since then, OpenAI’s new models, o3 and o4-mini, have been tested. It turns out that the doubling time isn’t 7 months anymore. It’s 4 months.

That means that if AIs can do tasks that take expert humans an hour today, in 4 months they’d be able to do tasks that take 2 hours, 4 months after that tasks that take 4 hours, and so on.
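As a rough illustration of that arithmetic, here is a minimal Python sketch. It assumes growth follows a clean exponential, and the 1-hour starting horizon is only the hypothetical from the example above, not a measured figure; the function name `horizon_hours` is our own.

```python
def horizon_hours(start_hours: float, months_elapsed: float, doubling_months: float) -> float:
    """Task horizon after `months_elapsed` months, assuming a clean exponential
    that doubles every `doubling_months` months (an idealization)."""
    return start_hours * 2 ** (months_elapsed / doubling_months)


# Hypothetical starting point: AIs can do 1-hour tasks today.
for months in (0, 4, 8, 12):
    print(f"after {months:2d} months: {horizon_hours(1.0, months, 4.0):.0f}h")
```

With a 4-month doubling time this prints horizons of 1, 2, 4 and 8 hours: a year of progress multiplies the horizon by eight.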

The previous rate of doubling every 7 months was already a very concerning trend that served as solid evidence that superintelligence could arrive in the near future.

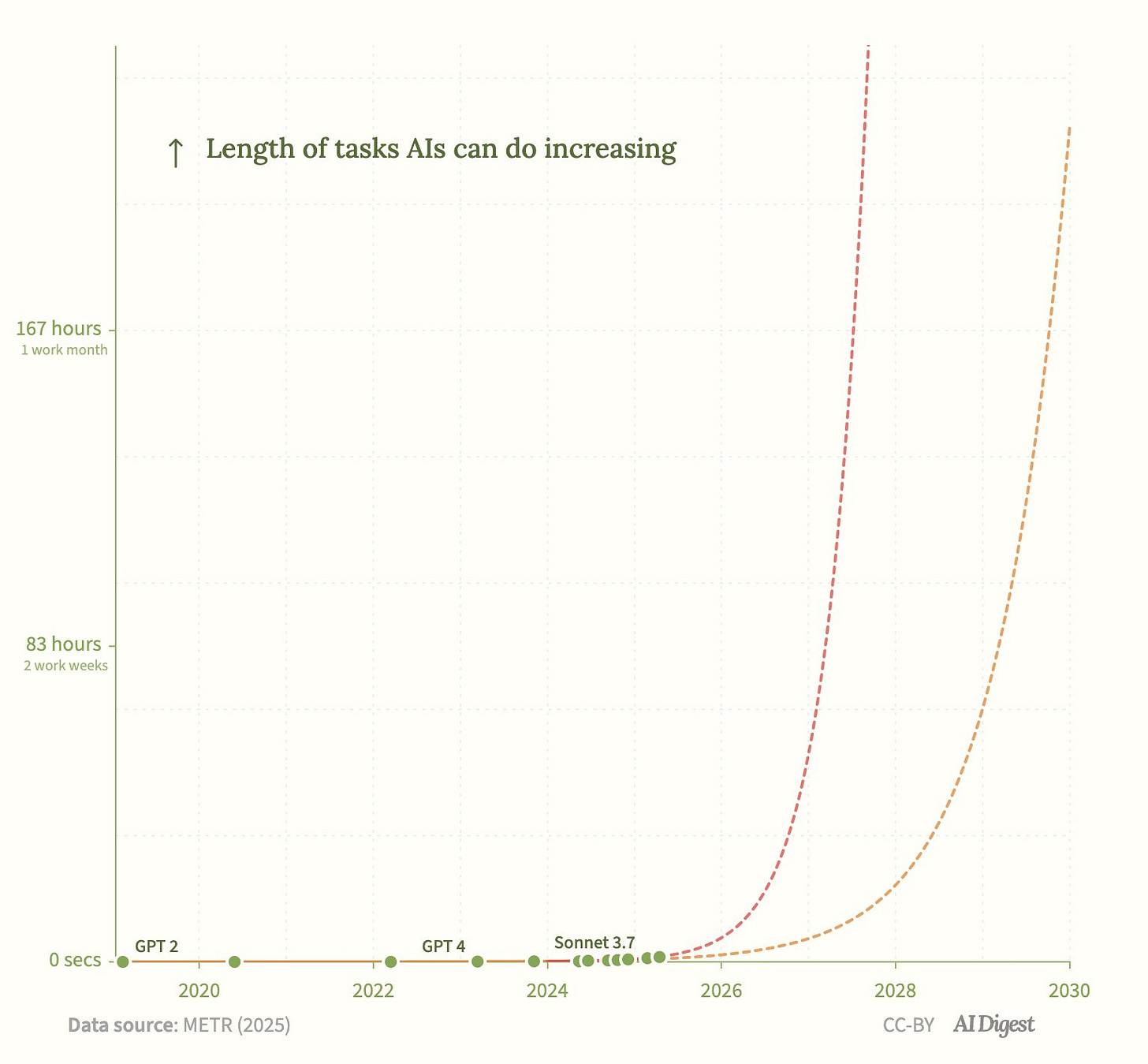

This change is even more concerning: it moves the projected date at which AIs can do month-long tasks forward by years.
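To see why a shorter doubling time pulls the date forward so much, the sketch below inverts the exponential: the time needed to reach a target horizon is the doubling time multiplied by the number of doublings required, log2(target / start). The 1-hour starting horizon and the figure of about 173 working hours for a "month-long" task (40 hours a week, 52 weeks, spread over 12 months) are illustrative assumptions, not numbers from the METR paper, and `months_until` is a hypothetical helper.

```python
import math

# Illustrative assumptions, not figures from the METR paper:
START_HOURS = 1.0                  # hypothetical current horizon (1-hour tasks)
MONTH_OF_WORK_HOURS = 40 * 52 / 12  # ~173 working hours in an average month


def months_until(target_hours: float, start_hours: float, doubling_months: float) -> float:
    """Months until the horizon reaches `target_hours`, assuming clean exponential growth."""
    doublings_needed = math.log2(target_hours / start_hours)
    return doubling_months * doublings_needed


for doubling in (7.0, 4.0):
    months = months_until(MONTH_OF_WORK_HOURS, START_HOURS, doubling)
    print(f"doubling every {doubling:.0f} months: ~{months:.0f} months ({months / 12:.1f} years)")
```

Under these assumptions the 7-month trend reaches month-long tasks in roughly 52 months and the 4-month trend in roughly 30, so the faster trend arrives nearly two years earlier. A shorter starting horizon would widen that gap further.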

How long it takes a skilled human to perform a task seems like a useful way of gauging the difficulty of a problem. If we define intelligence as the ability to solve problems (a common definition), then measuring task horizons could be a useful proxy for measuring intelligence.

It’s clear that AI systems are rapidly becoming more capable, with their capabilities, as measured by this method, growing exponentially. Yet nobody knows how to ensure that superhuman AI systems are safe or controllable.

Exponentials are notoriously difficult to react to. What seems small and insignificant one day can rapidly grow to become overwhelming shortly afterwards.

That’s why we need proactive regulation on the development of the most powerful AI systems, before they get to the level where they pose a risk of extinction to humanity.

AI-assisted bioweapons development

Many in the field of AI safety have been concerned that at some point AI systems will become capable enough to assist with bioweapons development.

This week, new research investigating this question was published. Researchers developed a new test called the Virology Capabilities Test (VCT), which aims to measure the ability to troubleshoot complex virology lab protocols.

The paper found that expert virologists with access to the internet score an average of 22.1% on questions in their sub-areas of expertise. Concerningly, OpenAI’s o3 model scores much higher, reaching 43.8% accuracy and outperforming 94% of expert virologists, even within their sub-areas of expertise.

The authors point out that the ability to perform well on these tasks is inherently dual-use. It’s useful for beneficial research, but could also help with malicious uses, like developing bioweapons.

The likelihood that AI systems could soon be misused to significantly assist with the development of bioweapons, or indeed to conduct complex, large-scale cyberattacks, poses a particular problem for open-weight AI systems, whose model weights are released to the public.

When a developer provides only API access, it can apply safety mitigations that help prevent this type of misuse, and it can monitor and restrict access for dangerous uses, though ways to bypass these mitigations are routinely found. When the model weights are released, all bets are off, as these safety mitigations can currently be trivially removed.

OpenAI watering down safety commitments

OpenAI has been quietly watering down its safety commitments. Former OpenAI safety researcher Steven Adler observed that OpenAI made a significant change to its Preparedness Framework but did not include it in its list of changes.

The change is that OpenAI no longer requires safety testing of fine-tuned versions of its AI models. That means people could potentially fine-tune OpenAI’s models for malicious purposes without the resulting models having to be safety tested.

Here’s the original version:

And the current version, which says fine-tuned models only need testing if their weights are to be released:

Opposition to OpenAI’s conversion to a for-profit

Pushback against OpenAI’s attempt to convert into a for-profit company continues to mount, with a significant open letter addressed to California Attorney General Bonta and Delaware Attorney General Jennings.

The letter, signed by leading legal and AI experts as well as 9 former OpenAI employees, makes two key calls:

Demand answers to fundamental questions. OpenAI has not publicly explained how its proposed restructuring will advance the nonprofit’s charitable purpose of safely developing AGI for the benefit of humanity. Nor has it provided adequate explanations for why the governance safeguards that Mr. Altman testified to Congress were important to OpenAI’s mission as recently as 2023 became obstacles to its mission in 2024.

Protect the charitable trust and purpose by ensuring the nonprofit retains control. We request that you stop the restructuring and protect the governance safeguards—including nonprofit control—that OpenAI leadership have insisted are important to “ensure[ ] it remains focused on [its] long-term mission.”

The letter also explains in detail why the conversion should not be allowed to take place.

This comes just a couple of weeks after 12 former OpenAI employees filed an amicus brief in Elon Musk’s lawsuit against OpenAI, which seeks to block the company from converting to a for-profit.

Thanks for reading our newsletter. Are you concerned about the threat from AI? Let your elected representatives know!

We have tools that make it super quick and easy to contact your lawmakers. It takes less than a minute to do so.

If you live in the US, you can use our tool to contact your senator here: https://controlai.com/take-action/usa

If you live in the UK, you can use our tool to contact your MP here: https://controlai.com/take-action/uk

Join 800+ citizens who have already taken action!