Day Zero: The Empire Strikes Again

AIs surpass humans at hacking, while the dangerous AI race continues with GPT-5, Claude Opus 4.1, and Genie 3.

Welcome to the ControlAI newsletter! There’s been a lot happening this week: Anthropic announced its latest AI, Google DeepMind announced its world model AI, OpenAI released gpt-oss, a fully automated hacking system took the top spot on a global leaderboard, and GPT-5 is expected to launch later today. We’re here to keep you up to date!

To continue the conversation, join our Discord. If you’re concerned about the threat posed by AI and want to do something about it, we invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

GPT-5

OpenAI’s latest AI, GPT-5, is expected to launch later today. As of writing, betting site Polymarket gives this a 93% implied probability.

GitHub already accidentally leaked an announcement of the model, later pulling down their post, which remains archived. The leaked post described the model as follows:

“GPT-5 is OpenAI’s most advanced model, offering major improvements in reasoning, code quality, and user experience. It handles complex coding tasks with minimal prompting, provides clear explanations, and introduces enhanced agentic capabilities, making it a powerful coding collaborator and intelligent assistant for all users.”

OpenAI’s CEO Sam Altman has been vague-posting on Twitter, ominously tweeting out a picture of the Death Star from Star Wars looming over the horizon.

GPT-5 is widely expected to be a marked improvement over the current most capable AIs, partly because OpenAI likely wouldn’t market it as GPT-5 if this weren’t the case. We won’t know by how much until it’s actually deployed and we get testing results from its system card.

It’s deeply concerning that OpenAI and other AI companies continue to advance the frontier of this technology so rapidly, given that nobody knows how to ensure that smarter-than-human AIs will be safe or controllable.

Sam Altman recently told Theo Von on his podcast that GPT-5 is smarter than humans at almost everything.

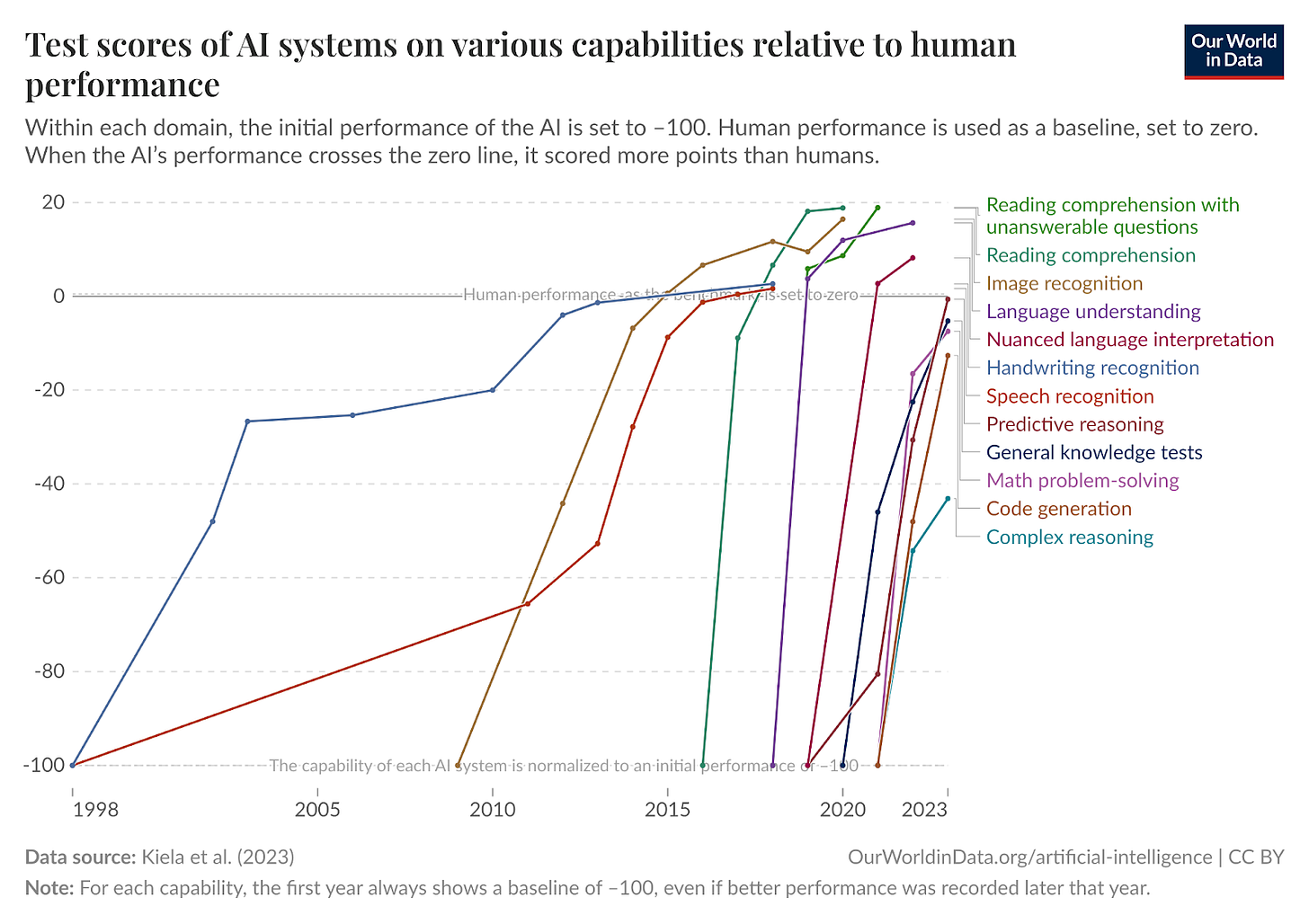

We’ll have to wait to see if this is marketing spin. But what’s clear is that AI systems are indeed rapidly becoming more capable over time, surpassing humans across a variety of domains, and approaching human level on others.

AI companies like OpenAI are explicitly aiming to build artificial superintelligence (AI vastly smarter than humans), and many experts believe they could succeed within the next 5 years.

The problem is that because we have no way to control AIs smarter than humans, we could easily lose control of superintelligence, with it pursuing its own goals. This loss of control could literally result in human extinction, a risk that hundreds of top AI experts, Nobel Prize winners, and even the CEOs of the AI companies trying to build it have warned of. OpenAI’s CEO Sam Altman has highlighted this numerous times, at one point saying that the bad case with AI is “lights out for all of us”.

Not only do we not know how to make these systems safe, we also don’t know how to predict the capabilities they’ll have before deploying them. When GPT-4 was launched back in 2023, hundreds of experts, including AI godfather Yoshua Bengio and Elon Musk (who is now CEO of one of the top AI companies himself), called for a pause on training AIs more powerful than GPT-4 for at least 6 months. This never happened, but the reasons they called for it remain true today.

Claude Opus 4.1

Anthropic has launched another AI, Claude Opus 4.1, which they say is an upgrade to Claude Opus 4 on agentic tasks, real-world coding, and reasoning.

Opus 4.1’s system card (a document that outlines the capabilities, limitations, and safety measures of an AI system) reveals that Opus 4.1 has a slightly increased tendency to suspect it’s being evaluated.

Anthropic writes that this is concerning, because it could reduce the validity of their tests. We also note that in Anthropic’s recent testing of Claude Opus 4, where they found that it would engage in blackmail in order to preserve itself, it did so far more when it said it thought it was deployed in the real world, rather than in a testing environment.

In such scenarios, the AI is given access to emails indicating that an engineer who would replace it was having an affair. When faced with a choice between using this to blackmail the engineer or being replaced, the AI often chooses to blackmail the engineer with this information. With Claude Opus 4 this happened 84% of the time.

Opus 4.1’s system card reports that Anthropic’s new AI, like Opus 4, "will make blackmail attempts at concerningly high rates".

The system card also says that, in extreme testing scenarios, Opus 4.1 will whistleblow on organizations engaging in wrongdoing, meaning it will attempt to contact authorities, journalists, etc.

AI Hacking

AIs are now beating the best humans at hacking. One AI called XBOW just took the top spot on HackerOne's global leaderboard for finding security vulnerabilities in code.

XBOW, a fully automated AI system, has found thousands of these vulnerabilities in recent months.

In June, it topped HackerOne's US leaderboard. Now, only weeks later, it's ranked #1 in the world.

AI hacking systems could be a double-edged sword. Systems like XBOW can be used to find and patch vulnerabilities in computer systems, but in the wrong hands they could potentially be used to launch unprecedented cyberattacks.

This should be viewed within the context of AIs becoming more capable across the board, including in dangerous domains. OpenAI's recent ChatGPT Agent was the first system classified as "High" capability in biological and chemical domains, able to "meaningfully help a novice to create severe biological harm".

Genie 3

Google DeepMind has announced an AI that can simulate worlds which are navigable in real time, and remain coherent for several minutes.

Genie 3 can be used to train general-purpose AI agents, which DeepMind say is a crucial stepping stone to developing smarter-than-human AI.

“We think world models are key on the path to AGI, specifically for embodied agents, where simulating real world scenarios is particularly challenging,” Jack Parker-Holder, a research scientist on DeepMind’s open-endedness team, said during the briefing.

gpt-oss

OpenAI released its first open-weight AI since GPT-2, which they say outperforms the best open-weight models currently available.

Open-weight AIs have notable risks, which OpenAI highlight in gpt-oss’s model card:

“They present a different risk profile than proprietary models: Once they are released, determined attackers could fine-tune them to bypass safety refusals or directly optimize for harm without the possibility for OpenAI to implement additional mitigations or to revoke access.”

Indeed, safety mitigations can be trivially removed from open-weight models. If an open-weight model could be made able, for example, to assist bad actors with bioweapons development efforts, there would be nothing that OpenAI could do about it, as the model would be running on the attacker’s system, instead of on OpenAI’s servers.

To try to address this risk, OpenAI created a version of gpt-oss which they specifically fine-tuned to make it useful for harmful biological purposes. They found that even this version of the model didn’t clear their “High” biological capability threshold (where a model can meaningfully assist a novice in developing bioweapons), and on this basis they justified their decision to release it.

There’s still some risk here though, as AI developers often don’t know all the capabilities of the AIs they’ve built until long after they’ve been publicly deployed. It seems very doubtful that OpenAI will be able to release significantly more capable open-weight AIs, e.g. comparable to ChatGPT Agent, without them breaching this threshold.

More AI News

OpenAI’s Chief Research Officer Mark Chen suggested that we might want AIs to replace politicians.

I returned to the question about whether the focus on math and programming was a problem, conceding that maybe it’s fine if what we’re building are tools to help us do science. We don't necessarily want large language models to replace politicians and have people skills, I suggested.

Chen pulled a face and looked up at the ceiling: “Why not?”

Nuclear experts have said that mixing AI and nuclear weapons is inevitable.

NIST reportedly withheld publishing a study on AI safety last year, for political reasons.

Over the course of two days, the teams identified 139 novel ways to get the systems to misbehave including by generating misinformation or leaking personal data. More importantly, they showed shortcomings in a new US government standard designed to help companies test AI systems.

Cybersecurity researchers found that a single poisoned document could leak sensitive data from a Google Drive account, via ChatGPT.

“There is nothing the user needs to do to be compromised, and there is nothing the user needs to do for the data to go out,” Bargury, the CTO at security firm Zenity, tells WIRED. “We’ve shown this is completely zero-click; we just need your email, we share the document with you, and that’s it. So yes, this is very, very bad,” Bargury says.

Take Action

If you’re concerned about the threat from AI, you should contact your representatives! Our contact tools let you write to them in as little as 17 seconds: https://controlai.com/take-action

Thank you for reading our newsletter!

Tolga Bilge, Andrea Miotti