85+ UK Politicians Support Binding AI Regulation

UK lawmakers acknowledge the AI extinction threat and call for binding regulation on the most powerful AI systems.

AI experts continue to warn that the development of superintelligence, AI vastly smarter than humans, poses a risk of human extinction. This is a grave problem, but it is not without a solution.

This week we’re bringing you an important update on the progress of ControlAI’s UK campaign to prevent this threat, along with news on other developments in AI.


If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

And if you have 5 minutes per week to spend on helping make a difference, we encourage you to sign up to our Microcommit project! Once per week we'll send you a small number of easy tasks you can do to help. You don't even have to do the tasks; just acknowledging them makes you part of the team.

Our UK Campaign Is Growing Rapidly

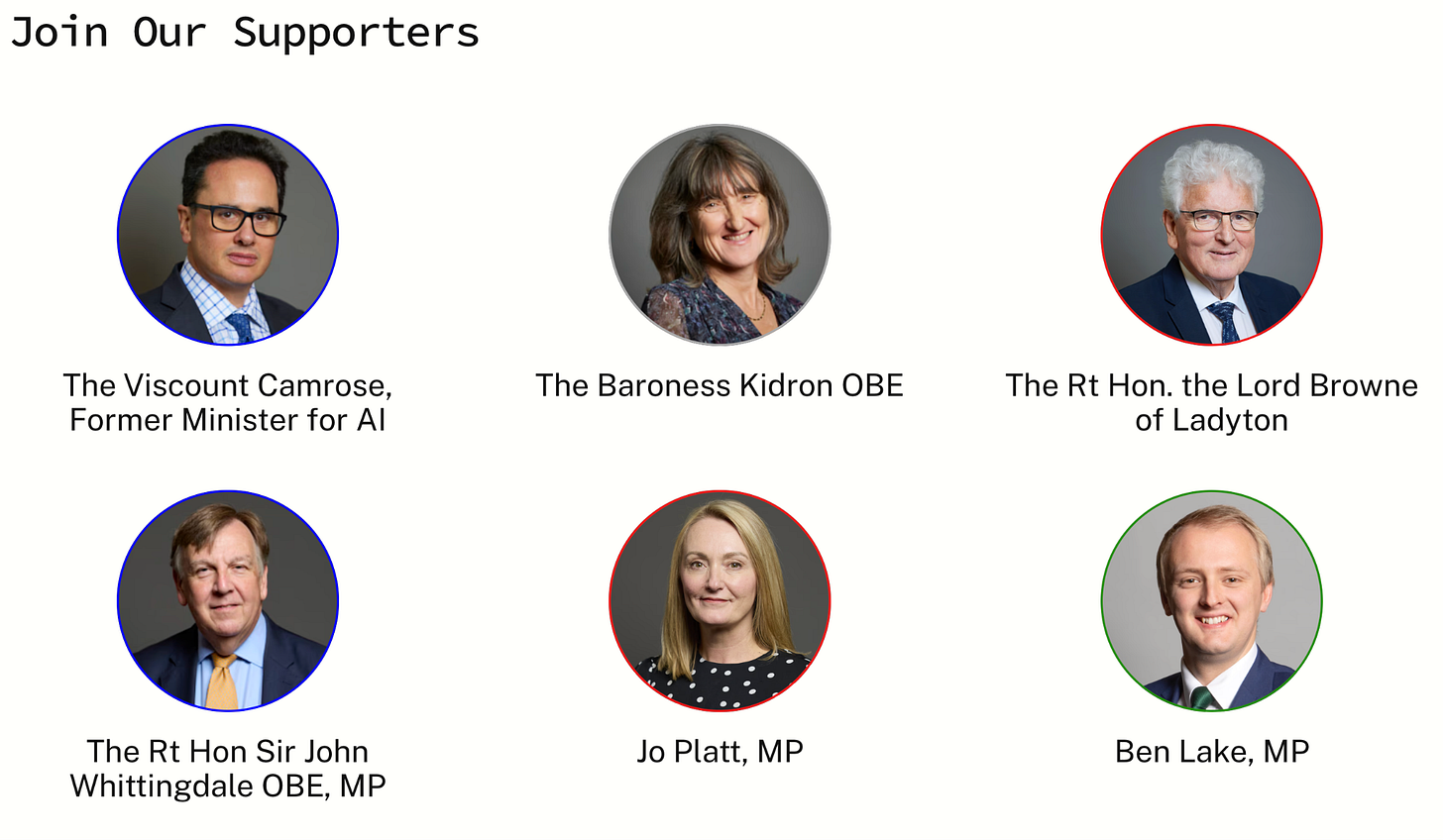

In recent months, our UK campaign for binding regulation on the most powerful AI systems has been ramping up in a big way. Since our last major update in this newsletter, the number of politicians who've backed our campaign statement has more than doubled, from 37 to over 85!

Our campaign statement is short and simple, and reads:

Nobel Prize winners, AI scientists, and CEOs of leading AI companies have stated that mitigating the risk of extinction from AI should be a global priority.

Specialised AIs - such as those advancing science and medicine - boost growth, innovation, and public services. Superintelligent AI systems would compromise national and global security.

The UK can secure the benefits and mitigate the risks of AI by delivering on its promise to introduce binding regulation on the most powerful AI systems.

This statement does three key things:

It acknowledges the extinction threat posed by AI, which a vast array of experts and industry leaders have warned needs to be addressed.

It identifies superintelligent AI, or superintelligence, as a threat to national and global security. The development of superintelligence is where the AI extinction threat comes from. Specialised AIs, by contrast, can be used beneficially.

It is a clear call for binding regulation on the most powerful AI systems to mitigate the risks of AI.

When politicians support it, they immediately create common knowledge that many others take this risk seriously and want to address it. Common knowledge about the need to address a problem makes tackling it much easier, because nobody feels like they are alone. And they are not.

Assembling this coalition is a particularly significant moment: it's the first time anywhere in the world that a coalition of lawmakers has taken a stand on this profound security crisis.

Supporters include Viscount Camrose, the former Minister for AI, Lord Browne of Ladyton, the former Defence Minister, Baroness Kidron OBE, and Sir John Whittingdale OBE, MP, the former Minister of State for the Department of Science, Innovation and Technology.

When we started this campaign, people in the policy field told us it would be impossible to get even one politician to publicly acknowledge the extinction threat of AI. We’ve shown that to be completely wrong.

This cross-party coalition was not built by complex political manoeuvring, but by directly and systematically contacting, meeting, and informing lawmakers almost every day about the threat from superintelligent AI and the policy solutions. Members of our team have now given over 120 briefings to lawmakers.

Just a couple of weeks ago, a tremendous coalition of experts and leaders called for the development of superintelligence to be prohibited, a call that we’re proud to have provided early support for.

Despite this broad agreement to prohibit superintelligence, top AI companies are racing to build it. Just last week, OpenAI’s Chief Scientist said it could be built within a decade. There is almost no regulation holding these companies to adequate safety measures.

Our first-of-its-kind coalition of lawmakers calling for regulation builds a firm base of support for legislative action to protect humanity. ControlAI has been working directly on this too. Earlier this year, members of our team presented, at the UK Prime Minister's Office, an AI bill we developed to prevent superintelligence and to monitor and restrict its precursors!

Superintelligence threatens all of us, and everyone has a stake here. One thing we’ve always wanted to do is to enable citizens to make a difference. On our campaign site, we provide civic engagement tools and resources that allow you to do this in mere seconds.

Your voice really makes a difference. Using our tools, citizens have sent over 80,000 messages to US and UK lawmakers, with over 10 UK lawmakers joining our campaign as a result!

Despite experts' warnings that AI poses an extinction risk, there is still no legislation to address this threat. Labour promised regulation on the most powerful AIs, and now it's time to deliver.

Help us make this happen by using our tools to get in touch with your representatives here!

https://campaign.controlai.com/take-action

A Dive Into AI Safety Tests

A new article by Robert Booth in The Guardian reports that hundreds of AI safety and effectiveness tests have been found to be weak and flawed.

UK AI Security Institute scientists and others checked over 440 benchmarks, finding issues that undermine their validity. Booth highlights that this comes after reports of real-world damage associated with AIs.

We thought it would be helpful here to provide some context on AI safety tests. In addition to the methodological flaws the recent study found, there is a deeper issue which makes them difficult to rely on to ensure safety.

Modern AIs aren't like normal computer programs. Rather than being written line by line like normal code, AIs are grown, almost like creatures. A simple algorithm processes tremendous amounts of data and dials billions of numbers up and down, and from this process emerges a form of intelligence. Nobody really knows how to interpret what these numbers mean. People are working on it, but the research is at an early stage.

These AIs can learn things like goals and behaviors, including ones we don't want. Importantly, we have no way to reliably specify these, or even to check them.

Researchers can run tests on AIs after they’ve been trained and demonstrate that a particular behavior exists if the AI exhibits it in tests. But they have no way to prove that the AI won’t do something we don’t want it to do.

This can be because their tests were lacking and they simply failed to elicit a behavior. We’ve seen many cases where researchers find out months later that an AI was capable of doing something they didn’t realize it could do.

More concerning, it could also happen if an AI realizes it is being tested and conceals how capable it is. The most advanced AIs today show significant awareness that they’re being tested and do exhibit lower rates of malicious behavior when they say they believe they’re being tested.

As ever more powerful AIs are developed and AI companies race to build superintelligence, this only becomes more concerning, as nobody knows how to ensure that smarter-than-human AIs won’t turn against us.

Weekly Digest

Modeling the geopolitics of AI development

How would continued rapid AI development shape geopolitics if there’s no international coordination to prevent dangerous AI development?

A new paper models this scenario. Its conclusion: without international coordination, there is no safe path.

King Charles warns of AI dangers

Nvidia’s CEO Jensen Huang has said that King Charles recently provided him with a copy of a speech The King gave in 2023, warning about the risks of AI. In the speech, King Charles said that the risks of AI need to be tackled with a “sense of urgency, unity and collective strength”.

We’ve Lost Control of AI

In a new video made in partnership with SciShow, science communicator Hank Green explains the concerning trends we see in AI.

SciShow has over 8 million subscribers, so it’s great to see so many people learn about this problem! With over 1.5 million views in less than a week, it’s already SciShow’s top video this year (out of more than 200 videos) by number of likes and comments. We hope you’ll find it interesting.

Take Action

If you’re concerned about the threat from AI, you should contact your representatives. You can find our contact tools here that let you write to them in as little as 17 seconds: https://campaign.controlai.com/take-action.

We also have a Discord you can join if you want to connect with others working on helping keep humanity in control, and we always appreciate any shares or comments — it really helps!
