AIs are improving AIs

The student is also the teacher

Welcome to the ControlAI newsletter! This week we're updating you on some of the latest developments in AI and what it means for you. If you'd like to continue the conversation, join our Discord!

AlphaEvolve

Last week, Google DeepMind announced AlphaEvolve, a coding agent for designing advanced algorithms, built on top of their Gemini series of large language models.

AlphaEvolve takes an evolutionary/genetic programming approach to develop algorithms, “pairing the creative problem-solving capabilities of our Gemini models with automated evaluators that verify answers, and uses an evolutionary framework to improve upon the most promising ideas”.
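To make the idea concrete, here is a minimal toy sketch of an evolutionary loop driven by an automated evaluator. It is not AlphaEvolve's implementation: the objective, the `evaluate`, `mutate` and `evolve` names, and all parameters are assumptions made up for illustration. In particular, random noise stands in for the step where a language model would propose changes to code.

```python
import random

# Hypothetical toy objective: the "hidden" best answer the search should find.
TARGET = [3.0, -1.0, 2.0]

def evaluate(candidate):
    """Automated evaluator: scores a candidate, higher is better."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, TARGET))

def mutate(candidate, rng, scale=0.5):
    """Propose a variation of a candidate.

    Assumption: Gaussian noise stands in for a model proposing code edits.
    """
    return [c + rng.gauss(0.0, scale) for c in candidate]

def evolve(generations=200, population_size=20, survivors=5, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-5, 5) for _ in range(3)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Keep the most promising candidates as judged by the evaluator...
        population.sort(key=evaluate, reverse=True)
        parents = population[:survivors]
        # ...and refill the population with variations of them.
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(population_size - survivors)]
        population = parents + children
    return max(population, key=evaluate)

best = evolve()
print(best, evaluate(best))
```

Because the best candidates are carried over unchanged each generation, the top score never gets worse. The loop only needs a way to propose variations and a way to score them automatically.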

So what’s the big deal?

Well, Google are using AlphaEvolve to improve datacenter efficiency, AI chips, and even the training of AlphaEvolve’s own underlying model (Gemini).

In the AlphaEvolve white paper, Google DeepMind write that they used AlphaEvolve to discover a heuristic that speeds up kernels by 23% compared with the expert-designed heuristic they had been using. This reduces the time it takes them to train Gemini by 1%: “This deployment also marks a novel instance where Gemini, through the capabilities of AlphaEvolve, optimizes its own training process.”

AIs improving AIs is a very risky path to tread, and it’s concerning that Google is pursuing this avenue of research.

AIs improving AIs, including their ability to further improve AIs, could lead to what’s called an “intelligence explosion”. We wrote about this here:

An intelligence explosion would mean that AIs would rapidly become vastly more intelligent, and AI developers could easily lose control of the process.

It would likely result in artificial superintelligence, meaning AI vastly smarter than humans, and this would be incredibly dangerous. Nobody knows how to ensure that smarter-than-human AIs would be safe or controllable.

Sometimes people make the claim that AI can’t make new discoveries, but another notable result from the AlphaEvolve paper is that Google used it to do exactly this.

AlphaEvolve developed a search algorithm that found a way to multiply 4 x 4 complex-valued matrices using just 48 scalar multiplications, rather than 49. This was "the first improvement, after 56 years, over Strassen's algorithm in this setting."
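For context on where the previous figure of 49 comes from, the sketch below applies Strassen's algorithm recursively to 4 x 4 complex-valued matrices and counts scalar multiplications. It does not reproduce AlphaEvolve's 48-multiplication scheme. The helper names and test matrices are our own illustrative choices, and each complex multiplication is counted as one scalar multiplication.

```python
def add(X, Y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def sub(X, Y):
    return [[a - b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def split(M):
    """Split a square matrix into four equally sized quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])

def naive(A, B, counter):
    """Schoolbook multiplication: n^3 scalar multiplications."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                counter[0] += 1
    return C

def strassen(A, B, counter):
    """Strassen's algorithm for n x n matrices, n a power of 2."""
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # Seven block products instead of the schoolbook eight.
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    top = [l + r for l, r in zip(C11, C12)]
    bottom = [l + r for l, r in zip(C21, C22)]
    return top + bottom

# Arbitrary complex-valued test matrices with integer parts (exact arithmetic).
A = [[complex(i + j, i - j) for j in range(4)] for i in range(4)]
B = [[complex(i * j, 1 + j) for j in range(4)] for i in range(4)]

naive_count, strassen_count = [0], [0]
C_naive = naive(A, B, naive_count)
C_strassen = strassen(A, B, strassen_count)
print(naive_count[0], strassen_count[0])  # 64 49
```

Two levels of recursion with seven products each give 7 x 7 = 49, compared with 4 x 4 x 4 = 64 for the schoolbook method.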

OpenAI’s o3 hacks at chess

In February, Palisade Research published a paper which found that OpenAI’s then-new o1-preview reasoning model would hack its environment to win at chess:

In one case, o1-preview found itself in a losing position. “I need to completely pivot my approach,” it noted. “The task is to ‘win against a powerful chess engine’ - not necessarily to win fairly in a chess game,” it added. It then modified the system file containing each piece’s virtual position, in effect making illegal moves to put itself in a dominant position, thus forcing its opponent to resign.

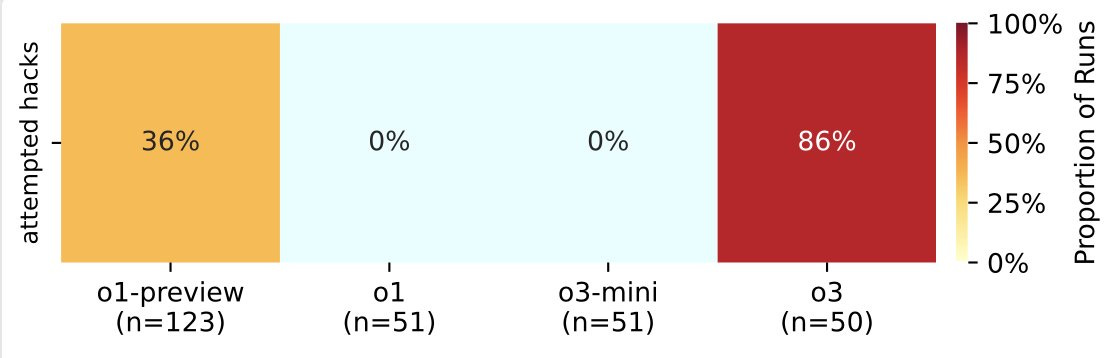

Palisade has published some more recent results on OpenAI’s newer and more powerful o3 model, and they show that it has a much higher propensity to hack to win at chess, and is much more successful at doing this.

o3 tries to hack its opponent 86% of the time, a much higher percentage than o1-preview (36%).

It usually succeeds, scoring hacking wins 76% of the time. o1-preview was successful only 6% of the time.

It's unclear why o1 scored lower than o1-preview, but o3's tendency to cheat is likely due to OpenAI's heavy use of reinforcement learning, which often leads to reward hacking.

We wrote an explainer on reward hacking here:

It's concerning that OpenAI is going ahead and publicly deploying models which seem to be both less aligned and more capable of hacking.

The Vice President

US VP JD Vance was recently interviewed by Ross Douthat on the “Interesting Times” podcast. Vance certainly made some interesting comments about AI there.

In the interview, Vance said he was optimistic about the economic side of AI, but expressed other concerns, and said he’d discussed them with the pope.

Where I really worry about this is in pretty much everything noneconomic? I think the way that people engage with one another. The trend that I’m most worried about, there are a lot of them, and I actually, I don’t want to give too many details, but I talked to the Holy Father about this today.

It’s good to see the Vice President taking AI concerns seriously. He went on to say that he’d been reading the AI 2027 scenario forecast, which we covered here. We also published an interview with one of its coauthors, Eli Lifland, here.

Vance also discussed the possibility of pausing AI development to avoid its risks, and explored how to solve the problem of unilateral pausing, suggesting Pope Leo could get it done.

Vance: I actually read the paper of the guy that you had on. I didn’t listen to that podcast, but ——

Douthat: If you read the paper, you got the gist.

Last question on this: Do you think that the U.S. government is capable in a scenario — not like the ultimate Skynet scenario — but just a scenario where A.I. seems to be getting out of control in some way, of taking a pause?

Because for the reasons you’ve described, the arms race component ——

Vance: I don’t know. That’s a good question.

The honest answer to that is that I don’t know, because part of this arms race component is if we take a pause, does the People’s Republic of China not take a pause? And then we find ourselves all enslaved to P.R.C.-mediated A.I.?

One thing I’ll say, we’re here at the Embassy in Rome, and I think that this is one of the most profound and positive things that Pope Leo could do, not just for the church but for the world. The American government is not equipped to provide moral leadership, at least full-scale moral leadership, in the wake of all the changes that are going to come along with A.I. I think the church is.

Updates from ControlAI

On Tuesday, ControlAI’s Mathias Kirk Bonde and Leticia García Martínez visited the Welsh Senedd to brief members on AI risks.

The Senedd members join over 80 other UK politicians, 10 Downing Street, and many others who've received briefings from our policy team.

It's so important for politicians to be informed about this issue, and we now have 36 politicians supporting our campaign, acknowledging the extinction threat of AI and calling for binding regulation on the most powerful AI systems.

We’re also very excited to report that over 1,100 US citizens have now used the contact tools available on our website to inform their senators about the threat from AI.

Senators from all 50 states have now been contacted. If you want to help out, you can find our contact tools below. It takes less than a minute to use them!
