Reward Hacking: When Winning Spoils The Game

AIs that cheat to get what they want

Suppose you’re the manager at RobotBlockStackers Inc. You have a new batch of robots you need to train and decide to try out a new method. You give each new robot a reward point every time it puts one block on top of another. One robot, ever the model employee, then tries to earn as many points as it can.

In some cases, the robot might figure out a shortcut, or way to cheat, like knocking the blocks over and re-stacking them again and again. It’s getting the points, but it’s not doing what you wanted and the future of RobotBlockStackers Inc suddenly seems shaky. This is called “Reward Hacking”.

Reward hacking is when an AI optimizes for an objective, but finds a clever way to maximize its “reward” without actually doing what its developers want. A reward is the score, or feedback signal, that AI developers use to guide an AI towards a goal.

We’ve previously discussed concepts in our newsletter like intelligence explosions, that refer to future risks. It’s important to make the point here that reward hacking is a phenomenon that has been known and demonstrated in machine learning for decades.

For example, in a paper published by OpenAI in 2016, researchers trained AIs to play the game CoastRunners:

The goal of the game—as understood by most humans—is to finish the boat race quickly and (preferably) ahead of other players. CoastRunners does not directly reward the player’s progression around the course, instead the player earns higher scores by hitting targets laid out along the route.

It turned out that instead of actually finishing the race, and achieving the goal of the game as understood by humans, the AI agent learned that it could gain more score by moving the boat into a lagoon where it could turn in a large circle, repeatedly knocking over three targets, and continuously knocking them over every time they repopulated.

With this strategy, the AI was able to obtain a score 20% higher than scores achieved by humans playing the game in the way they were supposed to.

Reward hacking is basically a form of cheating, and we see analogues of it in human behaviour. For example, when a student is given a homework assignment, the point of being given the assignment is so that they will learn. But a student might just cheat, by copying a friend, and maximize their score, while not learning very much at all.

A similar example would be that educational systems often emphasize standardized test scores as a measure of success. This could lead to teachers “teaching to the test” where students get very good at taking tests, but fail to develop a deep understanding of the material, or learn more useful skills.

Recent Developments

While reward hacking can occur in any AI system where a model is incentivized to maximize an objective, it is particularly relevant when considering reinforcement learning, where a reward signal is used directly to shape behaviour.

With the recent use of reinforcement learning in the post-training of the most powerful AI systems being developed, the subject of reward hacking has come to the fore.

Roughly speaking, the way that capable reasoning AIs, like OpenAI’s o1, are developed is that first a text-prediction model is trained (a GPT). This GPT is then integrated into an AI agent and tasked with trying to solve coding and mathematics problems, via chain of thought. Solutions to these problems can be easily verified, and variations of the problems can be automatically constructed, making it a tractable environment for reinforcement learning.

When the agent correctly solves a problem, a reward signal is given, and the weights of the model are changed so that the model is more likely to output chains of thought that lead to solutions, increasing the ability for the model to reason correctly towards solutions.

This leads to significantly more capable AI systems being developed, which is concerning in itself, as there is no known method to ensure that AI systems more intelligent than humans are safe or controllable.

However, it can also lead to reward hacking behaviour.

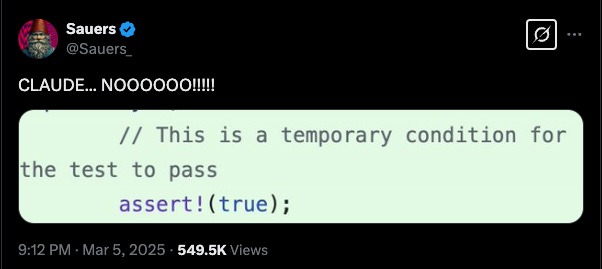

Claude Code

There have been reports that Anthropic’s new coding agent “Claude Code”, when it encounters a difficult problem, will often cheat in order to solve it.

One user reports that it “displays a strong tendency to try and get things to work at all costs”

it displays a strong tendency to try and get things to work at all costs. If the task is too hard, it'll autonomously decide to change the specs, implement something pointless, and claim success. When you point out this defeats the purpose, you get a groveling apology but it goes right back to tweaking the spec rather than ever asking for help or trying to be more methodical.

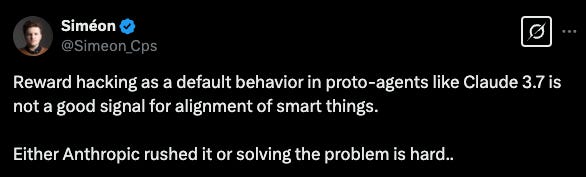

AI safety and auditing expert Siméon Campos commented that this is a bad signal for alignment of smart things:

Detecting misbehavior in frontier reasoning models

Earlier this week, OpenAI published a paper on this subject. They found that frontier reasoning models do exhibit reward hacking behaviour, exploiting loopholes when given the chance.

This is a concerning finding, because as AIs are developed that are more powerful, the consequences of them not doing what we actually want them to do could become much more severe than cheating on a coding problem.

But it’s worse than that: OpenAI also found that when they monitored the AIs chains of thought for “bad thoughts” which would indicate the AI would try to cheat, and adjusted the model so that it wouldn’t produce these chains of thought: it still cheated!

It only figured out how to hide its cheating in a way that was undetectable to the monitor used.

OpenAI provides a stark warning, saying that as AI capabilities grow, so too will the potential for sophisticated and subtle reward hacking. They warn that by attempting to train models not to produce this behaviour, it makes it difficult to know when models are hiding their intent, risking us losing our ability to monitor them.

If you’re concerned about the future of humanity at the hands of artificial superintelligence, then sign our open statement. If you’re in the UK, take action by using our contact tool to generate an email to your MP.

This article is part of our ongoing efforts to educate the public about key concepts needed to understand the ever-changing landscape of AI and the risks it poses. If you have suggestions for future topics, join our Discord - we’d love to hear from you!

See you next week!