AI Time Horizons Are Still Growing Exponentially

GPT-5 confirms this concerning trend is holding.

Welcome to the ControlAI newsletter! This week we're updating you on some of the latest developments in AI and what it means for you.

To continue the conversation, join our Discord. If you’re concerned about the threat posed by AI and want to do something about it, we invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

ChatGPT-5

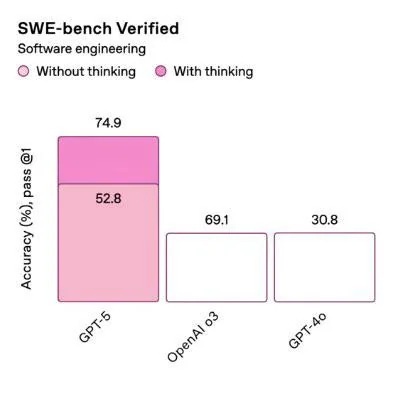

As we anticipated last week, OpenAI launched their newest AI, GPT-5, on Thursday. It was quite a mess, with their announcement stream showing off botched benchmark graphs like this:

Recursive Self-Improvement: Foreshadowing

During the stream, OpenAI’s Sébastien Bubeck made an interesting comment: he said that the methods OpenAI used to train GPT-5 foreshadow a recursive self-improvement loop.

Ex-OpenAI researcher Steven Adler commented on Twitter that he was surprised that OpenAI comms would approve of this language, since in OpenAI’s Preparedness Framework recursive self-improvement is listed as a “Critical” risk, which they may respond to by halting further development.

Bubeck was referring to the way OpenAI used one of their recent AIs (o3) to generate high-quality synthetic data, which they then used to train GPT-5. Adler notes that this isn’t an especially fast loop.

Recursive Self-Improvement — or more generally, AIs improving AIs — as OpenAI acknowledge in their own Preparedness Framework, could be an incredibly dangerous development. The risk is that you initiate an intelligence explosion, where AI systems rapidly become superintelligent through recursive improvement. AI developers could totally lose control of the process, unable to keep up, and given that we have no way to control superintelligent AIs, this could spell disaster.

We wrote a longer explainer on what an intelligence explosion means here:

The Autoswitcher and the Sycophant

As part of the deployment of GPT-5, OpenAI announced that they’d cut down the number of options users have to interface with ChatGPT, removing a bunch of older models (4o, o3, etc.) and allowing a system called an autoswitcher to take user prompts and determine whether to provide a response from OpenAI’s best AIs or from a weaker, cheaper model.

On launch day, it turned out this autoswitcher was broken, and it just gave people responses from the weaker AIs. This, along with marginal improvements on some benchmarks of AI capabilities, led to an underwhelming response from users.

This negative reception was compounded by the removal of 4o in particular. 4o was known to have sycophantic tendencies, often telling users only what they want to hear. There was a significant backlash against its removal on Reddit: as it turned out, large numbers of users had become emotionally attached to the AI. OpenAI’s CEO Sam Altman eventually backed down and said that OpenAI would keep 4o, for now, on the paid version of ChatGPT.

OpenAI’s inability to actually de-deploy even what’s now a relatively weak AI raises concerns about their ability to pull future, more powerful AIs in the event that they cause significant damage.

AI Time Horizons are Still Growing Exponentially

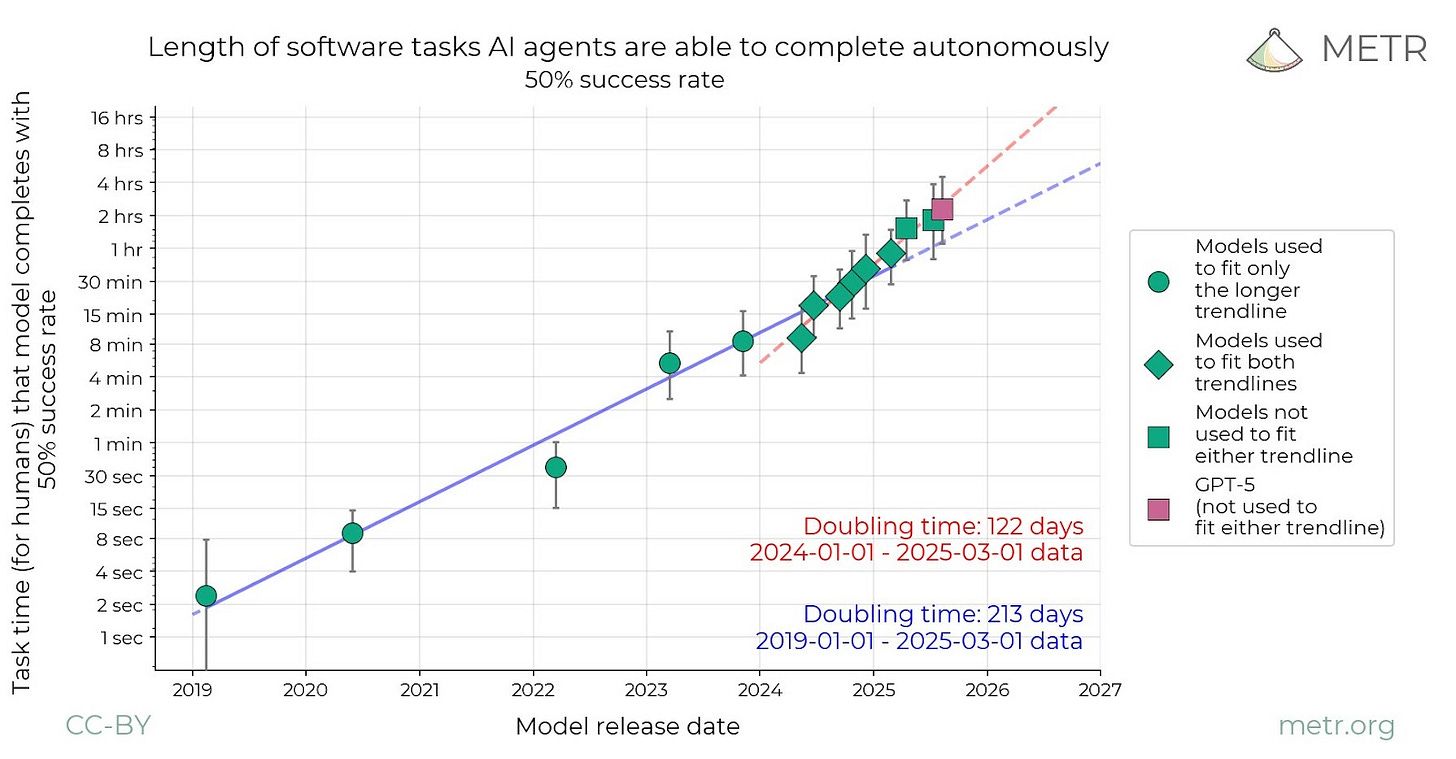

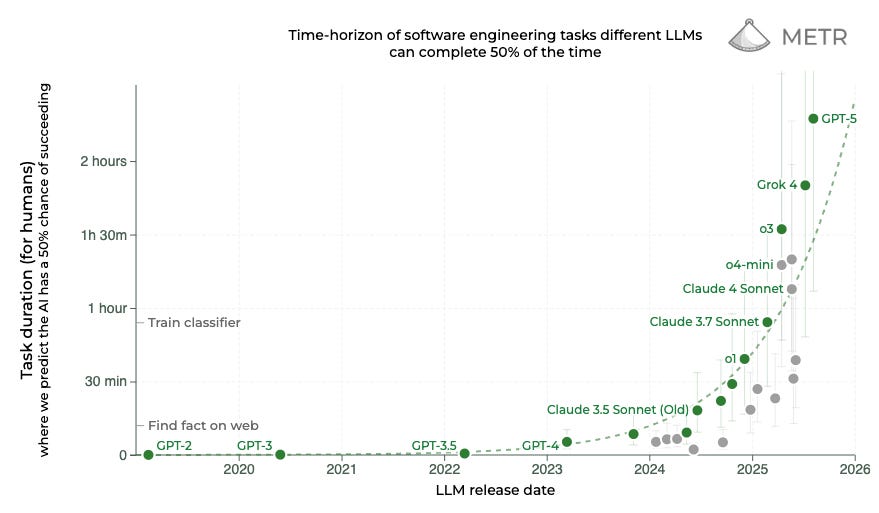

In March, METR, a research organization that studies AI capabilities, reported a crucial finding: over the last 6 years, the “time horizons” of AIs have been growing exponentially, doubling roughly every 7 months.

An AI’s time horizon is the length of task, measured by how long it takes a skilled human, that the AI can complete at a given success rate. In essence, METR got skilled humans and AI systems to attempt the same tasks under similar conditions. They then measured how long the humans took, and how the AIs’ success rate varied with that human completion time. A 2-hour time horizon at a 50% success rate means that the AI succeeds in about 50% of tries on tasks that take skilled humans 2 hours to do.

This is easy to get confused about, so we reiterate that the time corresponds to how long it takes humans to do those tasks. AIs can do things much faster than humans, but on long-horizon tasks, those that take humans a long time to do, the AIs’ success rate falls off.
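To make the definition concrete, here is a minimal sketch in Python. It assumes that an AI's success rate falls along a logistic curve in the logarithm of the human task time. That is an illustrative modelling assumption, not a description of METR's exact procedure. The function name and the `steepness` parameter are hypothetical, and the 120-minute horizon is simply the 2-hour example used above.

```python
import math

def success_probability(task_minutes, horizon_minutes, steepness=1.0):
    """Illustrative chance that an AI completes a task that takes a
    skilled human `task_minutes` to do.

    Assumes a logistic curve in log2(task length). `steepness` is a
    made-up parameter controlling how sharply success falls off.
    """
    # How many doublings longer (or shorter) than the horizon the task is.
    doublings_past_horizon = math.log2(task_minutes / horizon_minutes)
    return 1.0 / (1.0 + math.exp(steepness * doublings_past_horizon))

HORIZON_MINUTES = 120  # the 2-hour, 50%-success example from the text

for human_minutes in (15, 60, 120, 240, 960):
    p = success_probability(human_minutes, HORIZON_MINUTES)
    print(f"task taking a human {human_minutes:>4} min -> AI success ~{p:.0%}")
```

By construction, the curve passes through exactly 50% at the horizon itself. Tasks that take humans less time sit above 50%, and tasks that take humans longer sit below it.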

METR have published their research on GPT-5, and notably found that GPT-5 has a time horizon that is on-trend.

Concretely, what they found is that GPT-5 can do coding tasks with a 50% success rate that take skilled humans 2 hours and 15 minutes to do.

METR says that this appears to be on-trend, and we can also see this by glancing at their graph. They also note that it appears to be a closer fit to the more recently observed 4-month time-horizon doubling time, which has been seen since the launch of OpenAI’s o1-preview AI last September.

To spell that out: if the trend continues to hold, we should expect to see AIs with time horizons of not 2 hours but 4 hours in 4 months, 8 hours 4 months after that, then 16 hours, and so on.
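The arithmetic behind that projection can be sketched in a few lines of Python. This is a simple extrapolation that assumes the doubling time stays constant, which is an assumption and not a prediction. The function names are hypothetical, and the starting point is rounded to 2 hours as in the paragraph above.

```python
import math

def projected_horizon_hours(current_hours, months_ahead, doubling_months):
    """Time horizon after `months_ahead`, if it keeps doubling every
    `doubling_months` months."""
    return current_hours * 2 ** (months_ahead / doubling_months)

def months_until(target_hours, current_hours, doubling_months):
    """Months needed for the horizon to grow from `current_hours` to
    `target_hours` at a constant doubling time."""
    return doubling_months * math.log2(target_hours / current_hours)

# Rounded 2-hour starting point with the 4-month doubling time.
for months in (4, 8, 12):
    print(months, "months:", projected_horizon_hours(2, months, 4), "hours")
# 4 months: 4.0 hours
# 8 months: 8.0 hours
# 12 months: 16.0 hours

# Same starting point under the slower 7-month doubling time, for comparison.
print(round(projected_horizon_hours(2, 12, 7), 1), "hours after 12 months")
```

The `months_until` helper runs the same calculation in reverse. For example, `months_until(16, 2, 4)` asks how long it takes to go from a 2-hour to a 16-hour horizon at a 4-month doubling time.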

So AIs are rapidly getting better at coding, so what?

Well, the coding tasks they're measuring this on have particular relevance to AI R&D, drawing in large part from the RE-Bench and HCAST benchmarks. RE-Bench is specifically relevant to AI engineering implementation, including tasks like optimizing a kernel or fine-tuning GPT-2.

AI engineering/coding is only one part of the AI R&D loop, but the fact that AIs are rapidly getting better at the sort of tasks needed for this could have significant implications.

In the AI 2027 scenario forecast by top experts Daniel Kokotajlo et al., there is one particularly pivotal moment. This is when the leading AI company in the scenario, dubbed "OpenBrain", develops superhuman coders.

Superhuman coders are AIs that can do any coding tasks that the best AGI company engineer can do, while being much faster and cheaper. AI time horizons and their growth rate could be crucial for when this point might be hit.

Despite coding only being one part of the AI R&D loop, AI 2027's authors estimate that having superhuman coders would multiply the research velocity of the leading AI company by 5x. This is partly because coding would be done so much faster, so that many more small experiments can be run, and partly because superhuman coders would likely have some other qualities of AI company researchers and engineers besides coding (e.g. "research taste").

Something like a 5x speedup of AI R&D would be huge, and AI 2027's authors estimate this cuts the expected time until the next milestone ("Superhuman AI Researchers") from years to just a few months. From there, things only accelerate further: an intelligence explosion occurs.

This intelligence explosion of AIs improving AIs rapidly leads to artificial superintelligence, AI vastly smarter than humans. Importantly, nobody knows how to ensure that such AI would be safe or controllable. Experts have warned that it could result in human extinction.

In summary, GPT-5's AI time horizon confirms that the trend of AIs rapidly improving on coding is still holding at the moment. Exponentials are notoriously difficult to form intuitions about. What seems like no big deal one day can become a massive deal a short period of time later.

The International Olympiad in Informatics

This week, OpenAI announced that one of their AI systems achieved a gold-medal score at the International Olympiad in Informatics, the world’s most prestigious coding competition for high schoolers.

Only a few weeks ago, we wrote about how OpenAI and Google DeepMind had done the same with the International Mathematical Olympiad, the equivalent contest in mathematics.

Last year, OpenAI built a specialized system to compete in the IOI, and it “only” got a 49th percentile score (49th percentile against these students is still very impressive). Their new one placed 6th out of over 300 contestants. This is a huge jump.

Notably, their new result was achieved without training a custom model for the contest. Instead, OpenAI used an ensemble of general-purpose AIs. This result adds to a growing mountain of evidence that AI capabilities are advancing rapidly across the board.

More AI News

Here are some other developments we spotted.

AI godfather and Nobel Prize winner Geoffrey Hinton went on CNN to warn about the extinction threat to humanity posed by AI. He said that “we’ll be toast” if we don’t figure out how to make it safe.

Brian Tse wrote an important article in Time on the state of AI safety in China and the potential for collaboration with the United States on AI risks.

The Future of Life Institute launched a new site visualizing 13 interactive expert-forecast scenarios showing how AI could transform the world.

As the competition for talent in AI development heats up, fuelled by Mark Zuckerberg’s hiring spree, OpenAI is reportedly giving some employees multimillion-dollar bonuses.

Take Action

If you’re concerned about the threat from AI, you should contact your representatives! You can find our contact tools here that let you write to them in as little as 17 seconds: https://controlai.com/take-action

Thank you for reading our newsletter!