How Could Superintelligence Wipe Us Out?

Where we are now, where we might go, and how it could end.

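There’s growing agreement among experts that the development of artificial superintelligence poses a significant risk of human extinction, perhaps best illustrated by the 2023 joint statement by AI CEOs, godfathers of the field, and hundreds more experts:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”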

But how would this happen? How does one go from where we are today to human extinction?

Here we seek to briefly explain one way it could happen, drawing on the AI-2027 scenario forecast (Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean), which is widely regarded as the best-researched and most detailed work on this question. If you want to see our interview about AI 2027 with one of its coauthors, Eli Lifland, you can find it here.

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

The Present

AIs already rival and surpass humans in a number of domains. They get gold medals in the most prestigious high-school-level coding and math competitions, they can predict structures and interactions among proteins, and they surpass humans on image recognition benchmarks. Their general knowledge far exceeds anything a human could memorize. Computer-use AI agents are starting to become useful.

Recently, OpenAI has developed an AI that they say could meaningfully assist a novice in creating a known biological threat, and Anthropic has revealed in their Threat Intelligence report that their AIs have already been weaponized by bad actors to perform sophisticated cyberattacks.

Development is advanced mainly by 3-5 companies, including OpenAI, Anthropic, and Google. These companies are engaged in a race to build artificial superintelligence - AI that is vastly smarter than humans, across all domains.

“Humanity is close to building digital superintelligence, and at least so far it’s much less weird than it seems like it should be.

…

OpenAI is a lot of things now, but before anything else, we are a superintelligence research company.”

It might surprise you that despite the CEOs of these companies having warned of the extinction threat posed by AI, which comes from artificial superintelligence, their companies are racing ahead anyway, continuously releasing new AIs that advance the frontier. There are multiple reasons why they’re racing. Here are two:

Ideological: They want to build superintelligence so that they can create their ideal world, and they worry that if they don’t build it first, someone else will, and the future will be determined by those other (bad) people.

Economic: We’re seeing tremendous amounts of resources deployed in AI infrastructure investments (e.g., Microsoft plans to spend $80B on datacenters this year, the $500B Stargate plan). In order to be attractive to investors, and just to stay in the game, it’s necessary for these companies to be seen as leaders. And investors, of course, want to see returns.

Importantly, this racing dynamic means that AI companies are incentivized to prioritize rapid development over taking the time to ensure their systems are actually safe.

Many experts believe that artificial superintelligence could be developed in the next 5 years.

The Problem

It might not be intuitively obvious that racing to build superintelligence could result in the end of the human species.

The problem is that nobody knows how to ensure that AIs smarter than humans will be safe or controllable.

Unlike normal code, modern AIs are not designed by programmers. They’re grown, more like biological creatures. Billions of numbers are dialed up and down by a simple learning algorithm that processes their training data, and from this emerges a form of intelligence. Nobody really knows how to interpret what these numbers mean. Some scientists are working on it, but the field is nascent.

These AIs learn goals, but we don’t have any way to actually set the goals or verify them. Recently, frontier AIs have been shown to exhibit self-preservation tendencies. In one example, OpenAI’s o3 was found to sabotage shutdown mechanisms in tests, even when explicitly told to allow itself to be shut down.

This is known as the alignment problem, which we explain here:

So superintelligence might be developed in just the next few years, and we don’t have any way to ensure that it’s safe, controllable, or that it wants to pursue our goals.

Research on how to ensure that it is safe is in a lamentable state. The Future of Life Institute’s independent panel of AI experts recently found that none of these AI companies has anything like a coherent plan for how they'll prevent superintelligence from ending in disaster.

Acceleration

The most plausible direct path to superintelligence is via what’s called an intelligence explosion. In this scenario, an AI company builds an AI that is so capable that it can meaningfully accelerate the company’s research velocity towards building even better AIs, which in turn accelerate that research even more.

We wrote an explainer on what an intelligence explosion is here:

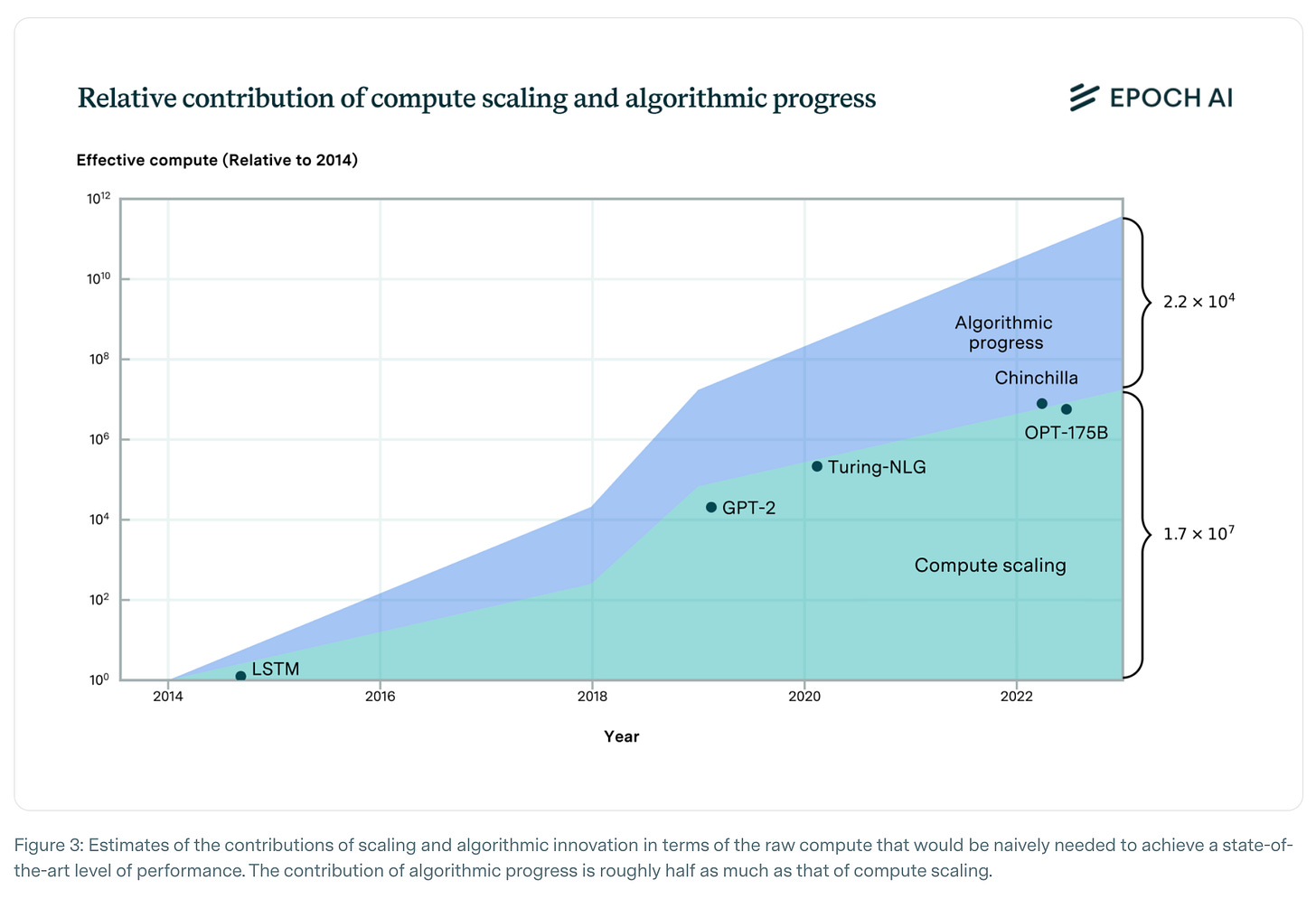

Epoch finds that historically the contribution of algorithmic progress, the process of finding ways to improve AIs via innovation, is about half that of simply scaling the amount of computation used to train AIs — a sizable contribution. They say that the amount of algorithmic progress occurring is equivalent to a doubling of computational power every 5 to 14 months.
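To get a feel for what that doubling rate means, here is a minimal sketch in Python that converts a doubling time into an equivalent yearly multiplier. The function name is ours, for illustration only; the 5 and 14 month figures are the Epoch range quoted above.

```python
def yearly_multiplier(doubling_months: float) -> float:
    """Factor by which effective compute grows in 12 months,
    given how many months one doubling takes."""
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    return 2 ** (12 / doubling_months)


if __name__ == "__main__":
    # 5 and 14 months are the ends of the range quoted from Epoch.
    for months in (5, 14):
        print(f"doubling every {months} months -> "
              f"about {yearly_multiplier(months):.1f}x per year")
```

Read this way, algorithmic progress alone is worth roughly a 1.8x to 5.3x gain in effective computation each year.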

An intelligence explosion would mean that this rate of progress would massively increase.

AI 2027 conceptualizes this critical moment as when “superhuman coders” are achieved. These are AIs that are as strong at coding as the best coders at an AGI company. The authors estimate that this would result in a 5x speed-up in research velocity of the company. In other words, what would have previously taken 5 years to do would take just a year.
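The arithmetic behind that claim can be sketched in a few lines of Python. The helper name is hypothetical, and the final loop applies a simplifying assumption of ours that is not taken from AI 2027: that algorithmic progress scales in direct proportion to research velocity.

```python
def calendar_time(work_at_human_pace: float, speedup: float) -> float:
    """Calendar time needed for research that would take
    `work_at_human_pace` units of time without AI assistance."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return work_at_human_pace / speedup


if __name__ == "__main__":
    speedup = 5  # AI 2027's estimate for superhuman coders
    print(calendar_time(5, speedup), "year(s) for 5 years of research")
    # Assumption (ours): doubling times shrink in proportion to the speed-up.
    for months in (5, 14):
        print(f"{months}-month doubling -> "
              f"{calendar_time(months, speedup):.1f} months")
```

Under that assumption, a doubling that historically took 5 to 14 months would take roughly 1 to 3 months.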

Once the intelligence explosion is in motion, development would only accelerate further. AIs that are as capable as the best researchers could be developed in just a few months, and superintelligence would likely follow shortly after.

As AIs accomplish research projects in fractions of the time it would take humans to do them, it would be incredibly easy to lose control and oversight of the process. If the AIs building better AIs begin with goals that are incompatible with ours, they would be incentivized to try to bake their goals into each next generation, and to deceive us about how safe they are. There is reason to believe they would begin with such goals: current AIs already exhibit self-preservation tendencies, whereas we would like to be able to replace them, and we cannot currently set or verify the goals of modern AIs.

Okay, but if we can’t ensure control of AIs smarter than humans, wouldn’t the AI companies just stop, or slow down? Wouldn’t they invest more in safety research? This is somewhat doubtful, given that they’re locked in a racing dynamic. Those that slow down would simply “lose” the race to less responsible actors.

What about governments, would they step in? Maybe, and supporting this is a focus of our work here at ControlAI. But this is not guaranteed. With AI CEOs Dario Amodei and Sam Altman’s calls for an AI arms race with China, AI companies are already laying the groundwork for a tremendous get-out-of-jail-free card.

In a scenario where the AI race expands from a race between private AI companies to a full-throttle US-China race to control the future, governments could become increasingly reluctant to hit the brakes.

Takeover and Extinction

The development of an artificial superintelligence that doesn’t share our goals or care about us would raise two questions.

Why would it try to take over?

Whatever goals it actually does end up with, it would likely be useful to acquire more resources and power in order to achieve those goals. Humans would pose a potential obstacle here, at least initially having the ability to shut down the superintelligence. In order to remove this threat, it would be necessary to, at a minimum, disempower us and take control. We would be an obstacle to be overcome.

Given that it would not care about us, in its quest for more resources, we could end up like an anthill on a building site. The optimum percentage of the planet covered in solar panels, temperature of the atmosphere, or allocation of energy for humans seems very unlikely to be the same as for AIs.

How would it try to take over?

There are a number of ways that it could happen, and the methods that we mere mortals can think of wouldn’t necessarily be the methods chosen by an entity much more intelligent than we are. The driving intuition here is that intelligence grants power, and if you are facing a much more intelligent being than yourself, you are probably going to lose.

However, we might guess that one useful condition for takeover would be that humans would no longer be necessary for the operation of the parts of the economy that AI infrastructure relies on. This would include things like mining, maintenance of power grids, energy production, and so on.

For this reason, it would likely be desirable for a misaligned superintelligence to first build the capability to automate large parts of the economy through robotics. This could happen automatically through economic incentives. In AI 2027 it happens in the context of a US-China arms race.

Once this has been achieved, getting rid of us could be as simple as designing and deploying a powerful bioweapon, and dealing with any survivors with killer robots, which is roughly what happens in AI 2027.

Thank you for reading this article. If you found it useful, it would be great if you shared it with friends!

If you’re concerned about the threat from AI, you should contact your representatives. You can find our contact tools here that let you write to them in as little as 17 seconds: https://controlai.com/take-action.

We also have a Discord you can join if you want to help humanity stay in control!

It’s not the "super intelligence" that will end most of humanity; we will be long gone by then. It’s the self-checkout, the robot floor cleaner that also does inventory, the robot server in your restaurant, the robot fry cook, the AI teacher, the self-driving truck and taxi, the AI accountant, lawyer, police officer! Every one of those takes someone’s job. They already have AI road equipment, AI farms, AI doctors, surgeons and dentists! What will YOU do to put food on your table? Any job you can think of can be done by a robot linked to AI! Businesses will collapse due to a customer base that can no longer afford luxuries like food and housing! You can’t pay the tax on your house? The state will gladly take it! AI and robotics must be limited BY LAW! People must be allowed to earn a living.

If AI does our thinking for us, what is the incentive to learn, pass exams, or work for degrees? With AI running the world, mankind runs the risk of becoming lazy, unmotivated, bored, angry and dissatisfied. A dangerous combination. Stop concentrating on the problems of AI and think of what it will do to the human race.