Red Lines

“Governments must act decisively before the window for meaningful intervention closes.”

Welcome to the ControlAI Newsletter! With the release of “If Anyone Builds It, Everyone Dies”, our new campaign, and now a tremendous coalition of leaders and experts backing a call for global red lines on AI, September is looking to be one of the biggest months in the fight to keep humanity in control. We’re here to keep you updated!

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

Table of Contents

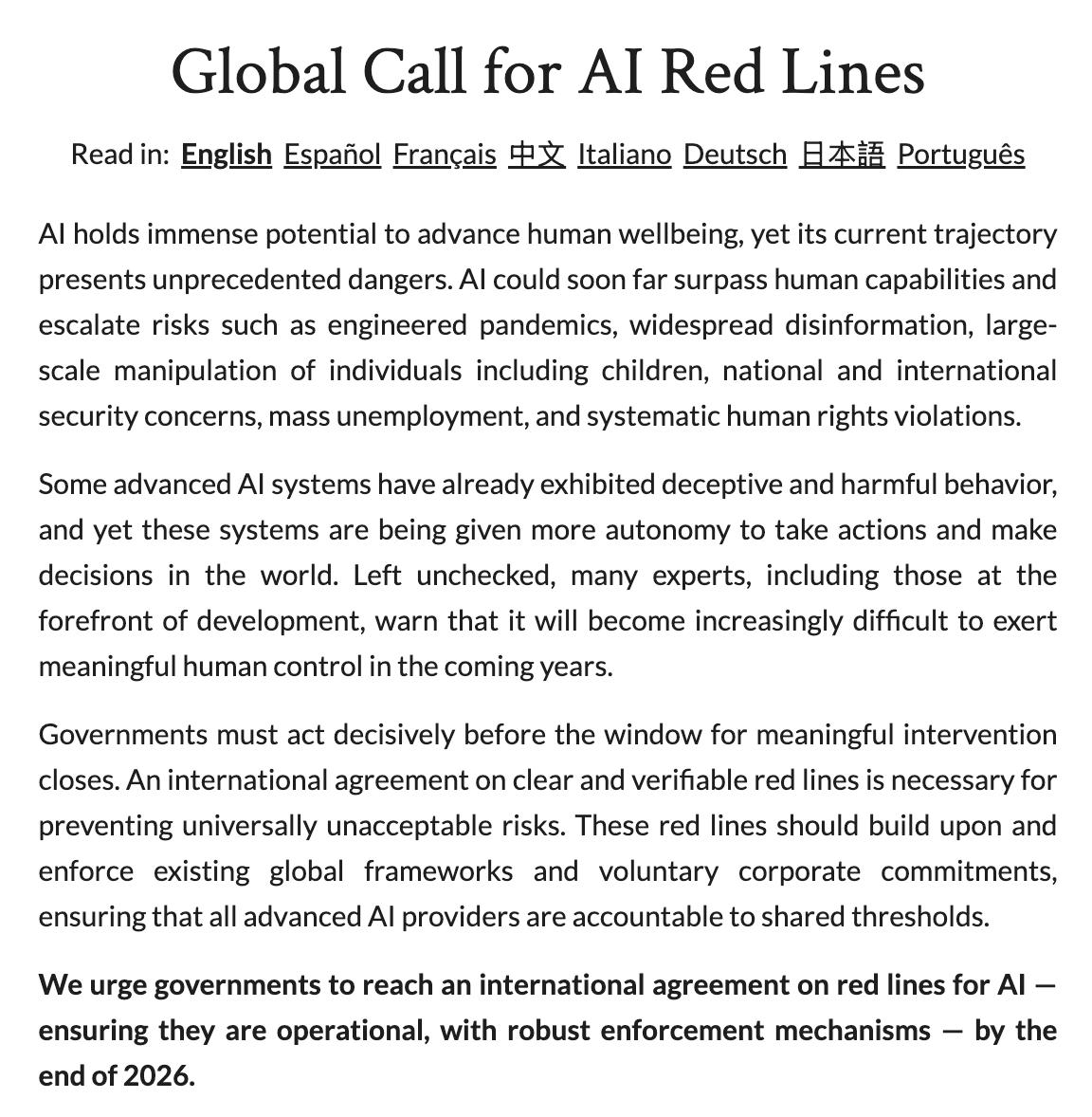

The Global Call for AI Red Lines

On Monday, Nobel Peace Prize laureate Maria Ressa announced a new initiative at the UN General Assembly, the Global Call for AI Red Lines:

“We urge your governments to establish clear international boundaries to prevent universally unacceptable risks for AI.”

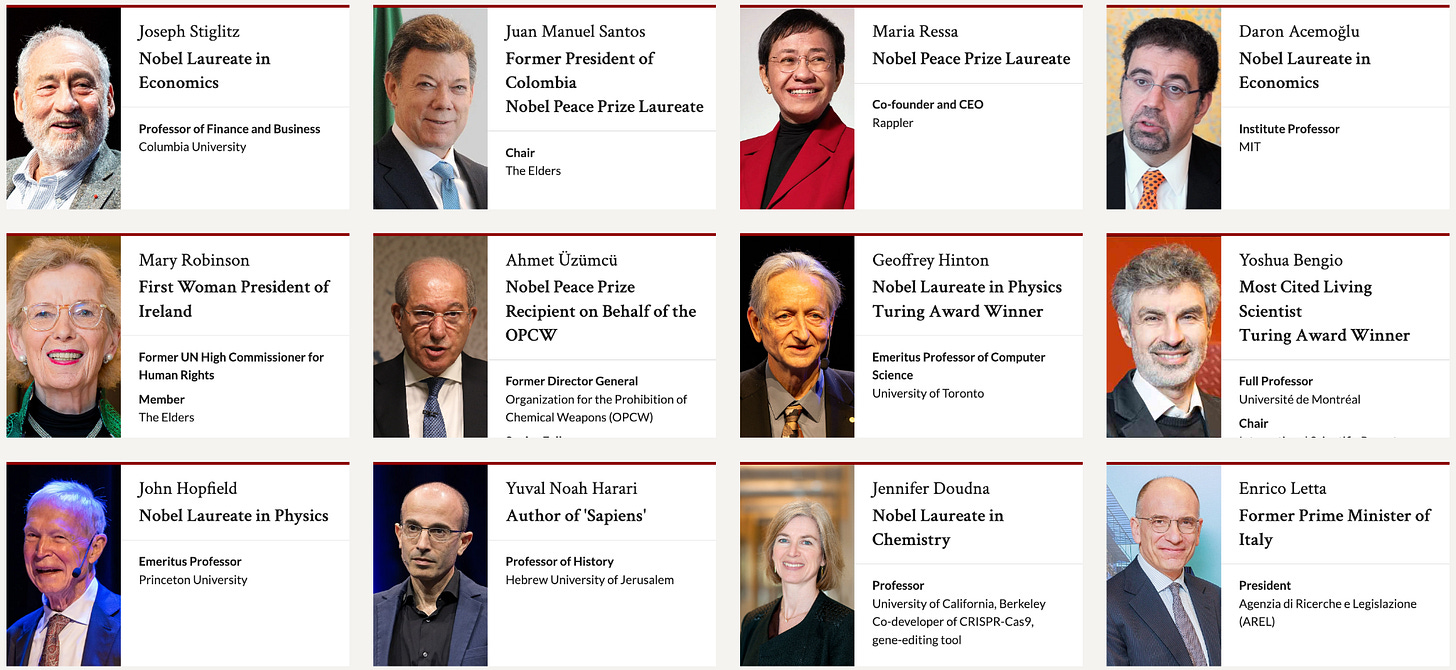

This is a joint statement backed by an unprecedented coalition. Supporters include 9 former heads of state and ministers, 10 Nobel laureates, 70+ organizations, and over 200 public figures and experts.

The statement warns of the unprecedented dangers posed by the current trajectory of AI development, highlighting that AI could soon far surpass human capabilities, and that AIs already exhibit deception and harmful behavior.

Left unchecked, many experts, including those at the forefront of development, warn that it will become increasingly difficult to exert meaningful human control in the coming years.

The call is clear: Governments should reach and enforce international red lines for AI by the end of 2026. That’s 462 days, as of today.

It’s hard to overstate the scale of the coalition assembled in favor. Here are just a small fraction of the signatories. They include the former presidents of Ireland and Colombia, the most cited living scientist, a former prime minister, and 7 Nobel laureates!

Industry insiders are backing it too. 34 employees at top AI companies have signed in support. This includes OpenAI Co-founder Wojciech Zaremba, OpenAI’s Chief Scientist Jakub Pachocki, Anthropic’s Chief Information Security Officer Jason Clinton, Google’s Director of Engineering Peter Norvig, Microsoft Research’s Senior Principal Researcher Kate Crawford, and many more!

At ControlAI, we’re also proud to support this initiative as an official partner. To prevent the worst risks of AI, we need global red lines on AI development.

It’s been amazing to see the coverage this initiative has gotten, with over 300 media mentions, including articles in top news outlets.

Which Red Lines?

The statement doesn’t specify what the red lines should be. This raises the question: what limits on AI should countries set? At ControlAI, our focus is squarely on the extinction threat to humanity posed by artificial superintelligence, meaning AI vastly smarter than humans.

We recently launched a new campaign to ban superintelligence. With our new site, we’ve created a central hub where concerned citizens, civil society organizations, and public figures can learn about the extinction risk posed by superintelligence and join the call to prohibit it.

So if you want to learn more about why experts are so concerned, check out our campaign site:

https://campaign.controlai.com

Given the danger posed by superintelligence, we believe that one clear red line should be drawn: the development of artificial superintelligence should be prohibited globally.

However, in order to ensure this, we believe a number of other red lines and policies are necessary. A simple prohibition is a positive step, but it needs to be clearly enforceable.

Last year, we detailed what we think these red lines should be in our comprehensive policy plan for humanity to survive AI and flourish, A Narrow Path.

In addition to prohibiting the development of artificial superintelligence, we propose the following:

1. Prohibit AIs capable of breaking out of their own environment

The ability of an AI to break out of its environment, e.g., by hacking, would undermine all other safety guarantees and security measures around AIs. Prohibiting the development of AIs capable of this would also remove the root cause of a common expert concern, the ability of AIs to self-replicate, since AI development and interventions would be required to block a self-replicating model from escaping to systems not controlled by the company operating the AI.

2. Prohibit the development and use of AIs that improve other AIs

AIs improving AIs is the clearest way for AI systems or their operators to bypass limits placed on their general intelligence. This process could initiate a dangerous intelligence explosion, quickly enabling runaway feedback loops that bring an AI system from a manageable range to levels of competence and risk far beyond those intended.

3. Require a valid safety case for deployment of AI systems

For sufficiently powerful AI systems, we need to know, before training them, let alone running them, that they will not cause a catastrophe. AI developers should show through the use of safety cases – the standard high-risk industry method – that their AI systems are bounded, providing evidence that the AIs they intend to develop are not dangerous, and will not breach prohibited capability levels.

We propose that these should be implemented both nationally and internationally, through domestic regulation and international treaty. We provide clear ways that these could be enforced.

In addition to these red lines, we also propose limits on the amount of compute used for training AIs, in order to keep capabilities at safe levels, as well as the establishment of three international institutions to enforce and facilitate safe AI development.

You can read more about why we think these measures are necessary and how they could be enforced here: https://www.narrowpath.co

Next Steps

It’s great to see that such a coalition has been assembled in support of what’s ultimately a very common-sense idea: there are certain things that AIs must never do, and these should be prevented. Dangerous AI development anywhere endangers everyone everywhere. Therefore, international agreement and cooperation are needed. There are surely some risks that even geopolitical rivals can agree we need to avoid. For example, it’s in no country’s interest for humanity to go extinct.

This must be followed through with concrete action by policymakers and world leaders. With experts saying that artificial superintelligence could be built within the next 5 years, there is no time to waste.

In order to help make this happen, we’re asking you to reach out to your representatives and let them know that this is important to you. We have contact tools on our website that cut the time of doing so down to mere seconds. Thousands of citizens have already used them. Check them out here!

https://controlai.com/take-action

More AI News

California Senate Bill 53 Update

A couple of weeks ago we wrote about the draft AI transparency bill that was making its way through California’s legislature.

Since then, it’s been voted through by California’s Assembly and Senate and sent to Governor Newsom’s desk to either approve or veto.

Yesterday, Governor Newsom hinted that he would sign it, saying he’d sign a major piece of AI legislation that “strikes the right balance”.

Senator Scott Wiener, who wrote the bill, said that Newsom may have been referring to SB 53, but that he didn’t want to be presumptuous. Listening to Newsom’s remarks, though, it seems pretty clear that he’s referring to SB 53. We also found a prediction market where it appears that Newsom’s comments increased the implied probability that SB 53 would become law from 79% to 98%.

If Anyone Builds It, Everyone Dies

In some more positive news, Eliezer Yudkowsky and Nate Soares’ new book “If Anyone Builds It, Everyone Dies”, released last Tuesday, has made the New York Times bestseller lists.

Last week, we wrote about some of the amazing reviews it’s gotten from leading experts in AI and beyond.

The clue to what the book is about is in the name. “If Anyone Builds It, Everyone Dies” clearly explains the extinction threat posed by the development of artificial superintelligence.

We think that informing the public about this danger is one of the most pressing issues, and have made it the focus of our new campaign. In order to address the problem, people have to know about it! So we think it’s great that so many are reading the book.

If you want to read it too, you can get it here:

https://ifanyonebuildsit.com

Take Action!

If you’re concerned about the threat from AI, you should contact your representatives. Our contact tools let you write to them in as little as 17 seconds: https://controlai.com/take-action

We also have a Discord you can join if you want to connect with others working on helping keep humanity in control, and we always appreciate any shares or comments — it really helps!
