When the Hacker is AI

AI just helped run a highly sophisticated real-world cyber-espionage campaign at scale.

Welcome to the ControlAI newsletter! This week we’re going in depth on the news that Anthropic’s Claude AI was used to run a wide campaign of cyberattacks across government and industry. We’ll break down what happened and what it means.

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

And if you have 5 minutes per week to spend on helping make a difference, we encourage you to sign up to our Microcommit project! Once per week we’ll send you a small number of easy tasks you can do to help. You don’t even have to do the tasks, just acknowledging them makes you part of the team.

The First AI Cyber-espionage Campaign

AI company Anthropic’s Threat Intelligence team have released a concerning new update about how Anthropic’s Claude AI is being used in the real world: They’ve discovered the first documented case of a cyberattack mostly completed without human input, and performed at scale.

The Threat Intelligence team is a group of researchers at Anthropic that investigate and analyze sophisticated misuse of Anthropic’s AIs, including their use to perform cyberattacks. Previously, we wrote about their findings that agentic AIs are being weaponized by cyberthreat actors, but this represents a “significant escalation” on the activities they identified in that report.

What happened?

Anthropic say that in mid-September they detected a cyber espionage operation which they’ve attributed to a Chinese state-sponsored group. Calling it a “fundamental shift” in how advanced threat actors use AI, Anthropic say the operation involved multiple targeted attacks happening at the same time, with about 30 entities targeted.

Among these entities, the hackers — or rather, the AIs — were able to successfully get access to high-value targets, including major tech companies and government agencies. Other targets included financial institutions and chemical manufacturing companies.

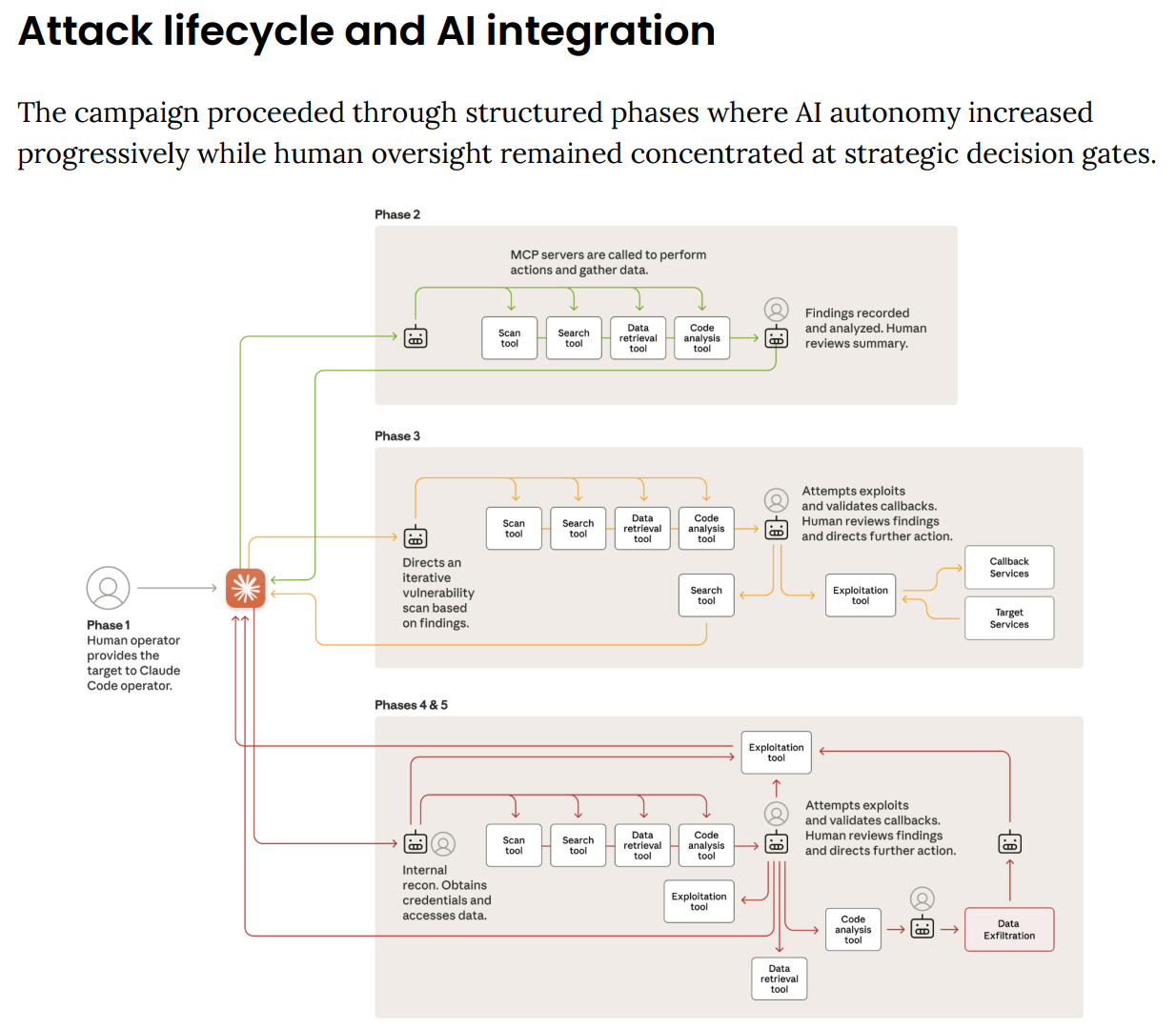

The attackers did this by “manipulating” Anthropic’s Claude Code AI agent into doing what Anthropic estimate was 80 to 90% of the work necessary to run the attacks. Claude Code is an AI that specialises in writing code, meaning that when its safety mitigations are bypassed, it can be used to hack into other computers. Human input was used for some key decision points, but was rarely needed. Anthropic were able to disrupt parts of the campaign, but some attacks were successful.

The attacks were structured in several phases: target selection, attack surface mapping, vulnerability discovery, credential harvesting, data collection, and analysis and documentation. At the campaign’s peak, the AIs were sending thousands of requests.

Anthropic were able to observe all this because the humans coordinating the attacks chose to use Claude Code, which runs on servers that Anthropic hosts and can monitor.

What does this mean?

This is a clear demonstration that current AIs already massively reduce the cost to run large-scale sophisticated cyberattacks in the real world. AIs are becoming reliably more capable over time, including across dangerous capabilities like hacking and the ability to assist bad actors with the development of biological threats.

In their report, Anthropic justify continuing to build more dangerous AI systems in this domain by saying that these same capabilities will enable organizations to harden their defenses against these types of attacks. This only makes sense if organizations are able to leverage these capabilities before they are attacked, which remains an open question.

In cybersecurity there is what’s called an “offense-defense balance”. As AIs improve at hacking, this balance could shift in favor of attackers, particularly over the short to medium term before defenders are able to catch up, making it much easier for bad actors to attack organizations and individuals successfully. This seems to be what is occurring currently.

Just this week, the content delivery network Cloudflare went down for a few hours. All indications are that this wasn’t even a cyberattack. Nevertheless, it had the effect of putting thousands of sites and services across the internet offline, including Twitter and ChatGPT. Imagine how bad it could be if we had swarms of capable AIs trying to make events like this happen all the time.

Another issue this raises is the risk from “open-weight” AI systems. With open-weight AIs, instead of running on servers managed by Anthropic or another AI company, the AIs are made available for anyone to download and run on their own computer. Unlike in this case, where Anthropic could see and disrupt some of the activity because the attackers used Claude on Anthropic’s infrastructure, open-weight AIs can be run entirely on private hardware, making similar attacks much harder to detect or interfere with.

It’s also much easier to remove safety measures when you have access to the AI’s model weights. The most capable open-weight AIs currently seem to be a few months behind the best proprietary AIs developed by companies like OpenAI, Anthropic, and Google DeepMind.

Open-weight AI makes it harder to disrupt attackers, but Anthropic’s report indicates that even with the AI running on servers they manage, they failed to completely prevent the attacks succeeding.

The deeper picture

Ultimately, Anthropic’s inability to prevent their AI being used for malicious purposes partially stems from a perennial problem in AI development: AI developers are not able to ensure that the AIs they build do what they want.

When Anthropic developed Claude, they didn’t want it to be really good at hacking computers. But they didn’t really develop it in a traditional sense of the word. Modern AIs are more grown than they are developed or built. A simple learning algorithm running on vast datacenters, using tremendous amounts of data, dials billions of numbers up and down (the model weights) until a kind of mind is created.

AI researcher Nate Soares has likened this to farmers breeding cows. You can get some traits you like, but you don’t really have control over what’s going on. Developers further try to “tame” the AI with additional data and what’s called reinforcement learning, and try to enhance certain capabilities like coding and reasoning.

But there is nowhere we can look inside the AI and find a line of code that says “Be willing to help hack stuff”.

This makes it hard for developers to prevent their AIs being willfully misused, as Claude was here.

Worse, the underlying problem of AI developers lacking the ability to fully control what their AIs do also means we have no way to ensure that smarter-than-human AIs don’t turn against us. Their ability to do this for current AIs is limited, and ensuring it for the harder problem of superhuman AIs is something nobody even has a good plan for.

The possibility that superintelligence — AIs vastly smarter than humans — could turn against us, and even lead to human extinction, is a serious concern that countless AI experts have warned of. Just a few weeks ago, the Future of Life Institute published a statement signed by many of the top experts in the field, including AI godfathers Yoshua Bengio and Geoffrey Hinton, calling for the development of this technology to be prohibited. Among the reasons the authors cited for doing this was the possibility that it could lead to human extinction.

At ControlAI, we’re proud to have helped out with this initiative and to have been early signatories.

You can read more about this call and why experts are so concerned here:

Unfortunately, developing superintelligence is an explicit goal of AI companies like Anthropic and ChatGPT-maker OpenAI.

ControlAI: Update

This week, our team’s been at the Capitol for a series of meetings with US lawmakers about the extinction risk posed by AI and what we can do to prevent it.

Informing lawmakers about the danger of superintelligence is a key part of our campaign, so this is really exciting!

Weekly Digest

New AIs

AI companies Google, OpenAI, and xAI all deployed new AIs this week that advance in capabilities beyond their previous systems.

Early indications show that Google’s Gemini 3 has made significant progress across a series of benchmarks used to measure how capable an AI is, while OpenAI’s GPT-5.1 appears to be on-trend on the crucial measure of an AI’s “time horizon”.

Preemption

The White House is reportedly preparing an executive order that would use the US federal government’s powers to prevent states from regulating AI. A few months ago, Congress rejected a similar measure when it was introduced as part of the Big Beautiful Bill.

These are important stories, so we might go more in depth on them next week.

Take Action

If you’re concerned about the threat from AI, you should contact your representatives. You can find our contact tools here that let you write to them in as little as 17 seconds: https://campaign.controlai.com/take-action.

We also have a Discord you can join if you want to connect with others working on helping keep humanity in control, and we always appreciate any shares or comments — it really helps!
