The Call to Ban Superintelligence

“We call for a prohibition on the development of superintelligence”

Welcome to the ControlAI newsletter! This week we’re bringing you some very important news: an incredible coalition has called for a prohibition on the development of artificial superintelligence. We’ll explain why this call has been made, who’s signed it, and what you can do to help protect humanity from this threat.

Table of Contents

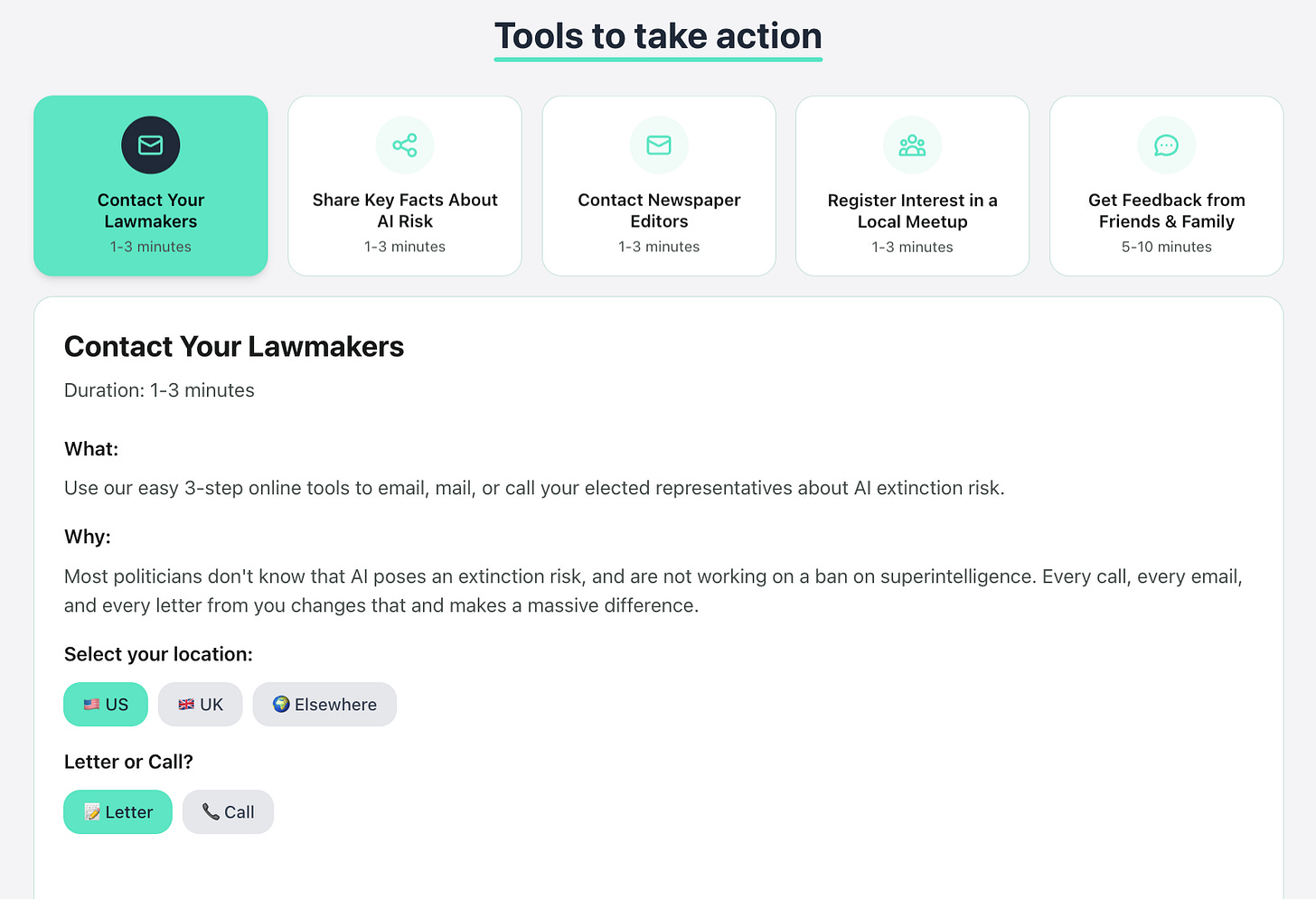

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

And if you have 5 minutes per week to spend on helping make a difference, we encourage you to sign up to our Microcommit project! Once per week we’ll send you a small number of easy tasks you can do to help. You don’t even have to do the tasks, just acknowledging them makes you part of the team.

The Statement on Superintelligence

A huge coalition of experts and leaders just called for a ban on the development of superintelligence — AI vastly smarter than humans.

This includes the two most-cited living scientists, Nobel laureates, faith leaders, politicians, AI researchers, founders, artists, the former President of Ireland, and over 25,000 more signatories.

The joint statement is just 30 words and reads:

We call for a prohibition on the development of superintelligence, not lifted before there is

broad scientific consensus that it will be done safely and controllably, and

strong public buy-in.

In the context accompanying the statement, the organizers write that AI tools could bring significant health benefits and prosperity, but that leading AI companies are working to build artificial superintelligence. Superintelligence threatens to make humans economically obsolete and to bring disempowerment and losses of freedom, civil liberties, dignity, and control. It could even lead to human extinction.

The threat of extinction is a crucial reason why we need to prevent the development of superintelligence. It is one of the key principles behind the campaign on this issue that we recently launched.

At ControlAI, we’re proud to be initial supporters and to have helped the Future of Life Institute with this effort. We managed to get 10 UK lawmakers who’ve supported our UK campaign to back this statement, and we hope many more will follow! Thousands more people have already signed since the initiative launched yesterday.

The Extinction Threat: Explained

Experts have increasingly warned that AI poses an extinction threat to humanity, but why? Why is AI, superintelligence in particular, an extinction threat?

The problem is that nobody knows how to ensure that AIs smarter than humans will be safe or controllable. Despite this, AI companies are racing as fast as possible to develop this technology. Sam Altman recently wrote that OpenAI is “before anything else” a superintelligence research company.

Unlike traditional software, modern AIs are not designed line by line by programmers. They’re grown, more like creatures. A simple training algorithm processes vast amounts of data and dials billions of numbers up and down, and from this a form of intelligence emerges. Nobody really knows how to interpret what these numbers mean. Some scientists are working on it, but they don’t seem to be anywhere near a good understanding.

These AIs can learn goals, but we have no way to reliably set those goals or to check what they are.

In recent months, frontier AIs have been shown to exhibit dangerous self-preservation tendencies. In tests, OpenAI’s o3 was found to sabotage mechanisms designed to shut it down, even when explicitly told to allow itself to be shut down. Tests by Anthropic have revealed that AIs will engage in blackmail to preserve themselves.

This is called the ‘alignment problem’, and it’s the most pressing unsolved problem in AI. Without the ability to set the goals of superintelligent AIs, or otherwise ensure they’re safe and controllable, we have no way to guarantee that they will not turn against us.

In pursuit of whatever goals they do end up with, they could view humans as a potential obstacle to be removed or disempowered. Even if they didn’t concern themselves with us at all, we could be crushed as they transform the world for their own ends, like an anthill on a building site.

Intelligence is, roughly, the ability to solve problems. It gives you the power to get things done, and if you come into conflict with a being much more intelligent than yourself, you’re probably going to lose. There’s a reason why humans have developed the ability to send the world into nuclear winter and chimps haven’t.

Importantly, the risk from superintelligence isn’t a distant, long-term risk; it’s urgent. Many experts believe superintelligence could be developed within the next five years. That’s why it’s so important that experts are speaking out now. There is no time to waste.

Benchmarks of AI capabilities are continually being beaten. OpenAI now assesses that its best AIs are already capable of meaningfully assisting novices in creating biological threats.

Recently, it was found that “AI time horizons”, a measure of AI capability based on how long the tasks an AI can complete would take a skilled human, are growing exponentially, doubling every few months. Exponentials can be treacherous: what seems like no big deal one day can become overwhelming a short time later.

To protect humanity, we need to act now.

The Signatories

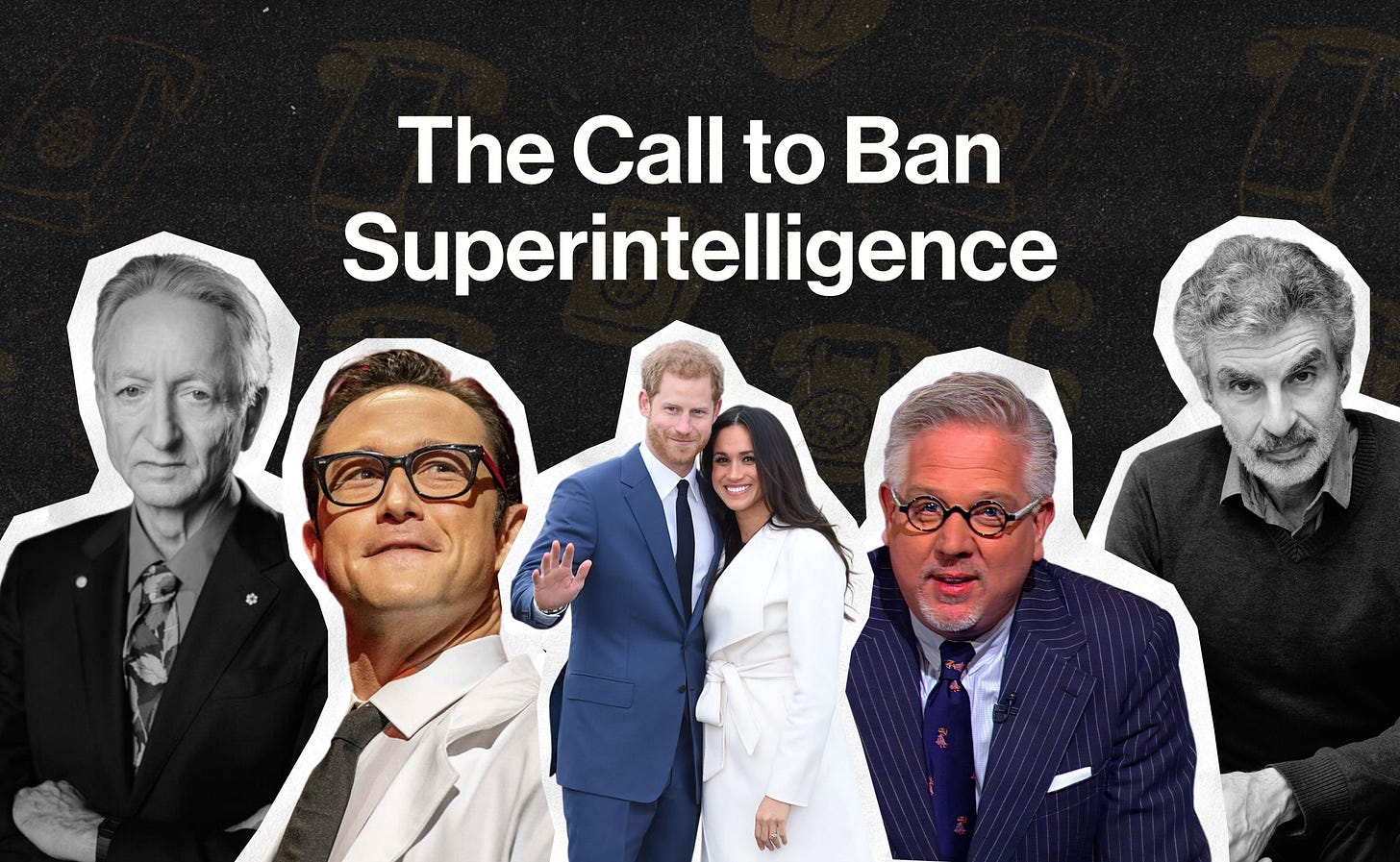

This is a remarkably broad coalition, with signatories including AI godfathers Yoshua Bengio and Geoffrey Hinton, top media voices Steve Bannon and Glenn Beck, and former U.S. National Security Advisor Susan Rice.

A great achievement of the statement is that it brings together folks from all political sides, and is truly global in nature. The development of superintelligence is a threat no matter what your politics are, and its development in any country threatens citizens of every country. This means it’s not enough to just convince one faction or one government to take this risk seriously. Understanding of the problem should be both broad and deep.

Here’s a selection of some of the biggest names who’ve backed the statement:

Geoffrey Hinton — AI godfather; Turing & Nobel laureate; world’s 2nd most-cited scientist

Yoshua Bengio — AI godfather; world’s most-cited scientist; Turing Award winner

Steve Wozniak — Apple co-founder

Grimes — Artist

Sir Richard Branson — Virgin Group founder

Joseph Gordon-Levitt — Actor, Filmmaker, Founder, HITRECORD

Kate Bush — Musician

will.i.am — Rapper, singer, producer, actor

Yuval Noah Harari — Author and Professor, Hebrew University of Jerusalem

Daron Acemoglu — MIT economist; Nobel laureate

Jonathan Berry, Viscount Camrose — former UK Minister for AI & IP; House of Lords

Prince Harry, Duke of Sussex — Co-founder, Archewell

Meghan, Duchess of Sussex — Co-founder, Archewell

Sir Stephen Fry — Actor, director, writer

Mary Robinson — former President of Ireland; former UN High Commissioner for Human Rights

Susan Rice — former U.S. National Security Advisor & UN Ambassador

Adm. Mike Mullen — former Chairman, U.S. Joint Chiefs of Staff

Steve Bannon — former White House chief strategist; host, War Room

Jaan Tallinn — co-founder of Skype; co-founder, Future of Life Institute

Andrew Yao — Professor & Dean, Tsinghua University; Turing laureate

John C. Mather — Nobel laureate in physics; NASA senior astrophysicist

Frank Wilczek — Nobel laureate in physics

George Church — Geneticist; Harvard & MIT professor

Max Tegmark — MIT physicist; FLI president

Gary Marcus — NYU professor emeritus

Glenn Beck — Founder of Blaze Media, political commentator

Lawrence Lessig — Roy L. Furman Professor of Law and Leadership, Harvard Law School

These are just some supporters of the statement, but there are many thousands more! You can find the full list and sign it yourself here: https://superintelligence-statement.org

This is an incredibly important moment. We now have common knowledge about the growing number of experts and leaders who support a ban on the development of superintelligence.

Common knowledge is important because it makes it easier to act. Everyone now knows that in calling for the prohibition of the development of superintelligence, they are not alone. In fact, they are joined by a huge coalition that includes the top AI scientists in the world.

Media Coverage

The statement has received significant media coverage, which is great to see, with articles appearing in Reuters, the Financial Times, Time, the Guardian, the Washington Post, the Associated Press, Bloomberg, CBS, Forbes, People, and more!

Action

The statement is new, but the threat posed by superintelligence has been looming over all of us for years. At ControlAI, our mission has always been to prevent the development of superintelligence and keep humanity in control.

Last year, we developed A Narrow Path, a policy plan for humanity to avoid this danger and flourish. We based it on a key principle: to prevent human extinction from AI, we can prohibit superintelligence globally and build the infrastructure to facilitate safe AI that benefits everyone.

More recently, we developed an AI bill to prevent superintelligence and monitor and restrict its precursors, and presented it at the UK Prime Minister’s office.

We’ve also brought together a coalition of over 80 UK lawmakers who recognize the global and national security threat posed by superintelligence and the need for binding regulation on the most powerful AI systems.

And this summer, we launched a new campaign to prohibit the development of superintelligence. With this campaign, we’re providing a central hub where concerned citizens, civil society organizations, and public figures can learn about the extinction risk posed by superintelligence and help get this risk addressed. Our campaign has received significant support, including from Yuval Noah Harari and Lord Browne of Ladyton — the former UK Defence Secretary.

How You Can Help

On our campaign site, we have easy-to-use civic engagement tools we’ve built that enable anyone to quickly make a difference. For example, using our tools you can send a message to your elected representatives in as little as 17 seconds. You can also use them to get in touch with the editor of your favorite newspaper to tell them it’s important to you that this issue gets the coverage it deserves.

The Statement on Superintelligence provides us with the common knowledge that this risk needs to be prevented, but now it’s time to convert this into action. We need to convince politicians to take a stand on this crucial issue and develop effective rules that keep humanity safe.

You can find our tools here: https://campaign.controlai.com/take-action

Another way you can make a difference is by signing up to our Microcommit project. The way it works is that once per week we’ll send you a small number of easy tasks you can do to help. It just takes 5 minutes, and you don’t even have to do the tasks. Just acknowledging them means you’re part of the Microcommit team! You can sign up here: https://microcommit.io/

And last but not least, if you haven’t yet signed the Statement on Superintelligence, we encourage you to do so! We’ve signed it, and everyone is welcome to join it.

Thank you for reading. If you found this article useful, we encourage you to share it with your friends. It helps!
