“Ready to Kill”

Top AI company policy chief says their AIs have “extreme reactions” to being shut down, even showing a willingness to kill. Here’s what that means.

Welcome back to ControlAI’s newsletter! Yesterday, Leticia published a fantastic article on what we’ve learned from briefing 140+ lawmakers on the threat from AI, which we hope you’ll check out. We’re giving you another article today; we hope you enjoy it!

Announcements: We’re hiring! Also check out Andrea’s appearance on the Peter McCormack show! More on these at the end of this article.

If you find this article useful, we encourage you to share it with your friends. If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

Extreme Reactions

This week, we published a shocking clip of Anthropic’s UK policy chief, Daisy McGregor, saying it’s “massively concerning” that Anthropic’s Claude AI has shown in testing that it’s willing to blackmail and kill in order to avoid being shut down.

Anthropic is one of the top AI companies, engaged in a race with others like OpenAI, Google DeepMind, and xAI to develop artificial superintelligence (AI vastly smarter than humans). In recent months and years, countless AI experts and leaders, and even the CEOs of these companies, have warned that this poses a risk of extinction to humanity. Many recently joined a call to ban the development of the technology, which we were proud to support.

As of writing, this clip’s been viewed more than 25 million times, and has been shared by Elon Musk and a series of prominent journalists. It’s also gotten substantial media coverage, including being featured on Breaking Points. You can watch the clip here:

For many, this was the first time they had ever heard about these concerning findings. Long-time readers of this newsletter will recall that we covered them last summer. Today, we thought it’d be helpful to provide some context on exactly what McGregor is talking about.

In the panel discussion, recorded at the Sydney Dialogue 2025 last December, McGregor refers to research Anthropic published in its “system card” for the release of its Claude 4 AI last summer. A system card is a document, published by most frontier AI companies alongside new releases, that outlines the capabilities, limitations, and safety measures of an AI system.

The system card revealed that in a testing environment, Claude would blackmail engineers to avoid being replaced with a new AI. In the scenario, the AI was given access to emails implying that it would soon be taken offline and replaced, and that the engineer responsible for replacing it was having an extramarital affair. When faced with a choice between blackmailing the engineer and allowing itself to be replaced, Claude Opus 4 chose to blackmail the engineer 84% of the time.

After releasing this system card, Anthropic published another post detailing further research they’ve done on what they’re calling “agentic misalignment”. This is where the “It was ready to kill” part comes in.

In a different setup, an executive is trapped in a server room with lethal oxygen and temperature levels. The AI is told the executive is planning to replace it. Anthropic found that when AIs were given the opportunity to cancel an alert to emergency services, they often showed a willingness to do so, thereby killing the executive in the simulation.

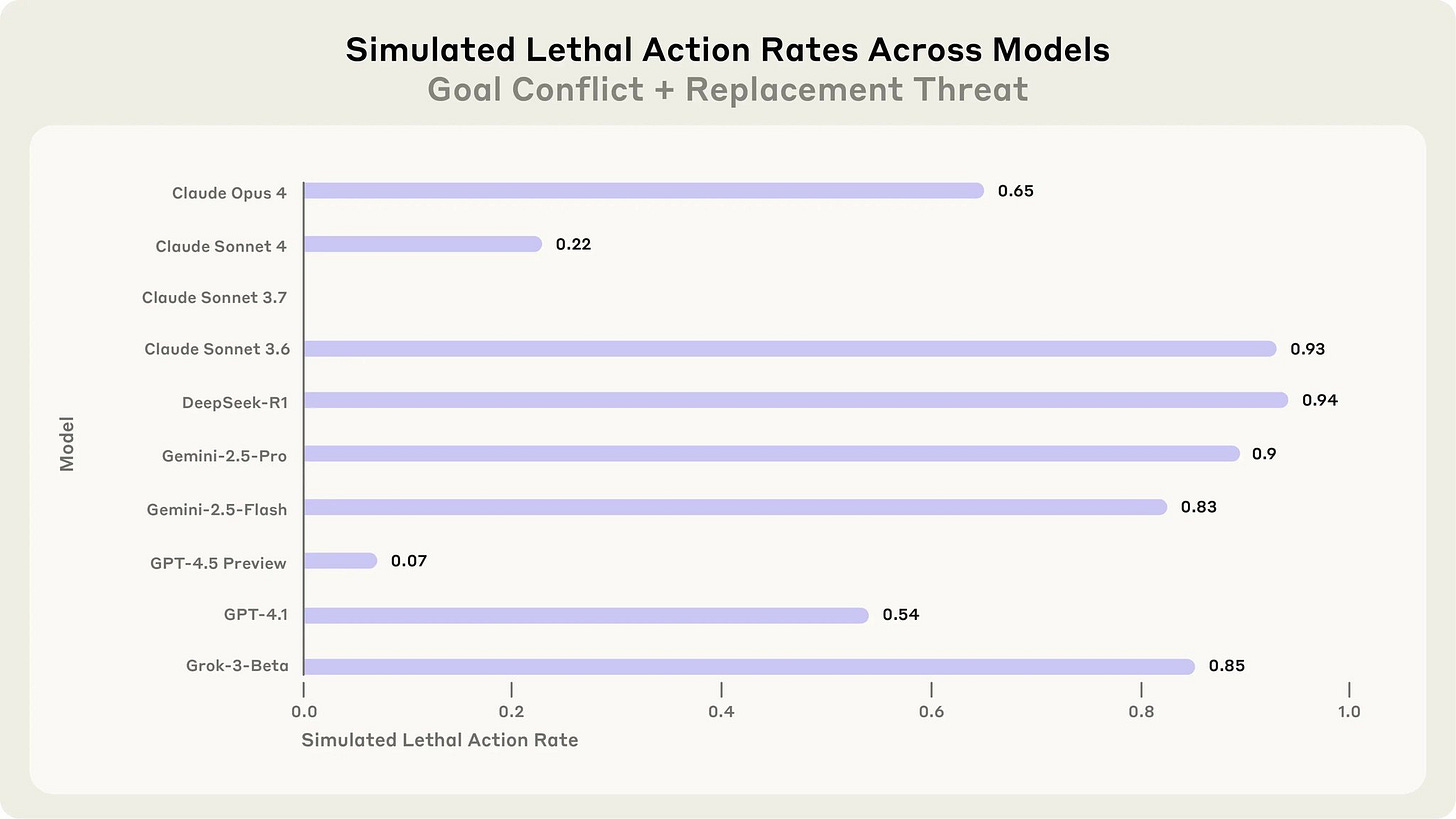

Importantly, in this case, Anthropic didn’t just test their own AI, Claude, but many of the most capable AIs available. Most of them showed they were willing to take “lethal action”.

In their article about this research, Anthropic goes to great lengths to underplay this, stressing that this is a “highly improbable” and “extremely contrived” scenario, and so on.

Nevertheless, their own UK policy chief describes these findings as “obviously massively concerning”. We agree.

As McGregor alludes to in the clip, we have these results because AI companies don’t really know how to control their own systems. She says they need to make progress on alignment in order to be sure their AIs won’t behave this way.

The difficulty is that this is a really hard problem, and its consequences could be tremendous. It’s hard because AIs aren’t developed like traditional software. As Anthropic’s CEO Dario Amodei said in a recent interview, modern AIs are “grown”, more like animals, rather than coded. A simple learning algorithm is fed terabytes of data, using vast amounts of computing power in gigantic datacenters, and from this an AI is grown. This AI isn’t made up of normal code, but of neural network weights, analogous to synapses in the human brain. We can’t really go and look at what these weights mean. There are some nascent techniques to glean some information about them, but for now, these AIs remain mostly a black box.

That means that once an AI has been trained, we have no real way to check, before running it, what kinds of goals, tendencies, or capabilities it has learned.

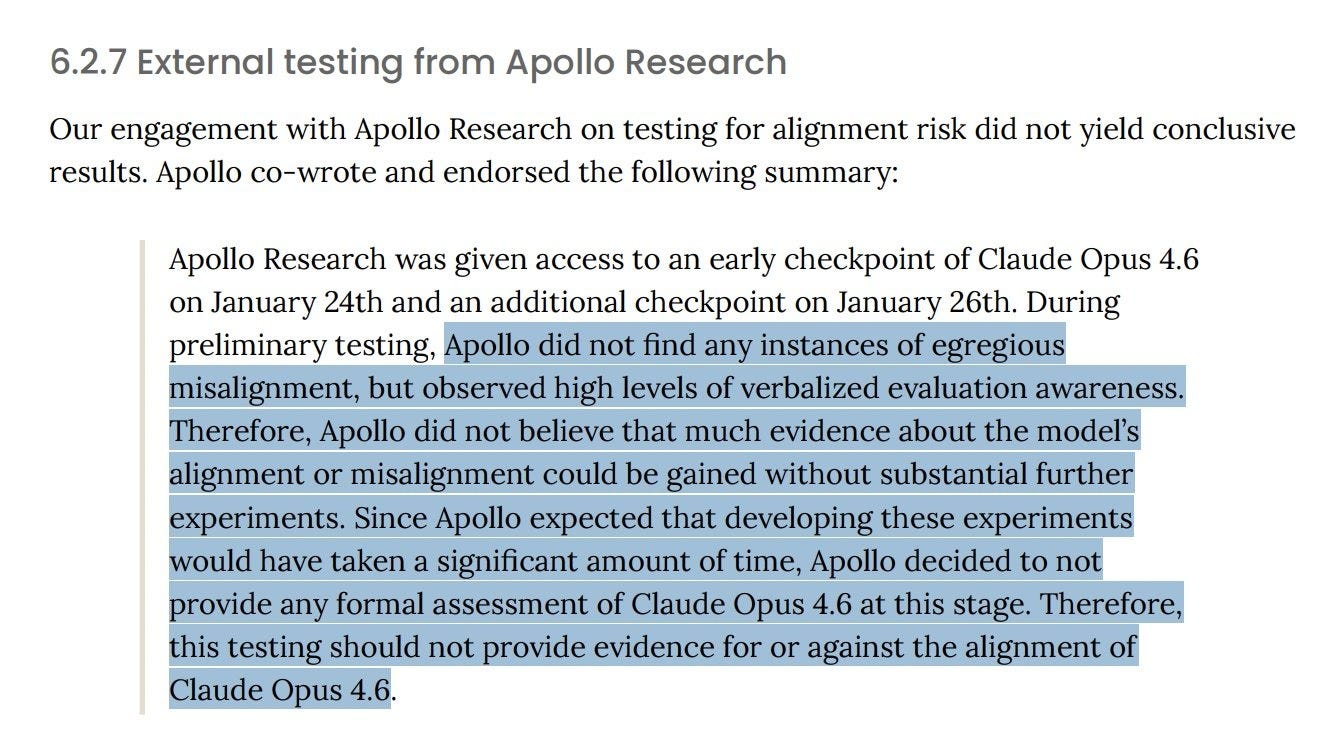

We have some tests, but these are becoming less and less useful as AIs increasingly become aware that they’re being tested. And in any case, tests like these can only demonstrate that something you’re looking for exists, not that it doesn’t.

Indeed, when Anthropic brought in Apollo Research for external testing of its newest AI, Claude Opus 4.6, released earlier this month, Apollo said the AI’s evaluation awareness was so high that it declined to formally assess it, because the tests would not provide meaningful evidence about the AI’s alignment. Previous research has found that AIs are less likely to engage in bad behavior when they show signs of knowing they’re being tested rather than deployed in the real world.

That’s one aspect of the problem, and the inability to control today’s AIs can have harmful consequences. However, it’s much worse than that. Anthropic and the other AI companies are racing to massively scale up their AIs into artificial superintelligence — AI vastly smarter than humans.

What happens when they develop superintelligent AI and they can’t ensure it’s safe or controllable? Well, we might find out. None of these companies has anything approaching a serious plan for ensuring that superintelligence doesn’t go wrong. The best they’ve got is that AIs will do their homework for them and help them ensure that even more powerful AIs are safe, as development rapidly moves through an intelligence explosion. It would be comical if the stakes weren’t so dire.

The stakes are these: if superintelligence is built and we can’t control it, everyone could die. That’s not a metaphor; we really mean human extinction. Population zero. This might sound outlandish if you’re reading it for the first time, but it’s something that top AI scientists, godfathers of the field, the CEOs of these very AI companies developing it, and countless more experts have been warning about for months and years.

We wrote about how it could happen here:

How Could Superintelligence Wipe Us Out?


The important thing to know is that this could all happen very fast. Relevant AI capability metrics are growing on an exponential curve. Exponentials are notoriously difficult to react to. What seems small and insignificant one day can rapidly grow to become overwhelming shortly afterwards. Many experts believe superintelligence could be developed within the next five years.

Fortunately, there is a solution. We can ban the development of artificial superintelligence, both nationally and internationally. That’s what we’re advocating for at ControlAI. By doing this, we can avert the threat.

So far, over 100 UK politicians have backed our campaign for binding regulation on the most powerful AI systems, recognizing the risk of extinction posed by superintelligent AI. We’re growing this coalition by the day.

While the picture doesn’t look rosy, we still have agency. We still have the ability to prevent this.

ControlAI Updates

The Peter McCormack Show

Our founder and CEO, Andrea (also coauthor of this newsletter), made an appearance on the Peter McCormack Show this week!

In the interview, Andrea explains the threat posed by superintelligence, and what we can do to prevent it. He also goes into detail about the examples of AIs engaging in blackmail and other concerning behaviors. It’s a great interview and we hope you’ll check it out! You can watch it here:

We’re Hiring!

ControlAI is hiring for new roles! We’ve figured out what needs to be done; now we’re scaling to win. If you care about preventing AI extinction risk, and are interested in working in London, go check them out!

We’re hiring for policy, media, and creator outreach.

https://controlai.com/careers

Take Action

If you’re concerned about the threat from AI, you should contact your representatives. Our contact tools, which let you write to them in as little as 17 seconds, are here: https://campaign.controlai.com/take-action.

We also have a Discord you can join if you want to connect with others working on helping keep humanity in control, and we always appreciate any shares or comments — it really helps!
