AI in 2026: What Comes Next

What experts and AI developers are saying about the future.

January is often a time for people to reflect and plan for the future. Last year, we collected a series of AI predictions by industry leaders, insiders, and experts. This year, we thought we’d do something similar!

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

Sam Altman

In his “gentle singularity” blog post in June, the OpenAI CEO predicted that AIs this year will be able to figure out “novel insights”. That refers to the ability of AIs to come up with new hypotheses, ideas, or solutions, going beyond current human knowledge.

“2025 has seen the arrival of agents that can do real cognitive work; writing computer code will never be the same. 2026 will likely see the arrival of systems that can figure out novel insights. 2027 may see the arrival of robots that can do tasks in the real world.”

The blog post also confirmed that OpenAI is focusing on developing artificial superintelligence — AI vastly smarter than humans — a technology that countless experts and AI CEOs, including Altman himself, have warned could lead to human extinction.

Altman has also said he thinks that the next big breakthrough in AI will be when AIs gain “infinite, perfect memory”.

In October, Altman said that OpenAI has set a goal to develop an intern-level AI research assistant by September 2026, and a “legitimate” AI researcher by 2028. In other words, OpenAI is focusing on automating the process of AI research, the work that goes into developing more powerful AIs. This is an incredibly dangerous goal to pursue, and could initiate an uncontrollable intelligence explosion. We wrote more about this here.

In the same month, he also restated his belief that the development of superintelligence is the greatest threat to the existence of mankind.

Dario Amodei

In May, Dario Amodei, the CEO of another one of the largest AI companies, Anthropic, told Axios that AI could wipe out half of all entry-level white-collar jobs, spiking unemployment to 10-20%, within one to five years. In November, he confirmed this prediction in an interview with 60 Minutes’ Anderson Cooper. In other words, he thinks there’s a chance that could happen as early as sometime this year.

Job losses aren’t the same as the risk of losing control to superintelligent AIs, which is what we’re focused on preventing at ControlAI, but the ability of AIs to be significantly economically useful does demonstrate how rapidly development is progressing. Unfortunately, progress in our ability to understand AIs, or towards ensuring that smarter-than-human AIs will be safe or controllable, is not moving nearly as fast.

In September, Dario Amodei said that he thought there was a 25% chance that AI goes “really, really badly” in response to a question about his “p(doom)”, an estimate of the chances of AI destroying humanity.

Elon Musk

According to a report in Business Insider, Musk said in an xAI all-hands meeting that Artificial General Intelligence could be achieved this year. He went on to restate this publicly in a “Moonshots with Peter Diamandis” podcast episode published earlier this month.

“I think we’ll hit AGI next year in ’26.”

He also said he’s confident that by 2030 AI will exceed the intelligence of all humans combined, which is one of the definitions of artificial superintelligence.

Musk has often warned that the development of artificial superintelligence could lead to human extinction, though like OpenAI, Anthropic, and others, his company is racing to build it.

Demis Hassabis

Google DeepMind CEO Demis Hassabis doesn’t expect superhuman AI to arrive as soon, telling WIRED last May that it might take 5 to 10 years for machines to surpass humans in all domains, adding: “That’s still quite imminent in the grand scheme of things … But it’s not tomorrow or next year.”

However, he has outlined a concrete milestone for this year: he says his company will establish its first automated laboratory in 2026, fully integrated with Google’s Gemini AI. He writes that the lab will focus on materials science research.

Other AI CEOs

Jensen Huang, the CEO of Nvidia, the company that designs many of the AI chips used to train and run AIs, has been predicting the “ChatGPT moment for robotics”, saying it’s “just around the corner”, though not specifying a concrete timeline.

Microsoft CEO Satya Nadella has framed 2026 as significant for the diffusion of the technology, writing about a “model overhang” where AI capability gains are outpacing the ability of industry to deploy them.

AI Futures

This one isn’t a forecast specific to 2026, but it’s significant as some of the best researched work on forecasting the trajectory of AI development.

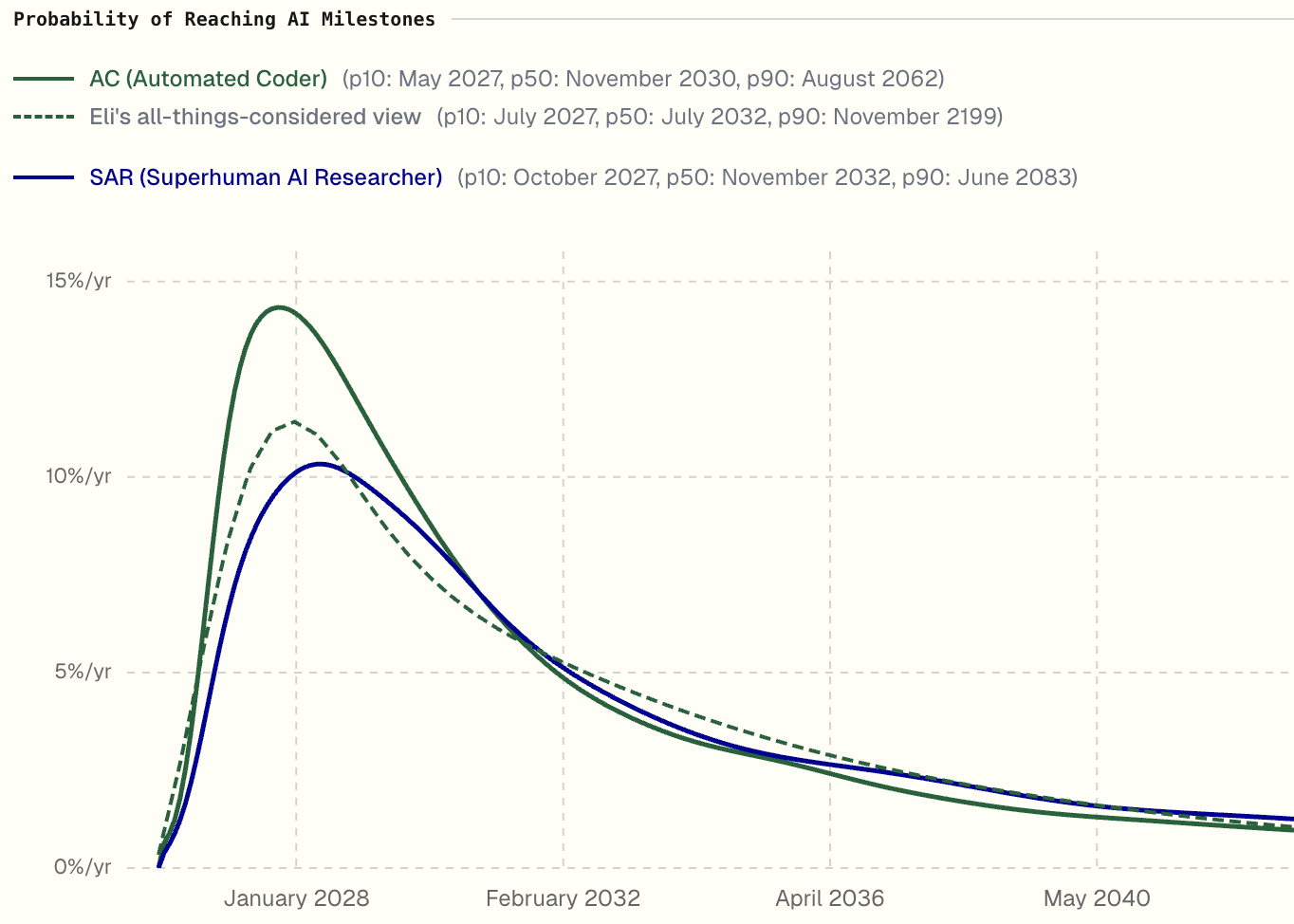

AI Futures Project, the authors of the scenario-forecast AI 2027, recently published an update to their modeling of AI timelines and takeoff (when different levels of capability will be achieved, and how rapidly things will accelerate).

Eli Lifland, lead author of the updated model, puts the most likely times (modal estimates) for Automated Coders and Superhuman AI Researchers being developed at around the end of 2027 and mid-2028, respectively. He further estimates that artificial superintelligence would probably be developed within 2.5 years of Automated Coders.

AI Futures defines Automated Coders as when an AI can fully automate an AI project’s coding work, while Superhuman AI Researchers are when an AI can fully automate AI R&D, with “research taste” matching the top human researcher.

Lifland’s median estimates for when these milestones will be passed are a few years later. A median is the date by which he estimates a milestone is as likely as not to have been passed, whereas a modal estimate is only the single most likely moment.

Overall this represents a small shift in the AI 2027 authors’ views towards there being a bit more time before very powerful AIs are developed, though it is still worryingly little.

Note that some commentators have confused what the title of AI 2027 represented (the most likely year for these milestones to be passed) with a median forecast, contrasted that with AI Futures’ updated medians, and so represented this shift as much larger than it really is.
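To see why a median can sit well after the most likely date, here is a minimal sketch using a right-skewed (lognormal) distribution over "years until a milestone". The distribution and the parameters `MU` and `SIGMA` are assumptions chosen purely for illustration. They are not AI Futures' model or numbers.

```python
import math

def lognormal_mode(mu: float, sigma: float) -> float:
    """Most likely value (peak of the density) of a lognormal distribution."""
    return math.exp(mu - sigma ** 2)

def lognormal_median(mu: float, sigma: float) -> float:
    """Value that the outcome is equally likely to fall before or after."""
    return math.exp(mu)

def lognormal_cdf(x: float, mu: float, sigma: float) -> float:
    """Probability that the outcome is at most x."""
    z = (math.log(x) - mu) / (sigma * math.sqrt(2.0))
    return 0.5 * (1.0 + math.erf(z))

# Hypothetical parameters, for illustration only (not taken from any forecast).
MU = math.log(4.0)  # puts the median at 4 years from now
SIGMA = 0.8         # controls how long the right tail is

mode_years = lognormal_mode(MU, SIGMA)
median_years = lognormal_median(MU, SIGMA)
prob_by_mode = lognormal_cdf(mode_years, MU, SIGMA)
prob_by_median = lognormal_cdf(median_years, MU, SIGMA)

print(f"mode:   {mode_years:.1f} years (chance of arrival by then: {prob_by_mode:.0%})")
print(f"median: {median_years:.1f} years (chance of arrival by then: {prob_by_median:.0%})")
```

In this toy distribution the single most likely moment comes well before the point at which arrival is as likely as not, because the long right tail holds much of the probability. The same logic explains how a forecaster's modal year can be earlier than their median year.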

“When we published AI 2027, we thought 2027 was one of the most likely years AGI would arrive. But it was not our **median** forecast, those ranged among authors from 2028-2035. Now our medians have moved back a bit, but our most likely year is still ~2028.”

You can read our initial coverage of AI 2027 here, or our interview with Eli Lifland here:

Special Edition: The Future of AI and Humanity, with Eli Lifland

More Predictions

AI expert Gary Marcus predicts that AGI won’t be developed in 2026, and that no country will take a decisive lead in an AI “race”.

The risk of large-scale AI-assisted cyberattacks appears to be increasing. In late November, the expert forecasting team Sentinel Global Risks Watch estimated:

“In light of increasing AI capabilities and last week’s Anthropic warning about AI-orchestrated cyber espionage, forecasters believe there’s a 51% chance (45% to 60%) that there will be an AI-assisted cyberattack causing at least $1 billion in damages over the next three months, slightly up from a 44% chance (37% to 50%) in week 35 of this year.”

More recently, Sentinel estimated that there was a 44% chance a major AI company would have an IPO in 2026, and that there was a 60% chance that the US would have federal or state AI regulation in force at the end of the year which requires companies to “publish and follow plans for mitigating the risk that their AIs might cause catastrophic damages”. Currently, California does require this.

Conclusion

A range of predictions have been made about what will happen this year and beyond. The future is inherently uncertain, but one thing is clear: AI capabilities are advancing rapidly across the board.

As we reported last week, the UK’s AI Security Institute, which tests and studies advanced AIs to understand and reduce safety risks, recently published a major report in which it prominently stated:

“AI capabilities are improving rapidly across all tested domains. Performance in some areas is doubling every eight months, and expert baselines are being surpassed rapidly.”
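To put the “doubling every eight months” figure in perspective, the short sketch below computes the growth factor that such a trend would imply over a few time spans. It assumes the doubling rate stays constant, which is an illustrative assumption of ours and not a claim made in the report. The function name `growth_factor` is hypothetical.

```python
def growth_factor(months: float, doubling_months: float = 8.0) -> float:
    """Multiplier after `months`, assuming one doubling every `doubling_months`."""
    return 2.0 ** (months / doubling_months)

# Assumption: the eight-month doubling time holds steady over the whole period.
for months in (8, 12, 24):
    print(f"after {months} months: x{growth_factor(months):.2f}")
```

Under that assumption, performance would be a little under three times higher after one year, and eight times higher after two.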

What’s also clear is that despite the rapid approach of superintelligent AI, nobody knows how to ensure that such systems will be safe and controllable. OpenAI itself admitted this in a recent blog post:

“Obviously, no one should deploy superintelligent systems without being able to robustly align and control them, and this requires more technical work.” [emphasis added]

We’d also highlight that just a couple of weeks ago, David “davidad” Dalrymple, one of the world’s leading AI experts and a programme director at the UK’s Advanced Research and Invention Agency, told the Guardian that things are moving really fast and we might not have time to get ahead of it in terms of safety.

Take Action

If you find this article useful, we encourage you to share it with your friends! If you’re concerned about the threat posed by AI and want to do something about it, we also invite you to contact your lawmakers. We have tools that enable you to do this in as little as 17 seconds.

And if you have 5 minutes per week to spend on helping make a difference, we encourage you to sign up to our Microcommit project! Once per week we’ll send you a small number of easy tasks you can do to help. You don’t even have to do the tasks, just acknowledging them makes you part of the team.

We also have a Discord you can join if you want to connect with others working on helping keep humanity in control.

AI is moving way too fast. It’s dangerous and doesn’t have the heart of a human being. People need to stop the manipulations of these rich people making way too much money, and these AI plants are so bad for the earth!

Excellent compilation of the 'Software Timelines.' It is fascinating to contrast these predictions (Musk/Altman predicting 2026) with the 'Hardware Timelines' I track.

Yesterday, TSMC’s CEO C.C. Wei explicitly stated that advanced chip supply is physically constrained until 2028/2029. Combine that with the 60-week lead times for high-voltage transformers on the US grid, and you realize we are hitting a hard physical wall.

While everyone looks to Congress for AI regulation, the ultimate regulator right now is Physics. You can't code your way around a copper shortage or a foundry backlog.

I track these physical bottlenecks at The Sovereign Pillar. The 'AI Explosion' is going to be a lot slower than the software CEOs think simply because the atoms can't move as fast as the bits.